Wonder Studio: Revolutionizing VFX and Character Animation with AI

Wonder Studio is revolutionizing VFX and character animation using AI. Explore its advanced motion capture, light mapping, and CG integration tools that simplify film production and empower creators globally.


Sachin K Chaurasiya

6/21/2025 · 5 min read

What is Wonder Studio?

Wonder Studio is an AI-driven platform that allows users to seamlessly animate, light, and integrate 3D characters into live-action scenes with minimal manual input. Its core mission? To democratize VFX and reduce the traditionally high time and financial cost of character animation and compositing.

With just a single camera and a live-action clip, Wonder Studio automatically detects the actor's performance and replaces them with a CG character, matching lighting, camera movement, and environmental cues with little or no manual editing.

Key Features & Capabilities

Here’s what makes Wonder Studio stand apart in the AI content creation ecosystem:

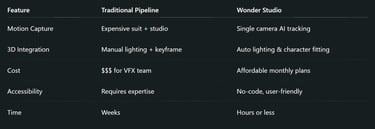

Automatic Motion Capture Without a Suit

Wonder Studio can analyze standard video footage, detect human movement, and automatically generate motion capture data for 3D character rigs. No need for mocap suits or specialized studio equipment.

One-Click Character Replacement

Simply upload your scene and select a character model (custom or prebuilt). Wonder Studio will intelligently replace the human actor with your chosen 3D character, complete with natural motion, perspective, and shadows.

AI-Driven Camera & Light Matching

It matches the lighting, shadows, reflections, and camera angles of the original footage, so your CG character fits seamlessly into the environment.

Multichannel Exporting for Professionals

While beginners can work entirely within the browser, pros can export multiple passes (motion, alpha, shadows, etc.) for use in professional VFX pipelines built on tools like Blender, Unreal Engine, and After Effects.

Cloud-Based Workflow

No heavy software or plugins. Wonder Studio is entirely cloud-based, allowing users to upload footage, make edits, and render animations directly in their browser.

Use Cases: From Hollywood to Indie Creators

Wonder Studio’s AI is being rapidly adopted across different segments of the entertainment and content creation world:

Filmmakers: Indie creators can now produce scenes that once required large production budgets.

Game Developers: Prototype cinematic sequences quickly without a full animation team.

YouTubers & Influencers: Add sci-fi or fantasy characters into real-world vlogs or sketches.

Studios & Agencies: Speed up workflows and reduce VFX costs in commercials or pilot episodes.

Wonder Studio has even caught the attention of Hollywood, with filmmakers exploring it as a previsualization tool or for rapid ideation during development phases.

How Wonder Studio Works: The Pipeline Breakdown

Upload Video: A live-action shot of an actor walking, standing, or interacting.

Select or Upload a 3D Character: Choose from a library or upload your own rigged model.

AI Analysis: The AI extracts motion data, lighting, and camera behavior.

Character Insertion: Your selected 3D character is inserted into the scene in place of the actor.

Editing & Exporting: Fine-tune if needed and export layered files for detailed post-production.

Technical Depth: The AI Behind the Scenes

Wonder Studio integrates multiple cutting-edge technologies under the hood:

Computer vision for pose estimation and segmentation.

3D reconstruction for motion tracking and camera perspective alignment.

Generative AI & neural rendering for shadow casting, ambient occlusion, and realistic lighting.

Cloud GPU rendering to process scenes faster without needing local computational power.

Limitations & Considerations

While Wonder Studio is powerful, it's not a full replacement for professional-grade VFX in all contexts:

It may struggle with complex movements such as flips, and with crowd scenes.

Custom characters must be pre-rigged for seamless use.

Real-time rendering is limited compared to native engines like Unreal.

Features such as facial animation and lip sync are still in development.

Actor-to-Rig Mapping via Pose Estimation AI + Inverse Kinematics

Unlike conventional motion capture that uses body markers, Wonder Studio uses deep-learning-based, multi-frame 2D/3D pose estimation (akin to OpenPose or DensePose, but tuned for cinematic fidelity). This allows it to infer limb orientation and skeletal motion from flat footage.

Once the human actor’s skeleton is estimated, Wonder Studio applies inverse kinematics (IK) to retarget those movements to a custom CG rig.

It includes a corrective solver that ensures natural transitions and limits unnatural distortions across character morphologies (e.g., humanoid vs. alien).
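To make the retargeting step concrete, here is a minimal sketch of inverse kinematics for a planar two-bone limb (for example, shoulder to elbow to wrist). It is not Wonder Studio's solver; the function names, the 2D simplification, and the reach-based scaling of the actor's wrist position are all assumptions made for illustration.

```python
import math

def two_bone_ik(target_x, target_y, upper_len, lower_len):
    """Solve a planar two-bone chain rooted at the origin.

    Returns (shoulder_angle, elbow_bend) in radians. The target distance is
    clamped to the reachable range so the solver never fails outright.
    """
    dist = math.hypot(target_x, target_y)
    max_reach = upper_len + lower_len
    min_reach = max(abs(upper_len - lower_len), 1e-9)
    dist = max(min(dist, max_reach), min_reach)

    # Law of cosines: interior angle at the elbow.
    cos_elbow = (upper_len**2 + lower_len**2 - dist**2) / (2 * upper_len * lower_len)
    elbow_interior = math.acos(max(-1.0, min(1.0, cos_elbow)))
    elbow_bend = math.pi - elbow_interior  # rotation of the lower bone relative to the upper bone

    # Angle between the upper bone and the line from root to target.
    cos_inner = (upper_len**2 + dist**2 - lower_len**2) / (2 * upper_len * dist)
    inner = math.acos(max(-1.0, min(1.0, cos_inner)))
    shoulder_angle = math.atan2(target_y, target_x) - inner
    return shoulder_angle, elbow_bend

def forward_kinematics(shoulder_angle, elbow_bend, upper_len, lower_len):
    """Return the end-effector (wrist) position for the given joint angles."""
    elbow_x = upper_len * math.cos(shoulder_angle)
    elbow_y = upper_len * math.sin(shoulder_angle)
    wrist_x = elbow_x + lower_len * math.cos(shoulder_angle + elbow_bend)
    wrist_y = elbow_y + lower_len * math.sin(shoulder_angle + elbow_bend)
    return wrist_x, wrist_y

def retarget_wrist(actor_wrist, actor_lengths, rig_lengths):
    """Hypothetical retargeting rule: scale the actor's wrist position by the
    ratio of total arm lengths, then solve IK on the rig's own proportions."""
    scale = sum(rig_lengths) / sum(actor_lengths)
    target = (actor_wrist[0] * scale, actor_wrist[1] * scale)
    return two_bone_ik(target[0], target[1], *rig_lengths), target

# Example: an actor's arm (0.30 m + 0.25 m) drives a rig with longer limbs.
angles, target = retarget_wrist((0.3, 0.2), (0.30, 0.25), (0.6, 0.5))
```

Solving on the rig's own bone lengths, rather than copying the actor's joint angles, is what lets a character with different proportions still reach a sensible pose.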

Advanced Semantic Segmentation & Temporal Consistency

For replacing an actor with a CG model, Wonder Studio performs semantic segmentation of the human subject over time:

It uses transformer-based video segmentation models that learn temporal dependencies across frames, ensuring continuity in complex motions like overlapping limbs or occlusion.

This prevents "jittering" or misalignment that would otherwise happen in traditional frame-by-frame compositing.
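The effect of temporal consistency can be illustrated with something far simpler than a transformer: an exponential moving average over per-pixel foreground probabilities. The sketch below is only a stand-in for the learned temporal models described above; the function name, the `alpha` blending weight, and the 0.5 threshold are assumptions.

```python
def smooth_masks(frames, alpha=0.5, threshold=0.5):
    """Temporally smooth a sequence of soft masks.

    frames: list of 2D lists holding per-pixel foreground probabilities in [0, 1].
    alpha:  weight of the current frame; lower values smooth more strongly.
    Returns a list of binary masks (2D lists of 0/1), one per input frame.
    """
    smoothed = []
    state = None  # running average of probabilities across frames
    for frame in frames:
        if state is None:
            state = [row[:] for row in frame]
        else:
            state = [
                [alpha * p + (1 - alpha) * s for p, s in zip(row, state_row)]
                for row, state_row in zip(frame, state)
            ]
        smoothed.append([[1 if v >= threshold else 0 for v in row] for row in state])
    return smoothed

# A 1x2 "video": the first pixel flickers in frame 2, the second is background.
frames = [[[0.9, 0.1]], [[0.2, 0.1]], [[0.9, 0.1]]]
masks = smooth_masks(frames)
```

Thresholding each frame independently would drop the first pixel in the middle frame; carrying state across frames keeps it in the foreground, which is the kind of jitter the section describes.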

Environment Light Probes from RGB Footage

One of Wonder Studio’s most advanced tricks is estimating 3D lighting from 2D footage. Using an AI-based light probe extraction system, it approximates the position, intensity, and color temperature of lights in the original scene.

This is done using:

Neural light transport estimation: AI models trained on large datasets to infer where light sources exist based on object shadows and color reflections.

Spherical Harmonics Lighting: For real-time approximation of global illumination applied to the 3D character.

This level of environmental fidelity typically requires HDRI setups or light mapping in traditional pipelines.
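As a rough illustration of spherical harmonics lighting, the sketch below evaluates diffuse irradiance for a surface normal from nine SH coefficients (bands 0 to 2), using the standard real SH basis constants and the cosine-lobe band weights π, 2π/3, and π/4. It handles a single color channel, and the coefficient values in the example are invented; how Wonder Studio actually stores or fits its lighting is not documented here.

```python
import math

# Cosine-lobe convolution weights for SH bands 0, 1, and 2.
SH_BAND_WEIGHTS = (math.pi, 2 * math.pi / 3, math.pi / 4)
# Band index of each of the nine coefficients, in the order used by sh_basis.
SH_BANDS = (0, 1, 1, 1, 2, 2, 2, 2, 2)

def sh_basis(normal):
    """Real spherical harmonics basis (bands 0-2) for a unit vector (x, y, z)."""
    x, y, z = normal
    return [
        0.282095,
        0.488603 * y,
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,
        1.092548 * y * z,
        0.315392 * (3 * z * z - 1),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ]

def irradiance(coeffs, normal):
    """Diffuse irradiance at a surface with the given unit normal.

    coeffs: nine SH lighting coefficients for one color channel.
    """
    basis = sh_basis(normal)
    return sum(
        SH_BAND_WEIGHTS[band] * c * y
        for band, c, y in zip(SH_BANDS, coeffs, basis)
    )

# Example: ambient light plus a component arriving from +z (values are made up).
lighting = [1.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
facing_light = irradiance(lighting, (0.0, 0.0, 1.0))
facing_away = irradiance(lighting, (0.0, 0.0, -1.0))
```

Because the whole environment is compressed into nine numbers per channel, shading a character this way is cheap, at the cost of capturing only low-frequency lighting.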

AI-Driven Camera Tracking Without Markers

Wonder Studio extracts camera motion directly from a 2D video using monocular visual SLAM (Simultaneous Localization and Mapping). This process estimates:

The camera’s 6DoF (degrees of freedom) movement.

Focal length and lens distortion correction.

Real-time parallax estimation.

It then matches the inserted 3D character’s perspective and movement according to the original cinematography. This is critical for realism, especially in handheld shots.
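To show why the recovered camera matters, here is a minimal pinhole-projection sketch: given a camera pose (position plus a world-to-camera rotation, together the 6DoF) and a focal length in pixels, it maps a 3D point on the CG character to a pixel in the frame. Lens distortion is ignored, and the function name, axis convention (camera looks along +z, x right, y down), and sample numbers are assumptions for illustration.

```python
def project_point(point_world, cam_position, world_to_cam, focal_px, principal_point):
    """Project a 3D world point into pixel coordinates with a pinhole camera.

    world_to_cam: 3x3 rotation matrix (list of rows) mapping world axes to camera axes.
    Returns (u, v) in pixels, or None if the point is behind the camera.
    """
    # Translate into the camera's frame of reference, then rotate.
    rel = [p - c for p, c in zip(point_world, cam_position)]
    x, y, z = (sum(r * v for r, v in zip(row, rel)) for row in world_to_cam)
    if z <= 0:
        return None
    cx, cy = principal_point
    return (focal_px * x / z + cx, focal_px * y / z + cy)

IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

# A point 1 unit to the right and 5 units ahead of a camera at the origin,
# for a 1920x1080 frame with an assumed focal length of 1000 px.
pixel = project_point((1.0, 0.0, 5.0), (0.0, 0.0, 0.0), IDENTITY, 1000.0, (960.0, 540.0))

# If the tracked camera moves 1 unit to the right, the same point lands at frame center.
pixel_after_move = project_point((1.0, 0.0, 5.0), (1.0, 0.0, 0.0), IDENTITY, 1000.0, (960.0, 540.0))
```

Rendering the character through the same per-frame pose and focal length as the real camera is what keeps it locked to the plate as the shot moves.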

Real-Time Neural Retargeting with Style Preservation

When animating stylized or non-humanoid characters, Wonder Studio uses style-preserving retargeting, which includes:

Latent space deformation networks, ensuring the CG character maintains its own unique movement flavor while still mimicking the actor’s gestures.

This is useful for cartoony or fantasy characters that require exaggerated or non-physically accurate motions (e.g., elastic limbs, floating).

Integrated DeepFake & Facial Expression Inference (Beta/Upcoming)

While not fully public, Wonder Studio’s roadmap includes facial expression mapping using video-to-expression inference based on 3DMM (3D Morphable Models).

This would allow for full performance capture without markers, converting actor facial expressions to CG face rigs.

Combined with speech-to-lip-sync models (such as Wav2Lip or tools from RADiCAL), it’s moving toward end-to-end CG performance synthesis.

Pipeline Compatibility and Mesh Interoperability

For professionals, Wonder Studio offers:

FBX/USDZ character support for direct import/export to Blender, Maya, Unreal, and Unity.

Multi-channel EXR file exports: Shadow pass, depth pass, alpha mask, motion vectors, and ambient occlusion—all auto-generated by its rendering engine.

GPU Rendering via CloudStack: Utilizes NVIDIA RTX-based clusters in the backend for physically-based rendering (PBR) of final shots.
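As a sketch of how exported passes might be recombined downstream, the function below composites one pixel: it darkens the background plate using the shadow pass, then layers the character on top with a standard "over" operation. The pass semantics (straight, unpremultiplied alpha; shadow values from 0 to 1) and the `shadow_strength` parameter are assumptions, and reading actual EXR files would need an image I/O library that is not shown here.

```python
def composite_pixel(plate_rgb, char_rgb, alpha, shadow, shadow_strength=0.6):
    """Composite a CG character pixel over a live-action plate pixel.

    plate_rgb, char_rgb: (r, g, b) tuples with values in [0, 1].
    alpha:  character coverage from the alpha pass (0 = plate only, 1 = character only).
    shadow: shadow pass value (0 = no shadow, 1 = full shadow on the plate).
    """
    # Darken the plate where the character casts a shadow.
    shadowed_plate = tuple(c * (1.0 - shadow * shadow_strength) for c in plate_rgb)
    # Standard "over" blend with straight (unpremultiplied) alpha.
    return tuple(
        fg * alpha + bg * (1.0 - alpha)
        for fg, bg in zip(char_rgb, shadowed_plate)
    )

# A shadowed patch of ground next to the character (no character coverage).
ground_in_shadow = composite_pixel((0.8, 0.8, 0.8), (0.0, 0.0, 0.0), alpha=0.0, shadow=1.0, shadow_strength=0.5)
```

Keeping shadows and alpha as separate passes lets a compositor adjust shadow density or edge softness without re-rendering the character.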

AI Architecture Speculation (Likely Foundation)

While not publicly disclosed in full, based on outputs and speed, Wonder Studio is likely using a combination of:

Vision Transformer (ViT) or Video Swin Transformer backbones for pose and segmentation.

NeRF-based depth approximation for scenes where depth is critical.

Proprietary actor tracking models built on CLIP-style embedding + temporal transformers, trained on actor movement and lighting datasets.

The Future of Wonder Studio and AI VFX

As AI models become more accurate and multimodal, tools like Wonder Studio will likely evolve into full AI film studios in the cloud. Expect more support for facial expressions, real-time collaboration, and deeper integration with game engines and metaverse platforms.

Its creators, Wonder Dynamics, are also exploring how AI can assist in storyboarding, scene breakdowns, and even automated editing, expanding the scope of intelligent automation in storytelling.

Wonder Studio isn’t just an AI tool—it’s a cinematic equalizer. By putting powerful VFX automation into the hands of creators everywhere, it’s dismantling traditional barriers of cost, time, and skill.

Whether you're building your first short film or augmenting a blockbuster post-production pipeline, Wonder Studio is pioneering a new frontier where imagination meets automation—without compromise.


All © Copyright reserved by Accessible-Learning Hub
