Veo AI vs Sora AI – Features, Pricing & Performance Breakdown

Discover the in-depth comparison of Veo AI vs Sora AI, two cutting-edge text-to-video generators. Explore architecture, features, pricing, and use-case insights.


Sachin K Chaurasiya

6/12/2025 · 6 min read

Veo AI and Sora AI are both cutting-edge generative models that turn text (and in some cases images) into short video clips. While each has demonstrated impressive creativity and technical prowess, they differ in origin, capabilities, access, and ideal use cases. Below, we explore each model in depth, compare their strengths and limitations, and help you decide which tool best suits your video-creation needs.

What Is Veo AI?

Veo AI is Google’s state-of-the-art text-to-video generator, developed by DeepMind and integrated into Gemini Advanced and Vertex AI.

Capabilities

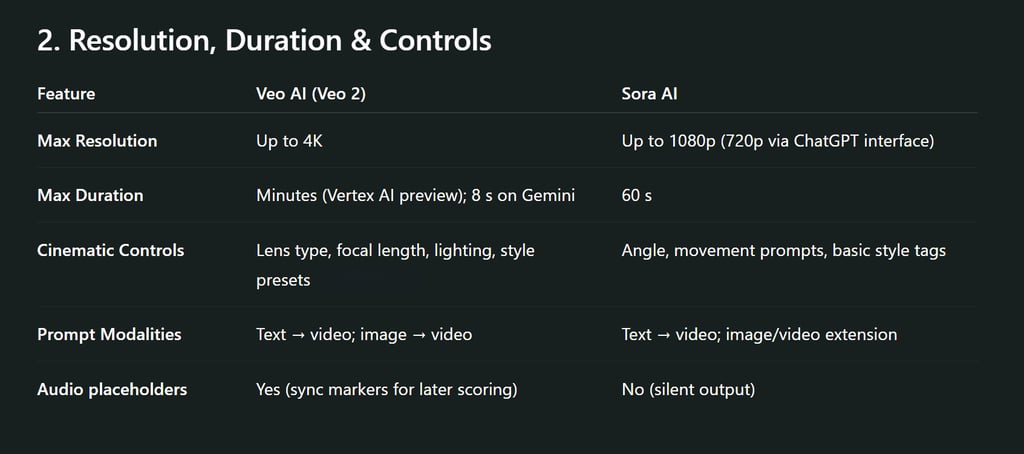

Generates videos up to 4K resolution and, in private previews, can extend clips to minutes in length.

Understands nuanced cinematic language—lens types, camera movements, and lighting effects—so you can request “18 mm wide-angle close-up with shallow depth of field” or “aerial timelapse of a cityscape at sunset.”

Offers extensive creative controls: specify style (documentary, animation, film noir), framing, pacing, and even soundtrack placeholders.
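One way to keep requests like these consistent is to assemble the prompt from named fields. The sketch below is only an illustration: `ShotSpec` and `build_prompt` are hypothetical helpers, not part of any Google API, and the resulting string is simply text you would paste into whichever Veo interface you have access to.

```python
from dataclasses import dataclass


@dataclass
class ShotSpec:
    """Hypothetical container for the cinematic details of one shot."""
    subject: str
    lens: str = ""
    camera_move: str = ""
    lighting: str = ""
    style: str = ""


def build_prompt(shot: ShotSpec) -> str:
    """Join the non-empty fields into a single comma-separated prompt."""
    parts = [shot.subject, shot.lens, shot.camera_move, shot.lighting, shot.style]
    return ", ".join(p.strip() for p in parts if p.strip())


prompt = build_prompt(ShotSpec(
    subject="aerial timelapse of a cityscape at sunset",
    lens="18 mm wide-angle",
    lighting="warm golden-hour light",
    style="documentary",
))
print(prompt)
```

Keeping lens, lighting, and style in separate fields makes it easy to vary one element at a time and compare the resulting clips.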

Access & Pricing

Available today to Gemini Advanced users (8-second, 720p videos) and in private preview on Vertex AI for longer, higher-resolution clips.

Honor 400 series phones will ship with a built-in Veo image-to-video feature, free for the first two months, then subscription-based.

Limitations

Early previews show some artifacts and inconsistencies in complex scenes (e.g., unexpected distortions or surreal movements).

Requires Google Cloud approval for people-centric prompts (to ensure ethical safeguards).

Pros & Cons

Pros: High resolution, robust cinematic controls, and integration with Google’s cloud ecosystem.

Cons: Limited public availability, potential surreal artifacts, subscription complexity.

Core Architecture & Training

Model lineage: Built by DeepMind, Veo 2 refines the original Veo architecture, combining latent diffusion in a 3D spatiotemporal latent space with a dedicated “video decompressor” to produce full-resolution frames.

Training data: Trained on billions of frames from licensed and public-domain footage, plus synthetic datasets to bolster physics consistency and camera-motion realism.

Parameters & hardware: Google hasn’t published exact parameter counts. Inference benchmarks run on TPU v4/v5 pods and deliver roughly 1–2 seconds of generated video per second of wall-clock time at 1080p, with marginally longer latency at 4K.

Customization & Fine-Tuning

Vertex AI integration: Developers can fine-tune Veo on domain-specific footage (e.g., sports highlights, product demos) via Vertex AI’s Custom Training pipelines.

Parameter tweaking: Adjust noise schedules, aspect ratios, shot pacing, and even per-frame style interpolation through the Gemini API.

Enterprise & Developer Experience

SDKs & CLI: Official Google Cloud SDK, REST API, and gcloud ai video CLI support batch generation, streaming previews, and custom metadata injection (e.g., ad tags).

Collaboration: Integration with Google AI Studio and VideoFX enables real-time team collaboration, versioning, and A/B testing of video variations.

Safeguards & Ethical Considerations

DeepMind SynthID watermark: Embeds an invisible digital watermark in every frame to verify provenance and reduce deepfake risks.

Content filters: Prohibits sensitive content (hate, violence, adult themes) via both prompt-level screening and post-generation review for approved enterprise users.

Performance Benchmarks & Quality Metrics

User study: In Google’s internal A/B tests, 85% of viewers rated Veo-generated clips as “indistinguishable from human-shot footage” at 1080p, and 62% did so at 4K.

Physics coherence: Achieved a 78% pass rate on simulated physics scenarios (e.g., bouncing balls, fluid flow), compared to 45% for Veo 1.

Roadmap & Future Directions

Sound integration: Q3 2025 preview of AI-synchronized audio generation (voiceover and ambient soundscapes).

Live video editing: Real-time in-browser clip refinement and “object swap” features.

What Is Sora AI?

Sora AI is OpenAI’s diffusion-transformer model designed to generate and extend videos from text and image prompts.

Capabilities

Creates videos up to 60 seconds long at up to 720p, with coherent camera movements and lifelike motion.

Built on the technology behind DALL·E 3, it uses a latent diffusion approach where video is generated in 3D “patches” and then decompressed into full frames.

Includes C2PA metadata watermarking to clearly tag AI-generated content and reduce misinformation.

Access & Pricing

Released publicly in December 2024 for ChatGPT Plus ($20/month) and Pro ($200/month) subscribers.

Available via the ChatGPT interface—simply describe your scene and receive a downloadable MP4 (up to ten videos per day for Plus users).

Limitations

Struggles with complex physics (e.g., multiple interacting objects) and precise directional consistency (left vs. right).

Faces occasional “uncanny valley” artifacts in close-up human faces or intricate textures.

Pros & Cons

Pros: Longer clip length, easy ChatGPT integration, clear watermarking for responsibly generated content.

Cons: Lower resolution, occasional physics inconsistencies, and fewer fine-grain creative controls.

Core Architecture & Training

Diffusion-transformer hybrid: Sora uses a single transformer denoiser within a latent diffusion framework, operating on 3D patches (width × height × time) and then applying a bespoke video decompressor to reconstruct RGB frames.
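To get a feel for what “operating on 3D patches” means for sequence length, the sketch below counts how many non-overlapping spacetime patches a latent video would yield. The latent dimensions and patch sizes are made-up values for illustration, not Sora’s actual configuration, and `count_spacetime_patches` is a hypothetical helper.

```python
def count_spacetime_patches(frames, height, width, patch_t, patch_h, patch_w):
    """Count non-overlapping 3D patches in a (frames, height, width) latent grid.

    Assumes each dimension divides evenly by its patch size.
    """
    for size, patch in ((frames, patch_t), (height, patch_h), (width, patch_w)):
        if size % patch != 0:
            raise ValueError("latent dimensions must be divisible by patch sizes")
    return (frames // patch_t) * (height // patch_h) * (width // patch_w)


# Illustrative numbers only: a 16-frame, 90x160 latent cut into 2x2x2 patches.
tokens = count_spacetime_patches(16, 90, 160, 2, 2, 2)
print(tokens)  # 8 * 45 * 80 = 28800
```

Each patch becomes one token for the transformer, so doubling the clip length or either spatial dimension doubles the number of tokens the denoiser has to attend over.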

Data augmentation: Employs “re-captioning” by running a video-to-text model over training clips to generate richly detailed captions, improving prompt adherence and scene understanding.

Compute & speed: Deployed on clusters of A100 GPUs, Sora averages roughly 0.5–1 second of output per second of wall-clock time at 720p, with a target of halving generation time by next quarter as optimizations roll out.
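Throughput figures like these translate directly into waiting time. The following sketch converts a throughput range into a best-case and worst-case generation time; `generation_time_range` is a hypothetical helper, and the rates plugged in are the ones quoted in this article rather than independently measured values.

```python
def generation_time_range(clip_seconds, min_rate, max_rate):
    """Return (fastest, slowest) wall-clock seconds needed for a clip.

    Rates are seconds of video produced per second of wall-clock time.
    """
    if min_rate <= 0 or max_rate < min_rate:
        raise ValueError("rates must satisfy 0 < min_rate <= max_rate")
    return clip_seconds / max_rate, clip_seconds / min_rate


# Rates as quoted in this article: Sora 0.5-1x at 720p, Veo 1-2x at 1080p.
sora_fast, sora_slow = generation_time_range(60, 0.5, 1.0)
veo_fast, veo_slow = generation_time_range(8, 1.0, 2.0)
print(f"60 s Sora clip: {sora_fast:.0f}-{sora_slow:.0f} s")
print(f"8 s Veo clip: {veo_fast:.0f}-{veo_slow:.0f} s")
```

Under those quoted rates, a full 60-second Sora clip would take between one and two minutes to generate, while an 8-second Veo clip would take between 4 and 8 seconds.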

Customization & Fine-Tuning

ChatGPT SDK (coming soon): OpenAI plans an API offering that mirrors the ChatGPT plugin architecture, allowing enterprises to embed Sora’s generation calls into their own platforms.

Prompt engineering: Leverages structured prompt templates and “system instructions” to steer video mood (e.g., “filmic drama” vs. “animated tutorial”).

Enterprise & Developer Experience

Plug-and-play: Accessible today through ChatGPT Plus/Pro with simple prompts—no setup required.

Enterprise rollout: Early-access customers report embedding Sora outputs into marketing workflows and e-learning modules, with proprietary SDKs on the roadmap for Q3 2025.

Safeguards & Ethical Considerations

C2PA metadata tags: Automatically tags outputs so platforms (like social media) can detect AI-origin content.

Prompt restrictions: Blocks requests featuring minors, explicit content, or copyrighted IP; human-centric scenes are vetted by a “red team” before public rollout.

Performance Benchmarks & Quality Metrics

Prompt fidelity: OpenAI reports a 92% relevance score (via crowd-sourced ratings) on single-object prompts, dropping to 64% on multi-object or causal-sequence tasks.

Artifact rate: Close-up human faces exhibit “uncanny valley” glitches in ~22% of clips, down from 35% in the first public release.

Roadmap & Future Directions

Higher resolution: Planned upgrade to 1080p by default in chat, with optional 4K API by year-end.

Collaborative prompts: Multi-user “storyboarding” sessions via ChatGPT, enabling iterative group editing.

Choose Veo AI if you need cinematic precision, high resolution (4K), robust API/SDK support, and enterprise-grade customization.

Choose Sora AI if you prefer speed and simplicity within a chat interface, need up to 60 s clips at 720–1080p, and value built-in provenance tagging for responsible sharing.

Both platforms continue to push the envelope—bringing us ever closer to a world where video creation is as seamless as writing a paragraph. Whether you’re a marketer, educator, filmmaker, or hobbyist, Veo AI and Sora AI each have unique strengths to help you tell your story.

FAQs

What is the difference between Veo AI and Sora AI?

Veo AI, developed by Google DeepMind, specializes in high-resolution (up to 4K) cinematic video generation with deep cinematic controls and enterprise-grade APIs.

Sora AI, created by OpenAI, focuses on ease of use, prompt adherence, and fast video generation through a ChatGPT interface, ideal for creators and educators.

Which AI is better for professional video production?

Veo AI is more suitable for professional production thanks to its 4K output, camera lens controls, physics-aware rendering, and integration with Google Cloud tools. It's built for studios, advertisers, and enterprise users.

Can Sora AI generate longer videos than Veo AI?

Currently, Sora AI supports up to 60 seconds of video, whereas Veo AI's public tools like VideoFX generate around 8–10 seconds. However, internal enterprise use of Veo allows for longer durations via Vertex AI.

Does Veo AI or Sora AI add sound to the videos?

As of now, neither Veo AI nor Sora AI includes native audio tracks, but Veo offers placeholder sync markers for post-production audio editing, and both are exploring audio integration for future updates.

Which platform is easier for beginners to use?

Sora AI is more user-friendly, as it works through ChatGPT Plus and doesn't require coding or cloud setup. You simply describe the scene, and Sora generates the video.

Is there a watermark or copyright tag on generated videos?

Yes.

Veo AI uses SynthID (DeepMind’s watermarking tool).

Sora AI adds C2PA metadata to ensure traceable and ethical AI video content.


All © Copyright reserved by Accessible-Learning Hub
