TensorFlow vs. PyTorch vs. JAX – Which Deep Learning Framework is Best?

Discover the key differences between TensorFlow, PyTorch, and JAX in this in-depth comparison. Learn about their strengths, performance benchmarks, deployment capabilities, and best use cases to determine the ideal deep learning framework for your needs.


Sachin K Chaurasiya

2/14/2025 · 6 min read

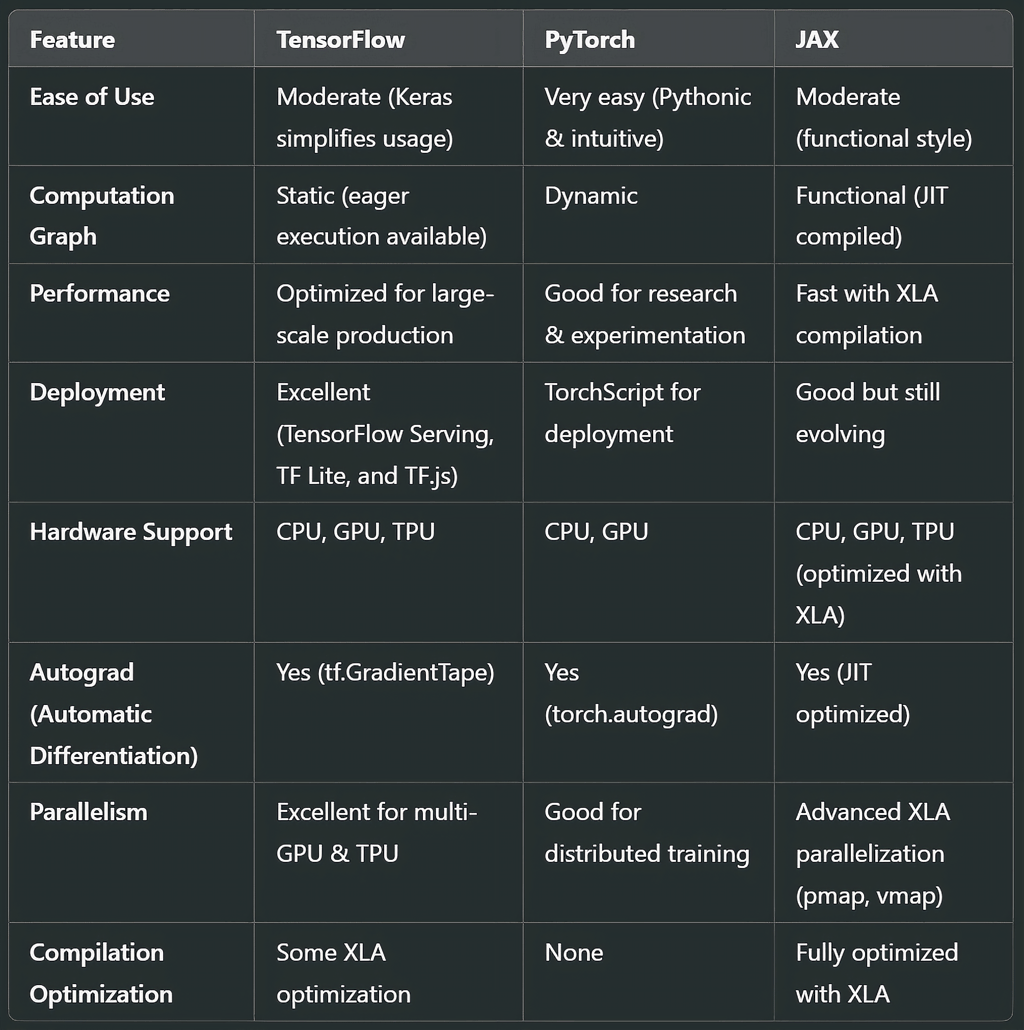

Deep learning has revolutionized artificial intelligence (AI), enabling breakthroughs in natural language processing, computer vision, and reinforcement learning. Among the most popular deep learning frameworks are TensorFlow, PyTorch, and JAX. Each has its strengths, weaknesses, and ideal use cases. In this article, we will explore these frameworks in depth, comparing their features, performance, and best applications.

TensorFlow

Developed by: Google Brain

Released in: 2015

Current Stable Version: 2.x

TensorFlow is one of the most widely used deep learning frameworks, offering a flexible ecosystem for both research and production. It supports both CPU and GPU acceleration and provides high-level APIs like Keras for easier model development. TensorFlow also features TensorFlow Extended (TFX), which is useful for end-to-end machine learning pipelines, and TensorFlow Hub, a repository of pre-trained models for transfer learning. It supports Tensor Processing Units (TPUs), which significantly enhance performance for large-scale AI models.

Strengths

✅ Scalability and Production Readiness—Excellent for deploying large-scale ML models using TensorFlow Serving, TensorFlow Lite, and TensorFlow.js.

✅ Robust Ecosystem—Comes with TensorFlow Extended (TFX) for end-to-end ML pipelines and TensorFlow Hub for pre-trained models.

✅ Multi-platform support—Runs efficiently on CPU, GPU, and TPU with strong support for distributed training.

✅ Integration with Google Cloud—Optimized for Google Cloud AI services, making it suitable for enterprise applications.

Weaknesses

❌ Steep learning curve—More complex than PyTorch, especially when working with static computation graphs.

❌ Elaborate syntax—simple operations require more code than PyTorch.

❌ Debugging difficulty—Debugging TensorFlow models is more challenging than PyTorch due to its execution model.

Technical Information

Programming Model: Originally static computation graphs (graph execution). TensorFlow 2.x runs eagerly by default, and tf.function compiles Python functions into graphs when graph performance is needed.

Automatic Differentiation: Implemented using tf.GradientTape.

Parallelism & Acceleration: Optimized with XLA (Accelerated Linear Algebra) and supports TPU acceleration.

Best Use Cases: Large-scale ML applications, cloud-based AI, and mobile/embedded AI applications.
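To make the TensorFlow technical points above concrete, here is a minimal sketch of automatic differentiation with tf.GradientTape alongside graph tracing with tf.function. It assumes TensorFlow 2.x is installed; the function name square_plus_linear and the input value 3.0 are illustrative choices, not taken from any official example.

```python
import tensorflow as tf

# A scalar variable; GradientTape watches trainable variables automatically.
x = tf.Variable(3.0)

# Eager mode: operations run immediately while the tape records them.
with tf.GradientTape() as tape:
    y = x * x + 2.0 * x  # y = x^2 + 2x

dy_dx = tape.gradient(y, x)  # analytic derivative is 2x + 2


@tf.function  # traces the Python function into a TensorFlow graph
def square_plus_linear(v):
    # Hypothetical helper used only for this illustration.
    return v * v + 2.0 * v


graph_result = square_plus_linear(tf.constant(3.0))

print(dy_dx.numpy())         # derivative evaluated at x = 3
print(graph_result.numpy())  # function value at x = 3
```

The same arithmetic appears twice on purpose: once executed eagerly under the tape, and once wrapped in tf.function, which is the usual route from eager prototyping to graph execution.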

PyTorch

Developed by: Facebook (Meta) AI Research Lab

Released in: 2016

Current Stable Version: 2.x

PyTorch has gained immense popularity among researchers due to its intuitive, Pythonic nature and dynamic computation graph. It is highly favored for quick prototyping, debugging, and deep learning research. PyTorch also provides TorchServe for serving models and TorchScript for serializing models so they can run outside the Python interpreter. PyTorch has native support for CUDA and cuDNN, which accelerate computations on NVIDIA GPUs.

Strengths

✅ User-friendly and Pythonic—Simple and intuitive syntax makes it easy to learn and use.

✅ Dynamic computation graph—Provides flexibility, making it ideal for research and experimentation.

✅ Strong debugging capabilities—Easy debugging with Python's standard debugging tools.

✅ Great for AI research—many cutting-edge research models are first implemented in PyTorch.

Weaknesses

❌ Limited production tools—While TorchServe and TorchScript help, they are not as mature as TensorFlow's deployment ecosystem.

❌ Memory inefficiency—PyTorch can be less memory efficient than TensorFlow in large-scale deployments.

❌ Low enterprise adoption—While improving, it still lags behind TensorFlow in terms of industry adoption.

Technical Information

Programming Model: Dynamic computation graph built as the code runs (eager execution). PyTorch 2.x adds optional graph compilation through torch.compile.

Automatic Differentiation: Implemented using torch.autograd.

Parallelism & Acceleration: Supports multi-GPU training and distributed computing via torch.distributed.

Best Use Cases: Research, academic AI projects, and quick prototyping.
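As a rough illustration of the dynamic graph and torch.autograd described above, the sketch below differentiates a small scalar function. It assumes PyTorch is installed and runs on CPU; the function and input value are arbitrary choices for the example.

```python
import torch

# requires_grad=True asks autograd to record operations on this tensor.
x = torch.tensor(3.0, requires_grad=True)

# The graph is built as this line executes, so ordinary Python control
# flow and standard debuggers can be used on the code directly.
y = x ** 2 + 2 * x  # y = x^2 + 2x

# Reverse-mode differentiation through the recorded operations.
y.backward()

grad_value = x.grad.item()  # analytic derivative is 2x + 2
print(grad_value)
```

Because nothing is compiled ahead of time, a breakpoint or print statement placed between these lines sees real tensor values, which is a large part of why debugging feels like debugging ordinary Python.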

JAX

Developed by: Google Research

Released in: 2018

Current Stable Version: 0.x series (latest updates in 2024)

JAX is a relatively new framework designed to accelerate numerical computing and machine learning research. It combines just-in-time (JIT) compilation with automatic differentiation for fast execution on GPUs and TPUs, making it a powerful tool for AI and scientific computing. Flax, a neural network library built on top of JAX, provides high-level abstractions for deep learning, and the XLA (Accelerated Linear Algebra) compiler backend optimizes the compiled code. JAX's vmap function vectorizes a function over a batch dimension, and pmap runs it in parallel across multiple devices, which suits large-scale ML workloads.

Strengths

✅ Extreme performance optimization—Uses just-in-time (JIT) compilation with XLA for faster execution on GPU/TPU.

✅ Automatic vectorization and parallelism—Provides vmap for vectorized operations and pmap for parallel computation.

✅ Functional programming approach—Encourages writing clean, composable ML code.

✅ Excellent for scientific computing—ideal for physics simulations, differential equations, and reinforcement learning.
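The automatic vectorization mentioned in the list above can be sketched in a few lines. This assumes JAX is installed; the helper name dot and the array shapes are illustrative. pmap is not shown because it only does something useful when several devices are available.

```python
import jax
import jax.numpy as jnp


def dot(a, b):
    """Dot product written for a single pair of 1-D vectors."""
    return jnp.dot(a, b)


# vmap maps `dot` over the leading axis of both arguments, giving a
# batched version without an explicit Python loop.
batched_dot = jax.vmap(dot)

a = jnp.arange(6.0).reshape(3, 2)  # three vectors of length 2
b = jnp.ones((3, 2))

result = batched_dot(a, b)  # one dot product per row, shape (3,)
print(result)
```

The per-example function stays simple, and the batching is added as a transformation afterwards, which is the functional style the list refers to.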

Weaknesses

❌ Steep learning curve—requires familiarity with functional programming paradigms.

❌ Limited deployment support—deployment tools are still evolving compared to TensorFlow and PyTorch.

❌ Not widely used—smaller community and fewer pre-built models compared to TensorFlow and PyTorch.

Technical Information

Programming Model: Functional-style programming with JIT-compiled execution.

Automatic Differentiation: Implemented using jax.grad.

Parallelism & Acceleration: Uses XLA to accelerate computations on TPUs/GPUs with pmap for distributed training.

Best Use Cases: High-performance computing, reinforcement learning, and physics-based ML models.
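A minimal sketch of the JAX programming model summarized above: a pure function, differentiated with jax.grad and then compiled with jax.jit. It assumes JAX is installed; the function f and the input value are arbitrary.

```python
import jax


def f(x):
    """Pure function of its input: f(x) = x^2 + 2x."""
    return x ** 2 + 2.0 * x


# grad returns a new function that computes df/dx.
df = jax.grad(f)

# jit compiles that function with XLA the first time it is called.
fast_df = jax.jit(df)

value = fast_df(3.0)  # analytic derivative is 2x + 2
print(value)
```

Both grad and jit take a function and return a function, so they can be stacked in either order.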

Comparison Based on Use Cases

Research & Experimentation

PyTorch is the preferred choice among researchers because of its easy debugging, intuitive syntax, and dynamic computation graphs. Many AI research papers and models are first implemented in PyTorch before being ported to production frameworks.

Production Deployment

TensorFlow excels in production environments due to its well-integrated deployment tools like TensorFlow Serving, TensorFlow Lite (for mobile and edge devices), and TensorFlow.js (for web-based AI applications). Enterprises and cloud AI solutions favor TensorFlow for scalability.

High-Performance Computing & Large-Scale ML

JAX is gaining traction among high-performance computing (HPC) researchers and AI engineers working on large-scale deep learning. Its ability to optimize workloads using XLA (Accelerated Linear Algebra) allows it to run highly efficient computations on GPUs and TPUs.

Auto-Differentiation & Scientific Computing

JAX treats differentiation as a composable function transformation: jax.grad can be nested for higher-order derivatives and combined with jax.jit and jax.vmap. This makes it a strong choice for gradient-based optimization, physics simulations, and reinforcement learning. JIT compilation can also significantly reduce execution time for complex numerical computations.
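To show what gradient-based optimization looks like in practice, here is a small sketch that fits the slope of a line by gradient descent using a JIT-compiled gradient. It assumes JAX is installed; the data, the learning rate, and the iteration count are made-up values chosen only so the example converges quickly.

```python
import jax
import jax.numpy as jnp


def loss(w, x, y):
    """Mean squared error of the linear model y_hat = w * x."""
    return jnp.mean((w * x - y) ** 2)


# Differentiate with respect to the first argument (w), then compile.
grad_loss = jax.jit(jax.grad(loss))

x = jnp.array([1.0, 2.0, 3.0, 4.0])
y = 2.0 * x  # synthetic data generated with a true slope of 2

w = 0.0
learning_rate = 0.05  # illustrative value
for _ in range(100):
    w = w - learning_rate * grad_loss(w, x, y)

print(w)  # should end up close to the true slope
```

The update loop is plain Python; only the gradient computation is compiled, which is a common pattern in small JAX programs.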

Performance Benchmarks

JAX often outperforms TensorFlow and PyTorch in tasks that require large-scale parallelism due to its integration with XLA. However, for standard deep learning tasks, the differences are marginal. PyTorch is preferred for quick prototyping, whereas TensorFlow is better for large-scale distributed training. The rankings below are rough generalizations rather than measured results; actual numbers depend on the model, hardware, and library versions.

Training Speed: JAX > TensorFlow > PyTorch (on optimized workloads)

Memory Efficiency: JAX > TensorFlow ≈ PyTorch

Ease of Use: PyTorch > TensorFlow ≈ JAX

Inference Speed: JAX > TensorFlow > PyTorch

Which One Should You Choose?

Choose TensorFlow if: You need a robust, scalable framework for production with strong deployment capabilities.

Choose PyTorch if: You are doing research, need flexibility, and prefer an intuitive, Pythonic syntax.

Choose JAX if: You require high-performance computing, parallelism, and large-scale ML workloads with TPUs.

Which framework is best for beginners?

PyTorch is the easiest to learn due to its Pythonic syntax and dynamic computation graph, making it ideal for beginners and researchers.

Can I use TensorFlow, PyTorch, and JAX together?

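Yes, but it requires careful integration. Some libraries, like Hugging Face’s transformers, support models in both TensorFlow and PyTorch. JAX arrays convert to and from NumPy arrays, and because both JAX and TensorFlow compile through XLA, JAX functions can be exported to TensorFlow with the experimental jax2tf converter.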
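The NumPy side of that interoperability can be sketched as a simple round trip. This assumes NumPy and JAX are installed; the variable names are illustrative, and the example deliberately leaves out TensorFlow and PyTorch conversion.

```python
import numpy as np
import jax.numpy as jnp

host_array = np.array([1.0, 2.0, 3.0], dtype=np.float32)

# NumPy -> JAX: jnp.asarray turns the data into a JAX array.
device_array = jnp.asarray(host_array)
doubled = device_array * 2.0  # computed by JAX

# JAX -> NumPy: np.asarray converts the result back to a NumPy array.
back_on_host = np.asarray(doubled)

print(type(back_on_host).__name__, back_on_host)
```

Passing data through NumPy like this is the simplest bridge between frameworks, at the cost of moving arrays back to host memory.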

Is JAX better than TensorFlow and PyTorch?

JAX can deliver superior performance through XLA compilation and its vmap and pmap transformations, but it has a steep learning curve and lacks a deployment toolchain as extensive as TensorFlow's. It is best suited for scientific computing and high-performance ML workloads.

Which framework is best for production deployment?

TensorFlow is the best choice for production due to its deployment ecosystem, including TensorFlow Serving, TensorFlow Lite (for mobile), and TensorFlow.js (for web).

Which framework is best for deep learning research?

PyTorch dominates in AI research due to its intuitive, flexible nature and strong academic community support.

How does JAX differ from TensorFlow and PyTorch?

JAX follows a functional programming paradigm and focuses on high-performance computations with JIT compilation and automatic differentiation, making it ideal for scientific computing and large-scale parallelism.

Each of these frameworks—TensorFlow, PyTorch, and JAX—has its own strengths, making them suitable for different use cases. While TensorFlow dominates production AI, PyTorch remains a top choice for research, and JAX is paving the way for high-performance AI computing. Depending on your project goals, hardware, and deployment needs, selecting the right framework can significantly impact your model’s efficiency and scalability.

If you're working on AI research and prototyping, PyTorch is an excellent choice. If you need large-scale, production-ready AI, go for TensorFlow. But if you want cutting-edge speed and parallelism for AI workloads, JAX might be your best bet. Ultimately, the best framework is the one that fits your project’s needs and computing resources.


All © Copyright reserved by Accessible-Learning Hub
