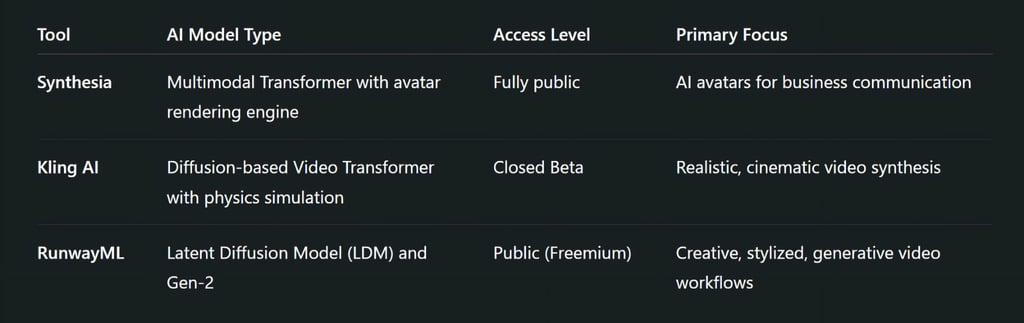

Synthesia vs Kling AI vs RunwayML: Advanced Comparison of AI Video Generation Titans

Compare Synthesia, Kling AI, and RunwayML in this in-depth 2025 guide. Explore their AI models, video generation quality, technical architectures, and ideal use cases. Discover which tool suits your business, creative, or cinematic needs best.


Sachin K Chaurasiya

5/11/2025 · 4 min read

Artificial intelligence is reshaping how video content is ideated, generated, and distributed. The advent of text-to-video and AI-assisted video editing tools like Synthesia, Kling AI, and RunwayML has radically transformed the creative pipeline. Whether you're a marketer, filmmaker, educator, or AI researcher, understanding the technical backbone of these platforms can guide you toward the right tool.

In this article, we’ll go beyond surface-level features and explore the technical architectures, generation models, and real-world use cases that differentiate these three major platforms.

Synthesia: Pioneering AI Avatars

Synthesia is a text-to-video platform that allows users to create professional videos using lifelike AI avatars. It's widely used for corporate training, product demos, onboarding, and internal communications.

Key Features

AI Avatars: Choose from 150+ AI presenters or create a custom avatar.

Multilingual Support: Over 120 languages supported.

No Camera Needed: Simply input your script, select an avatar, and generate the video.

Templates & Editing Tools: Offers pre-designed templates for various industries.

Technical Architecture

Synthesia combines Natural Language Processing (NLP) with Computer Vision (CV) to generate talking-head avatars. The workflow involves:

Multilingual TTS (Text-to-Speech) engines with neural voice synthesis (like Tacotron 2 or WaveNet).

Avatar rendering pipeline: Pre-recorded actor data + lip-sync alignment models.

Audio-to-visual sync using deep learning to match phonemes to facial landmarks.

Face rendering built on 3D morphable models and mesh deformation techniques.
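The phoneme-to-facial-landmark step above can be illustrated with a minimal sketch that converts timed phonemes (the kind of alignment a TTS engine can emit) into one mouth shape, or viseme, per video frame. The `PHONEME_TO_VISEME` table, the viseme names, and the 25 fps default are hypothetical; Synthesia's production models are learned and not public, so treat this only as an illustration of the idea.

```python
# Hypothetical phoneme -> viseme (mouth shape) table for illustration only;
# production lip-sync systems learn this mapping rather than hard-coding it.
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "lip_teeth", "V": "lip_teeth",
    "AA": "open", "AE": "open",
    "OW": "round", "UW": "round",
}


def visemes_per_frame(phonemes, fps=25):
    """Map timed phonemes [(symbol, start_s, end_s), ...] to one viseme per frame."""
    if not phonemes:
        return []
    total_frames = round(phonemes[-1][2] * fps)
    frames = []
    for i in range(total_frames):
        t = (i + 0.5) / fps  # sample the centre of each frame
        viseme = "rest"  # used when no phoneme covers this instant
        for symbol, start, end in phonemes:
            if start <= t < end:
                viseme = PHONEME_TO_VISEME.get(symbol, "neutral")
                break
        frames.append(viseme)
    return frames


# "MAMA": lips close on each M and open on each AA.
timed = [("M", 0.0, 0.08), ("AA", 0.08, 0.2), ("M", 0.2, 0.28), ("AA", 0.28, 0.4)]
print(visemes_per_frame(timed))
```

A learned model would output facial-landmark or mesh parameters instead of discrete labels, but the timing problem (aligning audio units to video frames) is the same.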

Use Case Strengths

Corporate onboarding

MOOCs and training

Localized video generation at scale

Performance Metrics

Rendering time: ~1-2 minutes per minute of video

Supports 120+ languages

Voice cloning (premium feature)

Video output: 1080p (no 4K yet)
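For localized video at scale, the rendering figure above turns into a quick back-of-the-envelope estimate. This sketch assumes each language version is rendered separately and sequentially at 1-2 minutes of rendering per minute of output; actual queueing and parallelism on Synthesia's side are not described here.

```python
def render_time_range(video_minutes, languages, min_ratio=1.0, max_ratio=2.0):
    """Estimate total rendering time in minutes for one video localized into N languages.

    Assumes every language version is rendered separately and sequentially,
    at min_ratio..max_ratio minutes of rendering per minute of output video.
    """
    total_output = video_minutes * languages
    return total_output * min_ratio, total_output * max_ratio


low, high = render_time_range(video_minutes=5, languages=10)
print(f"{low:.0f}-{high:.0f} minutes")  # 50-100 minutes
```

If the platform renders language versions in parallel, wall-clock time will be shorter, so read this as a sequential-processing estimate.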

Pros

Fast and intuitive interface

Great for global teams needing multilingual content

High-quality lip-syncing and gestures

Cons

Limited creative freedom beyond avatar scripts

May feel too “corporate” for artistic or cinematic use

Best For

Corporate users, HR departments, marketers, and educators.

Kling AI: The New Player With Stunning Realism

Kling AI, developed by Kuaishou (a Chinese tech giant), takes text prompts and turns them into ultra-realistic videos. It's often compared to OpenAI’s Sora for its cinematic quality and physics-based animation.

Key Features

Photorealistic Output: Models physics and motion to produce natural-looking results.

Prompt-to-Video: Write a scene description and watch it unfold in video.

Frame-by-Frame Consistency: Maintains spatial and temporal coherence.
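The article does not say how Kling measures temporal coherence. As a rough illustration of what the term means, one crude proxy is the average pixel change between consecutive frames: flicker scores high, a steady shot scores near zero. This metric is an assumption chosen for illustration, not Kling's evaluation method.

```python
def mean_abs_frame_diff(frames):
    """Average absolute pixel change between consecutive frames.

    `frames` is a list of equally sized 2-D grayscale frames (lists of lists of
    floats in [0, 1]). Lower values mean smoother video. This is a crude proxy:
    it also penalizes legitimate motion, not just flicker.
    """
    if len(frames) < 2:
        return 0.0
    total, count = 0.0, 0
    for prev, curr in zip(frames, frames[1:]):
        for row_prev, row_curr in zip(prev, curr):
            for a, b in zip(row_prev, row_curr):
                total += abs(a - b)
                count += 1
    return total / count


steady = [[[0.5, 0.5], [0.5, 0.5]]] * 3
flicker = [[[0.0, 0.0], [0.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]
print(mean_abs_frame_diff(steady))   # 0.0
print(mean_abs_frame_diff(flicker))  # 1.0
```

Research evaluations typically use motion-compensated or learned metrics instead, precisely because a raw pixel difference cannot tell a moving camera from an unstable render.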

Technical Architecture

Kling AI leverages large-scale latent diffusion and spatiotemporal video transformers to achieve its realism:

The text-to-video pipeline uses diffusion models in latent space (like Imagen Video/Sora-style design).

Temporal coherence is maintained via 3D-aware spatial modeling and attention-based frame prediction.

Physically plausible simulation of object trajectories, shadows, fluid motion, and camera depth, most likely learned from training data rather than computed by a separate physics engine.

Likely uses multi-frame denoising with a conditional Diffusion Transformer (DiT) backbone, as in PixArt-α, rather than the conditional UNets of earlier video models.
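To see why spatiotemporal transformers for video are expensive, it helps to count tokens. The sketch below assumes a hypothetical latent encoder (8× spatial and 4× temporal downsampling, 2×2 patches; these factors are illustrative, not Kling's published settings) and compares full joint attention over all tokens with a factorized scheme that attends within each frame and then across frames.

```python
def latent_tokens(width, height, frames, spatial_down=8, temporal_down=4, patch=2):
    """Return (tokens_per_latent_frame, latent_frames) after a hypothetical
    latent-compression and patchification step."""
    per_frame = (width // spatial_down // patch) * (height // spatial_down // patch)
    latent_frames = frames // temporal_down
    return per_frame, latent_frames


def attention_pairs(per_frame, latent_frames):
    """Count query-key pairs for joint vs. factorized spatiotemporal attention."""
    total = per_frame * latent_frames
    joint = total ** 2                          # every token attends to every token in the clip
    spatial = latent_frames * per_frame ** 2    # attention within each latent frame
    temporal = per_frame * latent_frames ** 2   # attention across frames at each location
    return joint, spatial + temporal


# A 4-second 720p clip at 24 fps (96 frames).
s, t = latent_tokens(1280, 720, frames=4 * 24)
joint, factorized = attention_pairs(s, t)
print(s, t, s * t)                     # 3600 24 86400
print(f"{joint:,} vs {factorized:,}")  # 7,464,960,000 vs 313,113,600
```

Here joint attention needs roughly 24× more pairwise work, and its cost grows quadratically with the number of latent frames, which is why long generations such as Kling's reported 2-minute scenes are computationally demanding.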

Real-World Capabilities

Generate up to 2-minute scenes (longer than most competitors).

Supports motion parallax, reflections, and lighting dynamics.

Early demos show handling of complex motion paths and multi-subject scenes.

Current Limitations

Currently in invite-only beta.

UI/UX is still under active development.

Limited API access (as of mid-2025).

Pros

Exceptional realism and physics

High creative potential for storytelling, advertising, and film

Capable of complex scenes and camera angles

Cons

Not yet broadly accessible; still in closed beta

Lacks an established ecosystem like Runway's or Synthesia's

Best For

Filmmakers, animators, and visionaries looking for realism and dynamic storytelling.

RunwayML: The Creator’s Playground

RunwayML is a multi-modal creative suite that enables video generation, editing, and enhancement through AI. It's known for tools like Gen-2, which enables text-to-video and image-to-video transformations.

Key Features

Text-to-Video (Gen-2): Describe a scene and generate a short video clip.

Inpainting & Outpainting: Edit and expand existing footage seamlessly.

Video Style Transfer & Motion Brush: Bring still images to life with stylized motion.

Technical Architecture

RunwayML’s Gen-2 builds on Gen-1’s architecture, now featuring:

Text-to-video, image-to-video, and video-to-video capabilities.

Based on Latent Diffusion Models (LDMs) similar to Stable Diffusion but adapted for temporal output.

Incorporates temporal attention layers to keep content visually consistent across frames.

Offers tools like Motion Brush, Frame Interpolation, Inpainting, and Depth-Aware Editing.
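At the core of a latent diffusion model is a simple forward-noising identity that the network is trained to invert. The sketch below uses the linear noise schedule from the original DDPM formulation and a toy "video latent" of a few frames flattened into a list. The true noise is passed back in as an oracle stand-in for the trained, text-conditioned network; none of this reflects Runway's actual code or hyperparameters.

```python
import math
import random


def alpha_bar(t, num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_i) for i = 0..t under a linear beta schedule."""
    ab = 1.0
    for i in range(t + 1):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        ab *= 1.0 - beta
    return ab


def add_noise(x0, eps, t):
    """Forward process, element-wise: x_t = sqrt(ab) * x0 + sqrt(1 - ab) * eps."""
    ab = alpha_bar(t)
    return [math.sqrt(ab) * a + math.sqrt(1 - ab) * e for a, e in zip(x0, eps)]


def predict_x0(xt, eps_hat, t):
    """Invert the forward process given a noise estimate eps_hat."""
    ab = alpha_bar(t)
    return [(x - math.sqrt(1 - ab) * e) / math.sqrt(ab) for x, e in zip(xt, eps_hat)]


random.seed(0)
latent = [random.gauss(0, 1) for _ in range(3 * 4)]  # 3 frames x 4 latent values each
noise = [random.gauss(0, 1) for _ in latent]
xt = add_noise(latent, noise, t=500)
recovered = predict_x0(xt, noise, t=500)  # oracle: the true noise is "predicted" perfectly
print(max(abs(a - b) for a, b in zip(latent, recovered)) < 1e-9)  # True
```

In a real video model the noise predictor is a neural network conditioned on the prompt and on neighbouring frames, and sampling repeats a denoising step many times; the temporal adaptation mentioned above lives inside that network, not in this arithmetic.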

Advanced Features

Style transfer via custom model checkpoints.

Video Editing AI Tools:

Background removal

Rotoscoping with precision masking

Scene expansion (AI outpainting)

Creative Prompting: Accepts multi-modal inputs for control (text + image + audio triggers).
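Background removal and rotoscoping ultimately produce a per-pixel matte (alpha mask) that is used to composite the subject over new content. Runway generates the matte with its own models; the sketch below assumes the matte already exists and shows only the standard alpha-compositing step on a single grayscale frame.

```python
def composite(foreground, background, alpha):
    """Alpha-composite one frame: out = alpha * fg + (1 - alpha) * bg, per pixel.

    All inputs are equally sized 2-D lists. alpha is the matte in [0, 1]
    (1 keeps the subject, 0 shows the new background). For RGB video,
    apply the same formula to each channel of every frame.
    """
    return [
        [a * f + (1.0 - a) * b for f, b, a in zip(f_row, b_row, a_row)]
        for f_row, b_row, a_row in zip(foreground, background, alpha)
    ]


fg = [[0.9, 0.9], [0.9, 0.9]]     # subject pixels
bg = [[0.1, 0.1], [0.1, 0.1]]     # replacement background
matte = [[1.0, 0.5], [0.0, 0.0]]  # soft edge in the top-right pixel
print(composite(fg, bg, matte))
```

Fractional alpha values at the subject's edges (hair, motion blur) are what "precision masking" has to get right; a hard 0/1 mask produces visible cut-out edges.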

Benchmark Data

Output resolution: up to 4K (with upscale)

Frame rate: 24–30 fps (dynamic)

Temporal length: ~4s native; expandable with interpolation
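A ~4-second native clip can be stretched by synthesizing in-between frames. The simplest version blends neighbouring frames linearly; this is an illustrative stand-in, since production frame interpolation (Runway's included) is model-based and estimates motion rather than cross-fading pixels.

```python
def interpolate_frames(frames, factor=2):
    """Insert (factor - 1) linearly blended frames between each consecutive pair.

    Frames are 2-D grayscale lists. n input frames become (n - 1) * factor + 1.
    Naive cross-fade only; learned interpolators estimate motion instead.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    out = []
    for prev, nxt in zip(frames, frames[1:]):
        for k in range(factor):
            w = k / factor  # 0 keeps the original frame; larger w blends toward the next one
            out.append([[(1 - w) * p + w * n for p, n in zip(rp, rn)]
                        for rp, rn in zip(prev, nxt)])
    out.extend(frames[-1:])  # keep the final original frame
    return out


clip = [[[i / 95]] for i in range(96)]  # 96 one-pixel frames = 4 s at 24 fps
longer = interpolate_frames(clip, factor=2)
print(len(longer), round(len(longer) / 24, 2))  # 191 7.96
```

Played back at the original 24 fps, the doubled frame count roughly doubles duration (a slow-motion effect); played at 48 fps, duration stays the same but motion looks smoother.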

Pros

Rich feature set beyond just video generation

Browser-based, no software needed

Vibrant community and frequent updates

Cons

Advanced features come with a learning curve

Limited control in longer video narratives

Best For

Content creators, designers, indie filmmakers, and marketing teams.

Use Case Breakdown

Business Training & Communication

Winner: Synthesia

Why? Synthesia’s avatars and language support make it ideal for internal videos and training across regions.

Creative Storytelling & Cinematic Scenes

Winner: Kling AI

Why? If you want lifelike movements, physics, and film-quality visuals, Kling AI is a game-changer.

Artistic & Marketing Campaigns

Winner: RunwayML

Why? RunwayML offers flexibility for experimentation and fast prototyping with visual styles.

Developer & AI Research Insight

Synthesia is a productized solution, meaning it's optimized for mass use but offers little flexibility in pipeline tweaking.

Kling AI represents the next generation of AI video modeling, comparable to OpenAI's Sora or Google's Lumiere.

RunwayML sits at the intersection of creative AI and video post-production, making it a playground for experimentation.

If you're a developer or researcher looking to contribute or experiment:

Synthesia: Closed source; offers a REST API for generating videos programmatically, but no access to its models or pipeline.

Kling AI: R&D-focused, likely based on large private datasets.

RunwayML: Offers a public API, community model training, and documentation.
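Video-generation APIs commonly work asynchronously: you submit a job, receive a task ID, and poll until the video is ready. The helper below sketches that pattern generically. `fetch_status`, the task fields, and the status strings are assumptions to check against the provider's API reference; they are not Runway's documented interface.

```python
import time


def wait_for_task(fetch_status, task_id, poll_seconds=5.0, timeout_seconds=600.0,
                  sleep=time.sleep):
    """Poll a video-generation task until it finishes, fails, or times out.

    `fetch_status(task_id)` is a hypothetical callable wrapping the provider's
    HTTP API; it is assumed to return a dict such as {"status": "RUNNING"} or
    {"status": "SUCCEEDED", "output": [...]}. Status names are assumptions.
    """
    waited = 0.0
    while waited <= timeout_seconds:
        task = fetch_status(task_id)
        status = task.get("status")
        if status == "SUCCEEDED":
            return task.get("output")
        if status in ("FAILED", "CANCELLED"):
            raise RuntimeError(f"task {task_id} ended with status {status}")
        sleep(poll_seconds)  # wait before asking again
        waited += poll_seconds
    raise TimeoutError(f"task {task_id} did not finish within {timeout_seconds} s")


# Simulated responses stand in for real HTTP calls.
responses = iter([{"status": "PENDING"}, {"status": "RUNNING"},
                  {"status": "SUCCEEDED", "output": ["video.mp4"]}])
print(wait_for_task(lambda _id: next(responses), "demo-task", sleep=lambda s: None))  # ['video.mp4']
```

Injecting `fetch_status` and `sleep` keeps the polling logic testable without network access; a real integration would also respect any rate-limit or retry guidance in the provider's documentation.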

While all three platforms revolutionize video creation, they serve very different purposes. Synthesia excels in avatar-led communication, Kling AI pushes the boundaries of realism with next-gen generative models, and RunwayML empowers artists with freedom and flexibility.

As the technology matures, expect convergence: hyper-realistic avatars, deep creative control, and long-form storytelling will merge into a single AI-driven solution.


© Accessible-Learning Hub. All rights reserved.

