Runway ML vs Sora: Which Video AI Wins for Creators?

Discover which AI video generation tool leads in realism, creativity, and usability. Explore deep technical comparisons, creative features, pricing, and future-readiness for creators, filmmakers, and content professionals.


Sachin K Chaurasiya

7/22/2025 · 6 min read

In 2025, artificial intelligence is not just shaping text and image generation—it’s transforming how we make videos. Two names stand out in this evolving space: Runway ML and Sora by OpenAI. Whether you're a filmmaker, marketer, YouTuber, or game designer, choosing the right AI video tool could dramatically affect your creative output, workflow, and costs.

In this in-depth guide, we’ll explore the core differences between Runway ML and Sora, analyzing everything from video quality to interface design, use cases, and pricing. So, let’s dive into the question: Which AI video tool truly wins for creators?

What Are Runway ML and Sora?

Runway ML

Runway ML is a pioneering creative AI platform that offers real-time video generation and editing tools. Known for Gen-1 and Gen-2 models, Runway allows users to transform videos using text, images, or reference clips. It’s popular in film, marketing, and content production.

Launched by: Runway Research

Core Tech: Gen-1 and Gen-2 AI models

Use Case Focus: Video-to-video transformation, stylization, real-time generation

Sora (by OpenAI)

Sora is OpenAI’s newest leap into the multimodal AI world—a text-to-video generator capable of producing realistic, minute-long video clips from just a prompt. With cinematic motion, physics awareness, and deep scene comprehension, Sora is being hailed as a revolutionary tool for storytelling.

Launched by: OpenAI (2024, ongoing development in 2025)

Core Tech: Multimodal diffusion models with physics and world modeling

Use Case Focus: High-fidelity video generation from scratch using text prompts

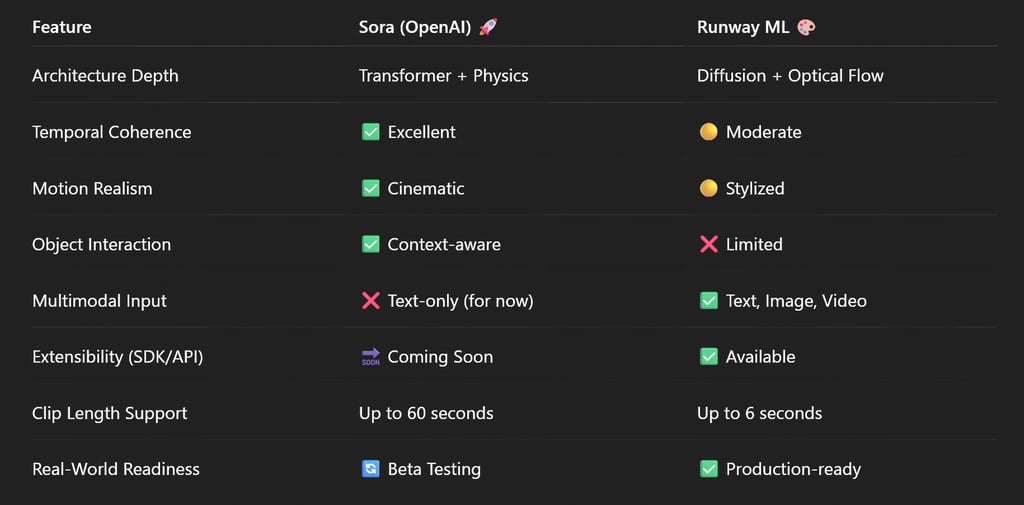

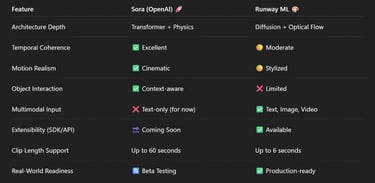

Video Quality & Realism

Sora Wins in Photorealism

Sora generates stunningly realistic videos with accurate lighting, depth, and physical interactions. From animated cityscapes to wildlife shots, it produces scenes that blur the line between real and AI.

Runway ML Excels in Stylized Outputs

While Runway's videos aren’t as photorealistic, they are highly stylized and customizable—perfect for creators seeking artistic control over motion, transitions, and effects. It also supports style transfer, which Sora currently lacks.

Verdict

For cinematic realism → Sora

For artistic editing and stylization → Runway ML

Prompt-to-Output Flexibility

Sora: Natural Language Powerhouse

Sora understands complex prompts like “A dragon flying over a snowy village at dusk” and renders high-quality video accordingly. Its deep scene understanding enables smooth, coherent frames even across long shots.

Runway: Hybrid Control

Runway offers multi-modal inputs—you can start with a video, image, or sketch and then manipulate it. This flexibility is especially useful for content editors and professionals.

Verdict

For text-only storytelling → Sora

For multimodal input flexibility → Runway ML

User Experience & Interface

Runway ML: User-Friendly, Creator-Focused

Runway ML offers a no-code, drag-and-drop interface with real-time previews, layers, and timeline editing. It integrates smoothly with tools like Adobe Premiere and Final Cut.

Sora: Research-Only (for now)

As of mid-2025, Sora is not yet publicly available for mass creators. It's in limited research access, which means UI/UX and usability remain behind closed doors. Full access is expected later this year.

Verdict

For ease of use today → Runway ML

For cutting-edge access (once available) → Sora

Collaboration & Integration

Runway ML: Built for Teams

Runway ML includes project sharing, cloud exports, and team-based editing features. It’s designed to fit into creative production pipelines.

Sora: Not Yet Integrated

Sora is powerful but not yet designed for collaborative or integrated workflows. When OpenAI opens its API and toolchain, this may change.

Verdict

For collaborative workflows → Runway ML

Pricing & Accessibility

Runway ML: Freemium Model

Free tier with limited resolution

Paid plans from ~$12/month

Export limitations apply for high-quality renders

Sora: Not Commercially Available Yet

As of now, Sora is in closed beta. OpenAI plans a developer and enterprise rollout, likely with tiered pricing models and API access, in late 2025.

Verdict

For affordable access today → Runway ML

For high-end use in the future → Sora (once launched)

Sora (OpenAI)

Model Architecture & Training Approach

Architecture

Sora is based on a diffusion transformer architecture optimized for video generation. It incorporates spatiotemporal attention to maintain consistency across both space and time.

Sequence-Aware Modeling

Sora treats video as a sequence of frames where each frame is interlinked with latent representations. It handles long-term temporal dependencies, enabling coherent outputs of up to 60 seconds, a massive leap from older models.

Training Data

Sora is trained on a mix of high-quality text-video datasets, likely sourced from licensed stock content, instructional videos, cinematic footage, and synthetic 3D-rendered datasets. This diverse dataset gives Sora its deep semantic comprehension of motion, depth, and object relationships.
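The spatiotemporal attention described under Architecture can be illustrated with a toy example. This is a minimal sketch, not Sora's implementation, which OpenAI has not released. It assumes a single attention head with no learned projections (queries, keys, and values are the raw token vectors), and the `spacetime_attention` helper and the feature vectors are hypothetical. The point is only that each token attends to tokens from other positions and other frames in a single pass.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def spacetime_attention(tokens):
    """Single-head self-attention over tokens drawn from all frames at once.

    tokens maps (frame, patch) -> feature vector. Assumption: queries, keys,
    and values are the raw vectors (no learned projections).
    """
    keys = list(tokens)
    dim = len(tokens[keys[0]])
    output, weights = {}, {}
    for q in keys:
        # Scaled dot-product score of this token against every token,
        # regardless of which frame or position it comes from.
        scores = [
            sum(a * b for a, b in zip(tokens[q], tokens[k])) / math.sqrt(dim)
            for k in keys
        ]
        w = softmax(scores)
        weights[q] = dict(zip(keys, w))
        output[q] = [
            sum(wi * tokens[k][j] for wi, k in zip(w, keys)) for j in range(dim)
        ]
    return output, weights

# Two frames with two patches each; the feature vectors are made up.
tokens = {
    (0, 0): [1.0, 0.0],
    (0, 1): [0.0, 1.0],
    (1, 0): [1.0, 0.0],  # same content as (0, 0), one frame later
    (1, 1): [0.0, 1.0],
}
output, weights = spacetime_attention(tokens)
```

In this toy input, the patch at frame 0 puts as much weight on its counterpart in frame 1 as on itself, and less on the unrelated patches. That cross-frame link is what helps keep content consistent over time.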

Temporal Coherence & Frame Consistency

Uses 3D space-aware video tokenization, making it highly consistent across scenes involving object tracking, environmental interactions, and multiple actors.

Maintains physics-informed consistency, such as shadows shifting with light or realistic hair and fabric motion.

Can simulate perspective shifts, parallax, and continuous camera movement effectively.
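The tokenization idea in the list above can be made concrete with a small sketch. OpenAI's technical report describes representing video as spacetime patches. The patch sizes and the `spacetime_patches` helper below are illustrative assumptions, and this toy version works on raw pixel values rather than a learned latent space.

```python
def spacetime_patches(frames, pt, ph, pw):
    """Split a video into non-overlapping spacetime patches.

    frames[t][y][x] holds one value per pixel. Each patch spans pt frames,
    ph rows, and pw columns, and is flattened into a single token.
    """
    n_frames, height, width = len(frames), len(frames[0]), len(frames[0][0])
    if n_frames % pt or height % ph or width % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    tokens = []
    for t0 in range(0, n_frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                tokens.append([
                    frames[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return tokens

# A 4-frame, 4x4 toy video whose pixel values encode their own coordinates.
video = [[[t * 100 + y * 10 + x for x in range(4)] for y in range(4)]
         for t in range(4)]
tokens = spacetime_patches(video, pt=2, ph=2, pw=2)
print(len(tokens), len(tokens[0]))  # 8 tokens, each covering 8 pixels
```

Because each token covers more than one frame, a single token already carries a small amount of motion information. That is one plausible reason this representation suits object tracking and camera movement.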

Motion Quality & Scene Dynamics

Motion in Sora is deeply contextual. For example, if a character walks through a field, the grass will bend appropriately, and shadows will trail naturally. It uses learned world physics models, giving it an edge for generating interactive, immersive environments.

Scene Comprehension & Object Permanence

Displays strong object permanence—people, props, and environments persist across frames.

Can simulate object interactions, like a person picking up a cup or a dog chasing a ball.

Latency, Speed & Hardware Dependency

Currently limited to OpenAI’s internal infrastructure, likely running on custom NVIDIA H100 GPU clusters or Azure supercomputers.

Despite its high fidelity, Sora is reportedly faster than earlier video models like Make-A-Video and Imagen Video due to its efficient sampling schedule and optimized transformer layers.

Limitations (Technical & Ethical)

Not publicly accessible: Still in closed testing; outputs aren't yet available for creators to directly use.

Ethical concerns: OpenAI is cautious about misuse in misinformation or deepfakes.

Control issues: Over-detailed prompts can sometimes confuse scene composition.

APIs, SDKs, & Extensibility

Future API expected to support prompt engineering, conditional generation, and integration into apps via Python SDK or OpenAI Developer Platform.

Ideal for enterprise integration, gaming, and creative development once launched.

Runway ML (Gen-2 and Gen-1)

Model Architecture & Training Approach

Architecture

Runway’s Gen-2 uses a multi-stage diffusion model combining text-to-image generation with motion modules for temporal coherence. It applies latent video diffusion over frames with optical flow techniques to guide motion interpolation.

Modular Layers

Gen-2 separates frame generation, motion estimation, and stylization, allowing more layered control over the transformation pipeline.

Training Data

Runway uses open-domain and in-house curated datasets, including video frames, masks, segmentation maps, and image-style pairs. It excels at transferring style and structure from one input to another.
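The Architecture notes above mention optical flow guiding motion interpolation. As a rough illustration of that general idea, and not of Runway's actual pipeline, the sketch below moves each pixel part of the way along its flow vector to produce an in-between frame. The `warp_by_flow` helper, the nearest-pixel rounding, and the keep-the-brighter-pixel rule for collisions are all simplifying assumptions.

```python
def warp_by_flow(frame, flow, alpha):
    """Forward-warp `frame` part of the way along `flow`.

    frame[y][x] is a brightness value. flow[y][x] is (dx, dy), the pixel's
    displacement from this keyframe to the next one. alpha in [0, 1] selects
    how far along that displacement the in-between frame sits.
    """
    height, width = len(frame), len(frame[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = flow[y][x]
            nx = x + round(alpha * dx)
            ny = y + round(alpha * dy)
            if 0 <= nx < width and 0 <= ny < height:
                # Assumption: when two pixels land on the same spot,
                # keep the brighter one.
                out[ny][nx] = max(out[ny][nx], frame[y][x])
    return out

# One bright pixel that travels 4 columns to the right between keyframes.
frame = [[1.0, 0.0, 0.0, 0.0, 0.0]]
flow = [[(4, 0), (0, 0), (0, 0), (0, 0), (0, 0)]]
midpoint = warp_by_flow(frame, flow, alpha=0.5)
print(midpoint)  # the bright pixel sits halfway, at column 2
```

Production systems typically use sub-pixel sampling and handle occlusions explicitly. This version only shows why a flow field lets an interpolated frame place moving content between its start and end positions instead of cross-fading the two keyframes.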

Temporal Coherence & Frame Consistency

Offers moderate temporal consistency; videos up to 4–6 seconds often show slight flickering or noise in fast motion.

Uses optical flow and frame anchoring but lacks true 3D scene understanding.

Best suited for short-form, stylized animations or effects-heavy storytelling.
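The flickering mentioned in the list above can be estimated with a simple number. The sketch below computes the mean absolute per-pixel change between consecutive frames. It is a crude proxy, not a metric Runway publishes: genuine motion also raises the score, so it is most useful for comparing renders of the same shot. The `flicker_score` name and the 0-to-1 brightness scale are assumptions.

```python
def flicker_score(frames):
    """Mean absolute per-pixel change between consecutive frames.

    frames[t][y][x] is a brightness value in [0, 1]. A perfectly static
    clip scores 0.0; a clip that flips every pixel each frame scores 1.0.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        for row_prev, row_cur in zip(prev, cur):
            for p, c in zip(row_prev, row_cur):
                total += abs(c - p)
                count += 1
    return total / count

static_clip = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(4)]
strobing_clip = [[[float(t % 2)] * 2 for _ in range(2)] for t in range(4)]
print(flicker_score(static_clip))    # 0.0
print(flicker_score(strobing_clip))  # 1.0
```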

Motion Quality & Scene Dynamics

Runway creates impressionistic motion, focusing more on art direction and motion prompts rather than realism. It supports:

Stylized effects like motion blur

Experimental transitions

Low-frame-rate cinematic looks (intentional or as a byproduct of generation speed)

Scene Comprehension & Object Permanence

Works best with simple object tracking. Sometimes struggles with maintaining consistency in object appearance across frames.

Object interactions are not always contextually accurate unless explicitly stylized or controlled through prompt chaining.

Latency, Speed & Hardware Dependency

Runway uses cloud-based rendering with GPU acceleration. Output typically takes 30 seconds to 3 minutes, depending on resolution and clip length.

Responsive on web browsers, but slower for longer scenes or high-definition exports (especially 4K).

Limitations (Technical & Ethical)

Lacks photorealism: Not ideal for scenes needing ultra-realistic lighting or motion.

Limited clip length: Most outputs are between 4 and 6 seconds unless extended with external video editors.

Prompt sensitivity: Can sometimes produce vague or inconsistent results without iterative prompting.
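The clip-length limit above has a practical consequence for longer pieces. The helper below is a back-of-the-envelope sketch that counts how many generated clips must be stitched together to reach a target duration. The `clips_needed` name is hypothetical, and the calculation ignores transitions, overlaps, and discarded takes.

```python
import math

def clips_needed(target_seconds, clip_seconds):
    """Number of fixed-length clips required to cover a target duration."""
    if clip_seconds <= 0:
        raise ValueError("clip_seconds must be positive")
    return math.ceil(target_seconds / clip_seconds)

# A 60-second piece built from 4-second or 6-second generations.
print(clips_needed(60, 4))  # 15
print(clips_needed(60, 6))  # 10
```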

APIs, SDKs, & Extensibility

Already supports API access, enabling embedding in apps or creative pipelines.

Offers plugin support for Adobe Creative Suite, Figma, Unity, and more.

Can be deployed in multi-user cloud environments for production teams.

Choose Runway ML if you want a mature, easy-to-use, collaborative video tool with creative flexibility and access today.

Choose Sora if you’re looking toward the future of high-end, realistic, AI-generated video and can wait for broader access.

The Bottom Line

Runway ML is the tool for today’s creators.

Sora is the vision for tomorrow’s storytelling revolution.

FAQs

Q. Is Sora available for public use yet?

As of July 2025, Sora is still in limited beta testing. OpenAI is expected to release a public API later this year.

Q. Can I use Runway ML without any video editing skills?

Yes, Runway ML is built for non-technical users with drag-and-drop controls, templates, and real-time previews.

Q. Which platform is better for filmmaking?

Sora, once available, will offer more cinematic and realistic outputs. Runway ML is excellent for experimental and stylized storytelling today.

Q. Are there mobile apps for these tools?

Runway ML supports web and limited mobile functions. Sora has no standalone app as of now.

Q. Can I export videos without watermarks in Runway?

Yes, but only with a paid subscription plan.


All © Copyright reserved by Accessible-Learning Hub
