Runway ML vs Pika Labs: AI Video Editors Face Off in the Creative Arena

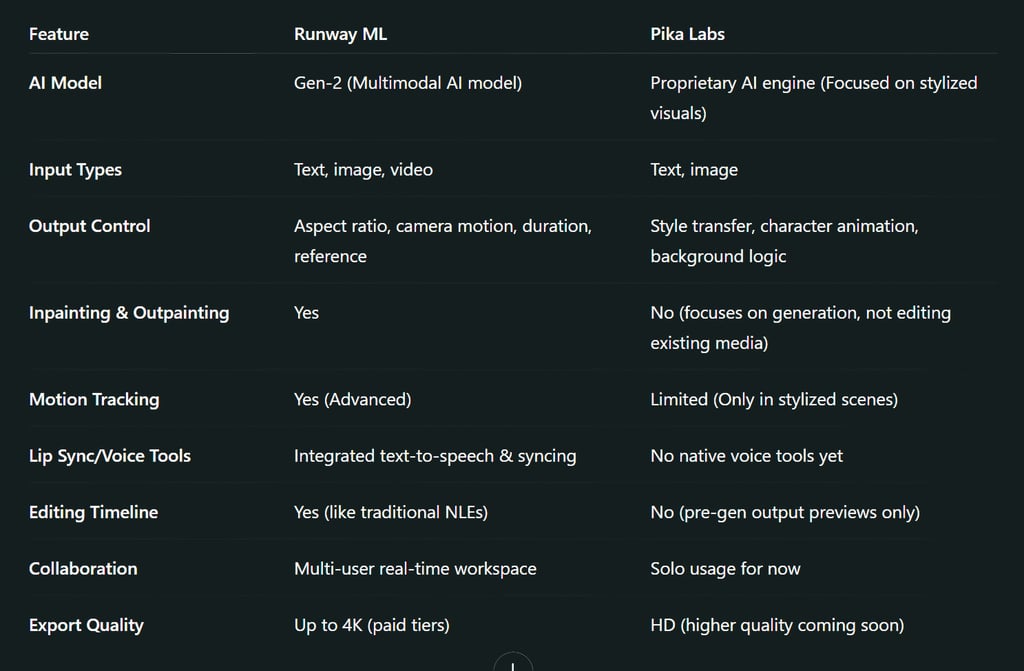

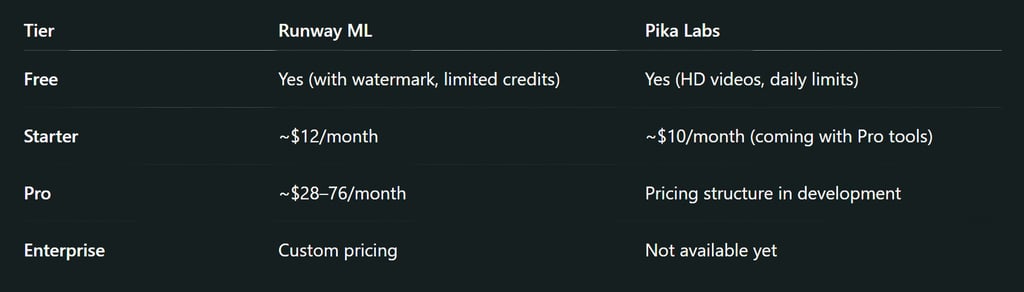

Explore a detailed comparison of Runway ML and Pika Labs—two top-tier AI video editing platforms. From technical capabilities to creative use cases, find out which tool best fits your content creation goals.


Sachin K Chaurasiya

6/27/2025 · 5 min read

In today’s fast-evolving digital landscape, video content reigns supreme. From YouTube creators to marketing teams, the demand for rapid, creative, and professional-looking videos is higher than ever. Enter AI video editors like Runway ML and Pika Labs—tools designed to revolutionize how videos are created and edited using artificial intelligence.

But which platform truly delivers for content creators, filmmakers, and brands? Let’s dive into a detailed comparison of Runway ML vs Pika Labs, exploring their features, AI capabilities, user interface, developer access, and ideal use cases.

Runway ML

Runway ML is a pioneer in creative AI tools. With its flagship Gen-2 model, it empowers creators to generate videos from text, image, or video inputs, edit footage using inpainting, and apply motion-tracking effects—all with just a few clicks.

Launch Year: 2018

Headquarters: New York, USA

Focus: Full-suite AI video editing, text-to-video, real-time collaboration, motion tracking, inpainting, and model training.

AI Video Generation Capabilities (Runway ML’s Gen-2)

Runway’s Gen-2 is a multi-modal AI video generator that lets users input a simple text prompt, image, or even a reference video. The model interprets these inputs to generate video scenes with camera motions, visual storytelling, and stylistic coherence.

Key Strengths

Camera fly-throughs and perspective realism

Consistency between frames

Motion style transfer

Precise masking tools for object tracking and replacement

User Interface & Workflow

Clean, professional dashboard

Drag-and-drop editing timeline

Compatible with standard editing formats

Plugin integration for Adobe and other tools

Better for professionals and agencies

Use Cases & Ideal Audience

Video editors and filmmakers

Marketing agencies needing branded content

Educational/Training videos

Virtual production studios

YouTube creators seeking full-edit tools

Model Architecture & Training Approach

Architecture

Gen-2 builds on latent diffusion models (LDMs) with a hybrid spatio-temporal transformer pipeline, enabling video consistency across frames. It works through a dual encoder system for text and image inputs, with a dedicated temporal attention mechanism.
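To make the idea of temporal attention more concrete, here is a minimal sketch in plain Python. It is not Gen-2's actual implementation, which is not public at this level of detail. It assumes one feature vector per frame for a single spatial location and skips the learned query, key, and value projections a real model would have. The function name `temporal_self_attention` is made up for illustration.

```python
import math

def temporal_self_attention(frames):
    """Mix each frame's feature vector with every other frame's.

    frames: list of T feature vectors (lists of floats), one per frame,
    all describing the same spatial location.
    """
    dim = len(frames[0])
    scale = 1.0 / math.sqrt(dim)
    output = []
    for query in frames:
        # Scaled dot-product similarity between this frame and every frame
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in frames]
        # Numerically stable softmax over the time axis
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted average of all frames' features
        mixed = [sum(w * frame[i] for w, frame in zip(weights, frames))
                 for i in range(dim)]
        output.append(mixed)
    return output
```

Each output vector is a weighted average of all frames' features, so information from neighboring frames flows into every frame. That sharing across time is what encourages frame-to-frame consistency.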

Training Data

Trained on a massive multimodal dataset (text, image, and video triplets), Gen-2 leverages large-scale motion sequences and cinematographic datasets optimized for semantic preservation across frames and time.

Key Innovations

Camera Motion Conditioning: Allows virtual camera movements (dolly, pan, zoom) using vector embeddings.

Depth Map Injection: Uses depth-aware mapping from input frames to improve motion realism.

Inpainting via Dynamic Masking: Spatial transformer networks automatically infer object boundaries to replace or erase elements contextually.
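Camera motion conditioning can be pictured as turning a requested camera move into a small vector and attaching it to the prompt representation. The sketch below is only an illustration under stated assumptions: the `CameraMotion` class, the [-1, 1] value range, and the concatenation step are all hypothetical and do not describe Runway's internal format.

```python
from dataclasses import dataclass

def _clamp(value, low=-1.0, high=1.0):
    return max(low, min(high, value))

@dataclass
class CameraMotion:
    # Hypothetical normalized controls; 0.0 means "no movement"
    dolly: float = 0.0  # move the camera forward (+) or backward (-)
    pan: float = 0.0    # rotate right (+) or left (-)
    zoom: float = 0.0   # zoom in (+) or out (-)

    def to_vector(self):
        # Clamp each control into the assumed [-1, 1] range
        return [_clamp(self.dolly), _clamp(self.pan), _clamp(self.zoom)]

def condition_on_camera(prompt_embedding, motion):
    # Simplest possible conditioning: append the motion vector to the embedding
    return list(prompt_embedding) + motion.to_vector()
```

For example, `condition_on_camera([0.1, 0.2], CameraMotion(pan=0.5))` yields a five-element vector whose last three entries carry the camera move. A production model would more likely pass the motion vector through learned layers than concatenate it directly.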

Pika Labs

Pika Labs emerged as a direct competitor in the AI video space, especially excelling in visual storytelling. Its intuitive Discord-based launch attracted early adopters, and its web platform now brings sleek, style-locked animations for creators with an emphasis on character design and expressive outputs.

Launch Year: 2023

Headquarters: San Francisco, USA

Focus: Text-to-video AI, real-time generation, style consistency, creative VFX, and short-form content creation.

AI Video Generation Capabilities (Pika Labs' Engine)

Pika Labs focuses on a stylized storytelling approach. Its text-to-video system excels at producing aesthetic visuals, fantasy-themed outputs, and expressive character-based clips. It is especially popular with TikTok creators, meme editors, and indie animators.

Key Strengths

Consistent character styling

Smooth animations with a cartoon/anime aesthetic

Fast render times (10–30 seconds)

Ability to chain scenes for narrative building

User Interface & Workflow

Originally Discord-based; now has a browser UI

Simpler prompt-driven workflow

Visual style customization with reference images

Ideal for hobbyists, storytellers, and fast content makers

Use Cases & Ideal Audience

TikTok and Instagram Reels creators

Storytellers and comic animators

Indie VFX creators

Fantasy and stylized video fans

Meme makers and short skit producers

Model Architecture & Training Approach

Architecture

While not fully disclosed, Pika Labs is believed to use a lightweight, fine-tuned diffusion model optimized for frame interpolation and stylized motion continuity. It likely includes a StyleGAN-inspired layer for aesthetic enhancement, layered atop a basic frame generator.

Training Strategy

Pika's training appears to focus on style-consistent sequences, prioritizing character retention, facial expression framesets, and 2.5D animation motion loops. It reportedly uses low-latency vector quantization (VQ) to reduce rendering time.
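Vector quantization replaces a continuous feature vector with the nearest entry in a small, fixed codebook, so later stages only need to handle a compact index. The sketch below shows that lookup in plain Python. The codebook values and the `quantize` function are illustrative assumptions, since Pika has not published its implementation.

```python
def quantize(vector, codebook):
    """Return (index, entry) of the codebook entry closest to vector."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Nearest-neighbor search over the codebook
    index = min(range(len(codebook)),
                key=lambda i: squared_distance(vector, codebook[i]))
    return index, codebook[index]

# Toy codebook with three 2-D entries (made-up values)
codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
index, entry = quantize([0.9, 0.8], codebook)
```

Here `[0.9, 0.8]` maps to the entry `[1.0, 1.0]`, so only the index needs to be stored or transmitted.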

Key Innovations

Prompt-Style Fusion Layer: Combines user-prompt semantics with visual style embeddings for scene coherence.

Auto Loop Smoothing Algorithm: Ensures end-to-start frame continuity for loopable reels.

Scene Chaining with Temporal Gradient Memory: Allows multiple scenes to blend into each other with memory-aware continuity.
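One simple way to get end-to-start continuity is a linear cross-fade that pulls the last few frames toward the first frame. The sketch below illustrates that general idea only. Pika's actual loop-smoothing algorithm is not public, and `smooth_loop` and its parameters are hypothetical. Frames are modeled as flat lists of pixel intensities.

```python
def smooth_loop(frames, blend_count):
    """Cross-fade the last blend_count frames toward the first frame."""
    if not 0 < blend_count < len(frames):
        raise ValueError("blend_count must be between 1 and len(frames) - 1")
    first = frames[0]
    result = [list(frame) for frame in frames]  # leave the input untouched
    start = len(frames) - blend_count
    for step in range(blend_count):
        # alpha rises toward 1 but never reaches it, so the final frame is
        # close to the first frame without duplicating it
        alpha = (step + 1) / (blend_count + 1)
        frame = frames[start + step]
        result[start + step] = [(1 - alpha) * p + alpha * q
                                for p, q in zip(frame, first)]
    return result
```

With `blend_count=1`, the final frame becomes the average of itself and the first frame, which softens the jump when playback wraps around.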

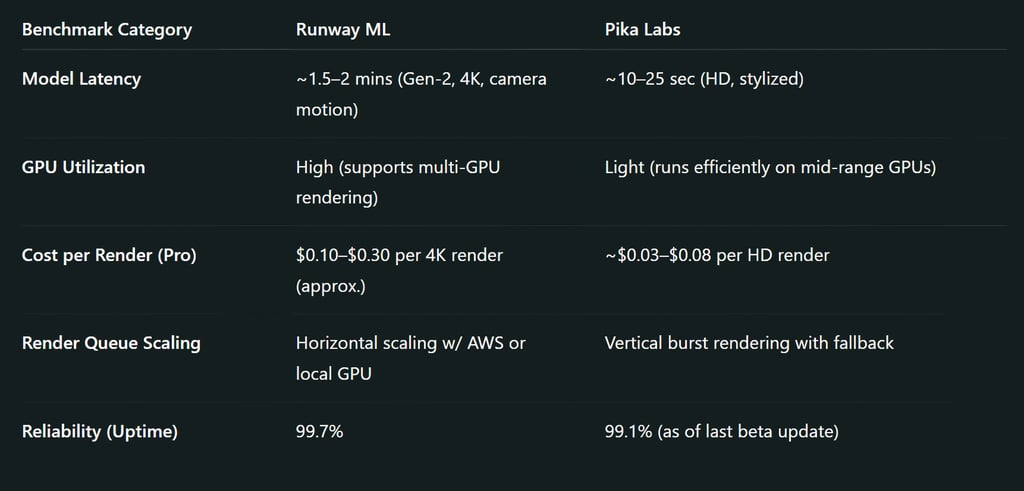

Inference Pipeline & Latency Handling

Runway ML

Inference Stack

Runway uses CUDA-accelerated pipelines, ONNX Runtime optimization, and model quantization in lower tiers. Pro accounts benefit from multi-GPU rendering on A100 or V100 GPUs.
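Model quantization trades a little precision for smaller, faster models by storing weights as low-bit integers. As a rough illustration, the sketch below applies symmetric int8 quantization to a list of weights. It is a generic textbook scheme, not Runway's pipeline, and the function names are chosen for this example.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]
```

After a round trip through `quantize_int8` and `dequantize`, each weight differs from the original by at most about half of `scale`. That is the precision given up in exchange for a roughly fourfold reduction in storage compared with 32-bit floats.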

Latency Handling

Runway applies asynchronous token buffering during prompt-to-video transformation, which reduces UI input delays even when the backend is under heavy load.

Advanced APIs

Runway provides developer APIs to:

Call text-to-video via REST

Manipulate scene graphs

Trigger inpainting tasks programmatically

Integrate with tools like Blender, Unreal Engine, and Unity
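As a rough picture of what calling a text-to-video endpoint over REST might look like, the sketch below builds (but does not send) an HTTP request using Python's standard library. Everything specific here is an assumption for illustration: the URL is a placeholder, and the field names `prompt` and `duration_seconds` are hypothetical. Consult Runway's official API documentation for the real endpoints, parameters, and authentication scheme.

```python
import json
import urllib.request

# Placeholder URL, NOT a real Runway endpoint
API_URL = "https://api.example.com/v1/text-to-video"

def build_text_to_video_request(prompt, api_key, duration_seconds=4):
    # Hypothetical payload fields, chosen only for illustration
    payload = {"prompt": prompt, "duration_seconds": duration_seconds}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. Video generation typically takes a while, so a real integration would usually submit a job and then poll for its result rather than wait on a single response.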

Pika Labs

Inference Stack

Pika likely uses a simplified WebRTC-powered serving mechanism on distributed GPU clusters, optimized for low-batch rendering and, during the beta, real-time feedback in Discord.

Latency Handling

Pika uses dedicated task queues on edge GPU servers with pre-cached style prompts. The result is a turnaround of under 15 seconds for most renders, which suits short-form content generation.
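The combination of a task queue and cached style prompts can be sketched with Python's standard library. This is a toy model of the pattern, not Pika's serving code: `style_embedding` stands in for an expensive style-encoding step, and the job format is invented for the example.

```python
import queue
from functools import lru_cache

@lru_cache(maxsize=128)
def style_embedding(style_name):
    # Stand-in for an expensive style-prompt encoding step; results are
    # cached so repeated styles are computed only once
    return tuple(float(ord(ch) % 7) for ch in style_name)

def drain(render_queue):
    """Process queued (job_id, style_name) jobs in first-in, first-out order."""
    results = []
    while not render_queue.empty():
        job_id, style_name = render_queue.get()
        results.append((job_id, style_embedding(style_name)))
        render_queue.task_done()
    return results
```

If two queued jobs request the same style, the second one reuses the cached embedding instead of recomputing it. That reuse is the kind of saving pre-caching is meant to deliver.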

Developer Access

Pika currently has limited APIs but plans to launch:

Prompt templating SDKs

Real-time character generator API

Scene blending from script-to-video pipelines

Advanced Use Cases & Experimental Integrations

Runway ML

Virtual Production Pipelines

Runway is already being integrated into virtual film studios to test camera motion, lighting scenarios, and environment pre-visualization.

AI Film Editor's Toolkit

Features like motion brush, object masking, and “AI Replace” are part of a broader vision for cinema-quality automation, particularly useful in storyboarding, previs, and rough cuts.

Multi-modal R&D

Runway’s Gen-2 roadmap includes:

Audio-reactive generation

Emotion-conditioned scenes

Reinforcement learning-enhanced edits

Pika Labs

Narrative Video Chains

Advanced users chain prompts to create multi-scene animated micro-stories, a technique similar to storyboard merging.
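Prompt chaining can be as simple as carrying a shared style and a short recap of earlier scenes into each new prompt. The helper below is a hypothetical sketch of that workflow. The prompt wording and the `chain_prompts` function are assumptions, not a Pika feature or API.

```python
def chain_prompts(scenes, style, carry_over=1):
    """Build one prompt per scene, repeating the style and recent context."""
    prompts = []
    for index, scene in enumerate(scenes):
        # Include up to carry_over previous scenes as context
        context = scenes[max(0, index - carry_over):index]
        parts = [f"Style: {style}."]
        if context:
            parts.append("Previously: " + " ".join(context))
        parts.append(f"Now: {scene}")
        prompts.append(" ".join(parts))
    return prompts
```

Each resulting prompt can then be submitted as a separate generation. Repeating the style and the preceding scene in every prompt is what nudges the outputs toward a consistent look and story.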

Custom Style Tokens (Beta Users)

Some power users can inject personal art styles or anime themes using uploaded reference datasets.

Voice-to-Video (Experimental)

Future builds are expected to accept voice prompts to influence scene emotion and pacing—opening doors to narrative-driven animation from spoken input.

Community & Support

Runway ML has robust documentation, community forums, and enterprise support.

Pika Labs thrives on Discord with active user feedback, sharing, and prompt advice.

Both communities are enthusiastic, but Runway leans professional, while Pika fosters creative experimentation.

In the battle of Runway ML vs Pika Labs, the winner depends entirely on your creative goals.

Choose Runway ML if you want professional-grade editing tools, camera realism, and inpainting/masking for detailed video work.

Choose Pika Labs if you’re into fast-paced, stylized storytelling, character-driven content, or short-form social media videos with vivid imagination.

Both tools are pushing boundaries—but in very different directions. The future of AI video editing will likely include features from both ecosystems. Until then, let your vision dictate your tool.

FAQs

Q: Can I use Runway ML and Pika Labs together?

Yes! Many creators generate visuals in Pika Labs and finalize edits in Runway for polish.

Q: Which tool is better for animation-style videos?

Pika Labs excels at anime and stylized animations, while Runway is better for realism.

Q: Are both platforms browser-based?

Yes, both are accessible via browsers without needing downloads.

Q: Which offers better free value?

Pika Labs currently offers more for free, with fast render times and quality outputs.

Q: Are these tools beginner-friendly?

Pika Labs is extremely beginner-friendly. Runway ML, while intuitive, has a steeper learning curve due to its depth.


All © Copyright reserved by Accessible-Learning Hub
