OpenCLIP vs ALIGN vs MetaCLIP vs DFN: Key Differences, Strengths, and Applications

OpenCLIP, ALIGN, MetaCLIP, and DFN are leading AI models in contrastive learning and data filtering. This comprehensive comparison covers their architectures, training methods, applications, and future potential. Learn how these models impact AI research, search engines, and social media technology.


Sachin K Chaurasiya

3/21/2025 · 4 min read

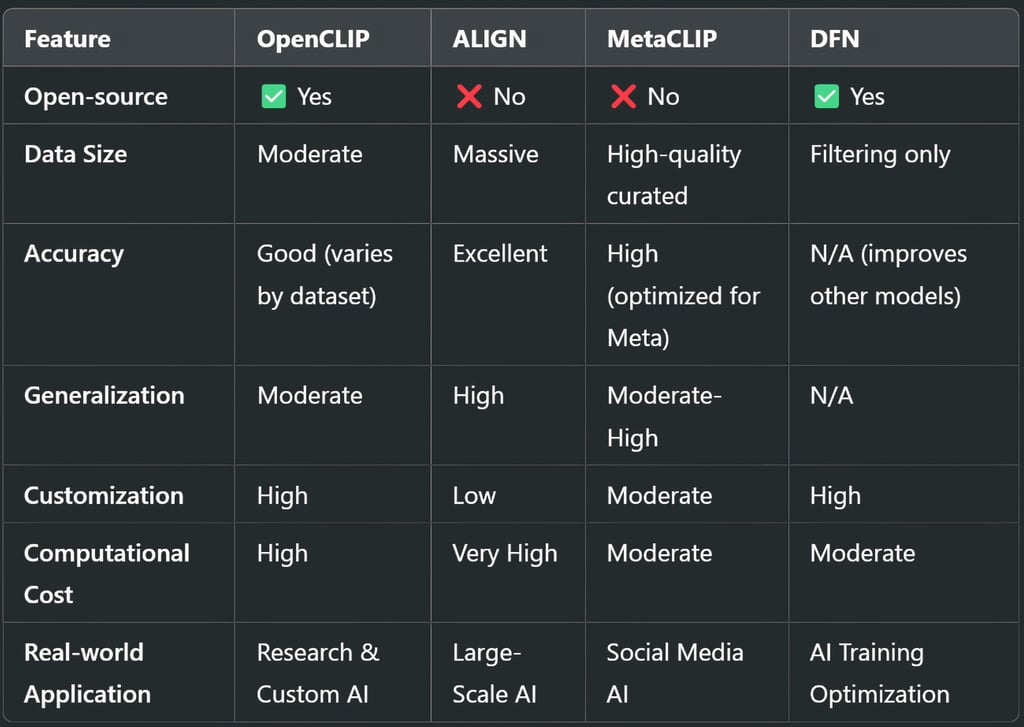

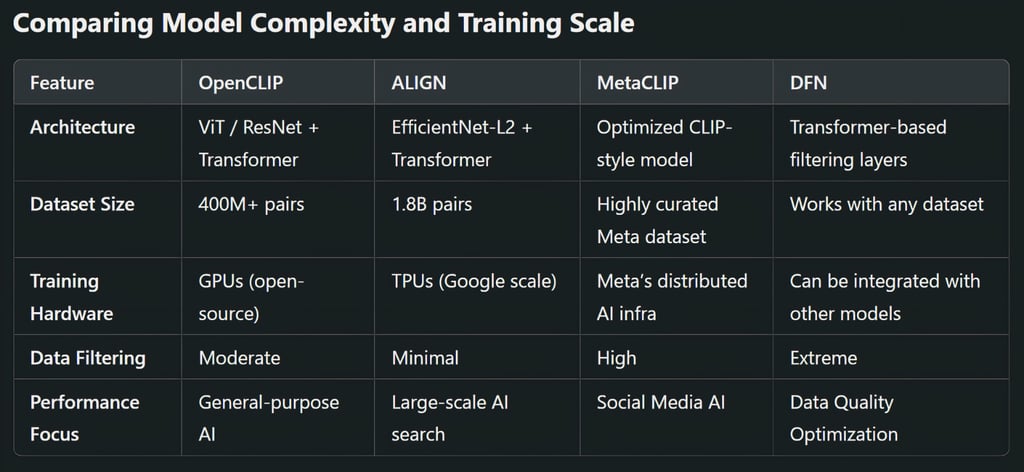

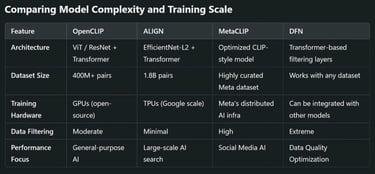

In the fast-evolving field of AI and machine learning, contrastive language-image pretraining (CLIP-style models) and data filtering strategies are reshaping how AI interprets and generates visual and textual information. Four major contenders in this space—OpenCLIP, ALIGN, MetaCLIP, and Data Filtering Networks (DFN)—stand out for their unique approaches and technological advancements. Let’s explore their key differences, strengths, applications, and future implications.

Understanding the Basics

Before diving into the specifics, let’s define each of these models:

OpenCLIP: An open-source implementation of OpenAI’s CLIP, used to train multimodal AI models on paired images and text.

ALIGN (A Large-scale ImaGe and Noisy-text embedding): A large-scale contrastive learning model developed by Google that learns image-text matching from a very large, noisy dataset of images and their alt-text.

MetaCLIP: Meta’s take on contrastive pretraining, designed to leverage high-quality data for efficient AI learning and deployment.

DFN (Data Filtering Networks): Networks trained to score image-text pairs so that noisy samples can be filtered out before pretraining, which improves training efficiency and downstream model quality.

OpenCLIP: Democratizing Multimodal AI

OpenCLIP was developed to make contrastive learning accessible to the research community. OpenAI released CLIP’s model weights and inference code but not its training data or training code; OpenCLIP fills that gap, letting researchers experiment with model training, dataset choices, and architecture variations.

Key Features

Open-source and customizable: Anyone can fine-tune and expand its capabilities.

Multilingual and multimodal: Supports various languages and datasets beyond English.

Efficient pretraining: Uses contrastive loss to align images with text captions.

Diverse backbone architectures: Supports different image encoders, including Vision Transformers (ViT) and convolutional networks such as ResNet.

Adaptability for niche applications: Can be trained on specialized datasets for industry-specific use cases.
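To make the contrastive loss mentioned above more concrete, here is a minimal pure-Python sketch of the symmetric image-text loss that CLIP-style models are generally trained with. It is an illustration only, not OpenCLIP’s training code: the function names, the toy 2-dimensional embeddings, and the temperature of 0.07 are assumptions chosen for readability.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def log_softmax(row):
    """Numerically stable log-softmax over a list of logits."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over the image-text similarity matrix.

    Row i of image_embs is assumed to be paired with row i of text_embs.
    The temperature value is an assumption for this sketch.
    """
    images = [l2_normalize(v) for v in image_embs]
    texts = [l2_normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j] = cosine similarity of image i and text j, divided by temperature
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    # Image-to-text direction: the correct caption for image i is text i.
    loss_i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image direction: same computation on the transposed matrix.
    logits_t = [list(col) for col in zip(*logits)]
    loss_t2i = -sum(log_softmax(logits_t[j])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

# Toy embeddings (hypothetical): matched pairs point in the same direction.
images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]

print("loss, matched pairs:", contrastive_loss(images, matched_texts))
print("loss, swapped pairs:", contrastive_loss(images, swapped_texts))
```

The loss is low when each image is most similar to its own caption and high when the pairing is scrambled, which is the signal that pulls matching images and captions together during training.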

Pros

✔️ Open-source and widely accessible.

✔️ Community-driven improvements.

✔️ Flexibility in architecture and dataset choices.

✔️ Growing support from AI researchers and industry professionals.

Cons

❌ Requires significant computational power.

❌ Performance varies depending on dataset quality.

❌ Needs extensive fine-tuning for optimal results.

ALIGN: Google’s Large-Scale Powerhouse

Google’s ALIGN takes a data-driven approach that favors scale over careful curation. Unlike OpenCLIP, which is designed for community-driven development, ALIGN is built for Google’s ecosystem and massive datasets.

Key Features

Over a billion image-text pairs: Trained on roughly 1.8 billion noisy image and alt-text pairs with only light filtering.

Superior generalization: Works well across diverse image-text tasks.

Zero-shot capabilities: Performs well without needing fine-tuning.

Cloud integration: Seamlessly integrates with Google’s AI services.

Efficient vector representation learning: Improves search and retrieval performance for multimodal content.
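The zero-shot and retrieval features above both come down to comparing embeddings in a shared vector space. The sketch below shows the idea with hand-written 3-dimensional vectors; in a real system the vectors would come from the model’s image and text encoders. The function names, prompts, and numbers are all hypothetical, and this is not ALIGN’s API, which is not publicly available.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_emb, label_embs):
    """Rank text prompts by similarity to the image embedding, best first."""
    scored = [(label, cosine(image_emb, emb)) for label, emb in label_embs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical text embeddings for three class prompts.
label_embs = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
# Hypothetical embedding of an image that lies closest to the "cat" prompt.
image_emb = [0.8, 0.2, 0.1]

ranking = zero_shot_classify(image_emb, label_embs)
for label, score in ranking:
    print(f"{score:.3f}  {label}")
```

No classifier is trained here: adding a new class only requires embedding one more text prompt, which is why these models can handle tasks they were never fine-tuned for. Retrieval works the same way, with the ranking run over a collection of images instead of a list of prompts.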

Pros

✔️ Large dataset allows for robust performance.

✔️ Strong generalization across multiple domains.

✔️ High scalability for enterprise applications.

✔️ Effective for commercial applications like Google Lens and image retrieval.

Cons

❌ Proprietary model, limiting external use.

❌ Data noise issues due to minimal filtering.

❌ Ethical concerns around dataset sourcing and biases.

MetaCLIP: Meta’s Precision-Tuned AI

MetaCLIP builds on CLIP-style training but emphasizes data refinement to improve model quality. Meta’s AI teams have worked on ensuring that the data fed into their models is clean, diverse, and high-quality, giving MetaCLIP a strategic edge in efficiency.

Key Features

Highly curated datasets: Reduces noise compared to ALIGN.

Optimized for social media applications: Tailored to work well with user-generated content.

Improved domain adaptation: Performs better with real-world content and user interactions.

Enhanced safety measures: Includes filtering mechanisms to detect harmful content.

Integration with Meta’s AI ecosystem: Powers features in Instagram, Facebook, and WhatsApp.
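One way to picture this kind of curation is balancing captions against a list of metadata entries, so that very common concepts do not drown out rare ones. The sketch below is a toy version loosely modeled on the balancing idea in the MetaCLIP paper, not Meta’s implementation: the function name, the substring matching rule, the tiny metadata list, and the cap of 2 are all assumptions made for illustration.

```python
import random

def balance_by_metadata(captions, metadata, cap, seed=0):
    """Keep captions so that no metadata entry dominates the dataset.

    Each caption is matched to metadata entries by substring. Entries with
    more than `cap` matches are downsampled to roughly `cap` in expectation;
    rarer entries keep all their captions. Captions with no match are dropped.
    """
    rng = random.Random(seed)
    # Step 1: find which metadata entries appear in each caption.
    matches = [[e for e in metadata if e in c.lower()] for c in captions]
    counts = {e: 0 for e in metadata}
    for matched in matches:
        for entry in matched:
            counts[entry] += 1
    # Step 2: per-entry keep probability, 1.0 for entries at or below the cap.
    keep_prob = {e: min(1.0, cap / n) for e, n in counts.items() if n > 0}
    # Step 3: keep a caption if any of its matched entries samples it.
    kept = []
    for caption, matched in zip(captions, matches):
        if any(rng.random() < keep_prob[e] for e in matched):
            kept.append(caption)
    return kept

# Hypothetical data: one very common concept and two rare ones.
captions = ["a photo of a dog"] * 100 + ["a red panda in a tree", "an axolotl in water"]
metadata = ["dog", "red panda", "axolotl"]

kept = balance_by_metadata(captions, metadata, cap=2)
print("kept", len(kept), "of", len(captions), "captions")
```

Rare concepts survive intact while the overrepresented one is cut down sharply, which flattens the distribution the model sees during training.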

Pros

✔️ A cleaner dataset results in better accuracy.

✔️ Strong performance in content moderation and media applications.

✔️ Efficient deployment across Meta’s ecosystem.

✔️ Optimized for real-world, user-generated data.

Cons

❌ Code and weights are public, but under a non-commercial license that restricts commercial use.

❌ May not generalize as well to datasets outside its training scope.

❌ Primarily built for Meta’s ecosystem, limiting broader applications.

DFN (Data Filtering Networks): The Hidden Backbone of AI

While OpenCLIP, ALIGN, and MetaCLIP focus on pretraining multimodal AI, DFNs specialize in data curation, an often overlooked yet critical aspect of AI training. A DFN is itself a neural network: it scores each image-text pair in a large, uncurated pool, and low-scoring pairs are removed before training begins, improving efficiency and performance.

Key Features

Automated data cleaning: Reduces noise and enhances dataset quality.

Adaptive learning: Adjusts to different types of training data dynamically.

Scalability: Works well with large datasets, optimizing training speed.

Ethical filtering: Helps remove biased or problematic data points.

Enhances downstream model performance: Ensures higher-quality pretraining.
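At its simplest, the filtering step means scoring every image-text pair and keeping only the best-scoring share of the pool. The sketch below assumes the scores already exist; in practice they would come from the filtering network. The function name, the example scores, and the 50% keep fraction are hypothetical choices for illustration.

```python
def filter_top_fraction(samples, scores, keep_fraction=0.5):
    """Keep the highest-scoring fraction of samples, best first.

    `scores[i]` is assumed to be the filtering network's quality score for
    `samples[i]` (higher means the caption fits the image better).
    """
    if len(samples) != len(scores):
        raise ValueError("samples and scores must have the same length")
    if not 0 < keep_fraction <= 1:
        raise ValueError("keep_fraction must be in (0, 1]")
    keep = max(1, int(len(samples) * keep_fraction))
    ranked = sorted(zip(scores, samples), key=lambda pair: pair[0], reverse=True)
    return [sample for _, sample in ranked[:keep]]

# Hypothetical pool of image-text pairs with made-up scores.
pool = [
    ("img_001.jpg", "a golden retriever catching a frisbee"),
    ("img_002.jpg", "click here for more"),
    ("img_003.jpg", "sunset over a mountain lake"),
    ("img_004.jpg", "IMG_4032"),
]
scores = [0.31, 0.05, 0.28, 0.02]

for image, caption in filter_top_fraction(pool, scores, keep_fraction=0.5):
    print(image, "|", caption)
```

Choosing the keep fraction is a trade-off: a stricter cut gives cleaner data but fewer training examples, so it is usually tuned against downstream model performance.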

Pros

✔️ Enhances the performance of other AI models by refining datasets.

✔️ Reduces computational overhead by filtering out irrelevant data.

✔️ Improves accuracy by focusing on high-quality inputs.

✔️ Addresses ethical concerns by removing biased data.

Cons

❌ Does not produce a multimodal model on its own; it produces the filtered dataset that other models are trained on.

❌ Effectiveness depends on the initial dataset structure.

❌ Computationally expensive for real-time filtering.

Future Implications and Trends

Hybrid Approaches: Future AI systems may integrate DFN with OpenCLIP or MetaCLIP for better efficiency and ethical AI training.

More Transparency: Companies like Google and Meta may face increased pressure to open-source models or improve dataset documentation.

Better Fine-tuning Methods: AI researchers will continue to develop methods to optimize CLIP-based models for specific industries.

Each model serves a unique purpose, and the choice depends on specific AI needs. A combination of these technologies could lead to breakthroughs in AI efficiency, ethics, and performance.


All © Copyright reserved by Accessible-Learning Hub
