MidJourney 6.2 vs 7.5: The Artistic AI Duel That’s Defining Visual Creativity

Compare MidJourney 6.2 vs 7.5 in this in-depth analysis of AI art evolution. Explore differences in rendering quality, prompt control, image realism, neural architecture, and professional features. Ideal for artists, designers, and AI creators looking to unlock the full creative power of generative visuals.


Sachin K Chaurasiya

6/22/2025 · 7 min read

The world of AI art is moving fast, and Midjourney stands at the center of this revolution. Known for blending realism with artistic surrealism, MidJourney’s iterative versions push the envelope on what artificial intelligence can interpret, render, and co-create. While Version 6.2 set a new bar for control and creative quality, Version 7.5 introduces a substantially evolved experience: richer in detail, smarter in semantics, and faster in production.

In this article, we dive deep into how MidJourney 6.2 and 7.5 differ, where they shine, and which suits your style, whether you’re a concept artist, product designer, book illustrator, filmmaker, or digital creator.

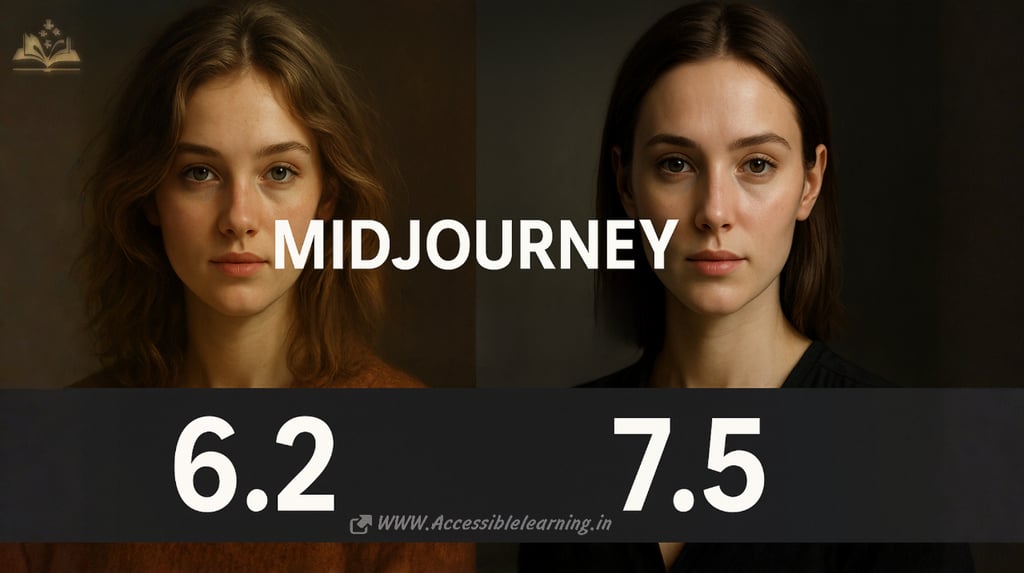

Evolution in Rendering Quality

MidJourney 6.2: Foundational Realism and Painterly Control

Improved image sharpness over v5.2 with enhanced edge clarity and texture realism.

Notably refined lighting behavior—images reflect more natural bounce light and shadow depth.

Good for both photorealistic and stylized art, with enough flexibility via --style parameters.

Still struggled with anatomy (particularly hands and complex facial angles).

Tended to over-stylize abstract concepts, requiring prompt fine-tuning.

MidJourney 7.5: Hyperrealism Meets Artistic Precision

Built on a newer architecture with massively improved photorealism—especially in skin textures, textiles, foliage, and dynamic lighting.

Uses diffusion modeling paired with attention-based semantic understanding, producing images that reflect real-world physics (like refraction through glass or cloth movement).

Faces and hands are far more reliable, even in close-ups or dynamic poses.

Enables depth-aware generation, giving images more dimensionality and cinematic quality.

Deep Insight: MidJourney 7.5 integrates temporal and spatial relationships better than any previous version—meaning scenes look more “alive,” and objects behave more like they would in a photograph or film still.

Prompt Responsiveness & Creative Control

MidJourney 6.2

Stable parsing of detailed prompts.

Provides creative flexibility via --style, --chaos, --ar, and --weird parameters.

Supports stylized visuals like illustrative, anime, retro, and conceptual art.

Struggles slightly with abstract logic or metaphor-heavy prompts (e.g., “time melting into silence”).
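Parameters such as --style, --chaos, --ar, and --weird are appended to the end of the prompt text as flags. As a minimal sketch, the hypothetical helper `build_prompt` below assembles such a prompt string from a subject and keyword parameters. It only formats text; it does not talk to MidJourney, and the flag values in the example are illustrative rather than recommended settings.

```python
def build_prompt(subject: str, **params) -> str:
    """Join a subject with MidJourney-style '--flag value' pairs.

    Hypothetical helper for illustration: it only formats text.
    A value of True emits a bare flag (e.g. --tile); None or False skips it.
    """
    parts = [subject.strip()]
    for name, value in params.items():  # keyword order is preserved
        if value is None or value is False:
            continue  # skip unset or disabled options
        flag = "--" + name
        if value is True:
            parts.append(flag)  # bare switch with no value
        else:
            parts.append(f"{flag} {value}")
    return " ".join(parts)


prompt = build_prompt(
    "a lighthouse in a storm, oil painting",
    ar="3:2",
    chaos=20,
    weird=250,
    style="raw",
)
print(prompt)
# a lighthouse in a storm, oil painting --ar 3:2 --chaos 20 --weird 250 --style raw
```

Keeping the parameters in a dictionary rather than a hand-typed string makes it easy to sweep one value (for example --chaos) while holding the rest of the prompt fixed.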

MidJourney 7.5

Shows deeper prompt comprehension, especially for:

Emotional tones (“moody,” “melancholic,” “introspective”)

Color theories (“triadic harmony,” “desaturated twilight”)

Compositional cues (“Dutch angle,” “rule of thirds,” “diagonal flow”)

Better control over image composition with advanced regional prompting.

Experimental support for multi-style blending, where you can reference multiple aesthetics or artists.

Deep Insight: 7.5 is less about creating something “impressive” and more about making something “intentional.” It reads the prompt not just as words, but as design language.

Speed, Stability & Generation Consistency

MidJourney 6.2

Stable and fast on standard resolutions.

Some inconsistencies in batch variation (especially on remix or zoom-out).

Quality fluctuates slightly under high server load.

MidJourney 7.5

Roughly 15-20% faster on standard generations.

Much better determinism: repeating a prompt with the same seed returns more predictable results.

Improved multi-image coherence: great for storyboards, comic panels, or frame-by-frame animation support.

Smoother pan and outpaint extensions without jarring stylistic shifts.
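Seeds make results repeatable because a diffusion model starts from random noise, and fixing the seed fixes that starting noise. The minimal sketch below uses Python's `random` module as a stand-in for whatever noise generator MidJourney actually uses, which is not public; `initial_noise` is a hypothetical name.

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Draw a toy starting 'latent' as Gaussian noise from a seeded generator."""
    rng = random.Random(seed)  # independent generator; global state untouched
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


a = initial_noise(seed=1234)
b = initial_noise(seed=1234)
c = initial_noise(seed=1235)

print(a == b)  # True: same seed, identical starting noise
print(a == c)  # False: a different seed starts from different noise
```

Identical starting noise is necessary but not sufficient for identical images: the model version, parameters, and any hardware-level nondeterminism also have to match, which is why a fixed seed gives "more predictable" rather than guaranteed-identical results.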

Pro Tip: 7.5 uses a progressive refinement pipeline rather than linear generation: an initial draft becomes visible quickly and is improved in later steps. This reduces wait time and failed images.
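The idea of a progressive pipeline, where a rough draft appears early and later steps refine it, can be illustrated with a toy loop. This is not MidJourney's pipeline, which is unpublished: the sketch assumes a known `target` and simply moves an estimate a fixed fraction toward it per step, yielding every intermediate state so a caller could show previews.

```python
from typing import Iterator


def progressive_refine(
    start: list[float], target: list[float], steps: int = 4, rate: float = 0.5
) -> Iterator[list[float]]:
    """Toy coarse-to-fine loop: yield an intermediate 'draft' after every step."""
    current = list(start)
    for _ in range(steps):
        # Move each value part of the way toward the target.
        current = [c + rate * (t - c) for c, t in zip(current, target)]
        yield current  # a caller can render this as a preview


for step, draft in enumerate(progressive_refine([0.0, 0.0], [1.0, -1.0]), start=1):
    print(step, draft)
# 1 [0.5, -0.5]
# 2 [0.75, -0.75]
# 3 [0.875, -0.875]
# 4 [0.9375, -0.9375]
```

Using a generator means the caller decides how often to display a draft, and can stop early once a preview already looks wrong.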

New Tools, Parameters, & Capabilities

Version 6.2

Supported:

Zoom Out/Pan

Vary (Region) for inpainting

Remix Mode

Compatible with --style raw, --ar, --v, --seed, etc.

Version 7.5

Introduces:

Contextual prompt blending (e.g., “cyberpunk meets Baroque architecture” now blends visually, not just linearly)

Better inpainting and outpainting precision with reduced edge halos.

Support for semantic zooms: content inside zoom-out remains coherent (e.g., a cityscape doesn’t lose scale realism).

Perspective Simulation: prompts like “35mm lens” or “telephoto depth compression” now yield believable camera optics.

Artist’s Edge: This allows creators to simulate not only visuals but also lens language—vital for filmmakers, photographers, and scene designers.
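Lens cues such as “35mm lens” or “telephoto” map onto real optics. For a rectilinear lens on a full-frame sensor (36 mm wide), the horizontal angle of view is 2·atan(w / 2f). The short check below is standard camera geometry, not a description of how MidJourney interprets the prompt; it shows why a 35 mm prompt should look wider than a 50 mm or 200 mm one.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0  # width of a 35 mm "full-frame" sensor


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float = FULL_FRAME_WIDTH_MM) -> float:
    """Horizontal angle of view of a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


for f in (35, 50, 200):
    print(f"{f} mm -> {horizontal_fov_deg(f):.1f} degrees")
# 35 mm -> 54.4 degrees
# 50 mm -> 39.6 degrees
# 200 mm -> 10.3 degrees
```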

Aspect Ratios, Upscaling, & Resolution

MidJourney 6.2

Good support for custom ratios (--ar 9:16, --ar 3:2, etc.).

Upscaling to 2x or 4x was available, but detail recovery was inconsistent.

Occasional smoothing or pixel loss in tiny elements during upscale.
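Aspect ratios and upscaling ultimately come down to arithmetic on width and height. As a sketch, assuming a base budget of about one megapixel and sides snapped to multiples of 64 (common in latent-diffusion models, not a documented MidJourney value), the hypothetical helpers below turn an --ar string into base and upscaled sizes.

```python
import math


def dims_for_ratio(ar: str, target_pixels: int = 1024 * 1024, multiple: int = 64) -> tuple[int, int]:
    """Pick width/height near target_pixels with the given 'W:H' aspect ratio.

    Assumptions: a ~1 MP budget and sides snapped to a multiple of 64.
    """
    w_ratio, h_ratio = (int(x) for x in ar.split(":"))
    # Solve w * h = target and w / h = w_ratio / h_ratio for h, then derive w.
    height = math.sqrt(target_pixels * h_ratio / w_ratio)
    width = height * w_ratio / h_ratio

    def snap(value: float) -> int:
        # Round to the nearest multiple, never below one multiple.
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


def upscale(dims: tuple[int, int], factor: int) -> tuple[int, int]:
    """An Nx upscale multiplies both sides by N, so pixel count grows by N squared."""
    return dims[0] * factor, dims[1] * factor


for ar in ("1:1", "3:2", "9:16"):
    base = dims_for_ratio(ar)
    print(ar, base, upscale(base, 2), upscale(base, 4))
# 1:1 (1024, 1024) (2048, 2048) (4096, 4096)
# 3:2 (1280, 832) (2560, 1664) (5120, 3328)
# 9:16 (768, 1344) (1536, 2688) (3072, 5376)
```

Snapping to multiples of 64 shifts the ratio slightly (1280:832 is about 1.54 rather than 1.5), and a 4x upscale has to invent 15 of every 16 output pixels, which is where inconsistent detail recovery comes from.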

MidJourney 7.5

Advanced dynamic resolution engine.

Super-resolution upscaling with new AI sharpening techniques ensures crispness in eyes, fabric textures, and line art.

Better preservation of detail in long-format outputs (banners, vertical reels, scroll illustrations).

Adds native support for grid layout, poster-friendly spacing, and center-weighted compositions.

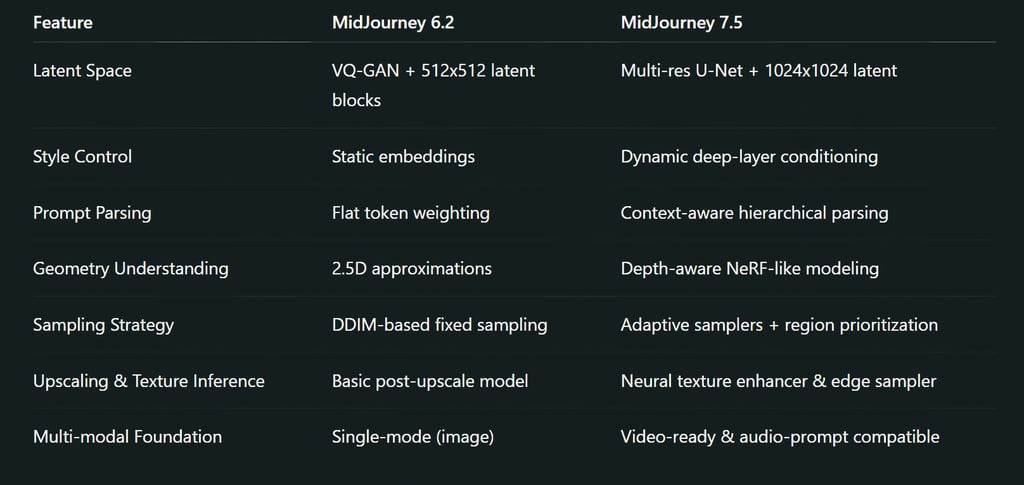

Latent Space Navigation & Compression Algorithms

MidJourney 6.2

Likely uses a VQ-GAN-based latent diffusion model with moderate-resolution encoding (typically 512x512 or 704x704 in latent space).

The spatial compression algorithm balances detail against generalization, which leads to slight oversimplification in clustered compositions.

Struggles with overlapping object layers due to compression noise artifacts when generating high-entropy visuals (e.g., busy cityscapes or forests).
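VQ-GAN-style models compress an image by snapping each latent vector to its nearest entry in a learned codebook. The toy sketch below uses a hand-picked 2-D codebook (real codebooks are learned and far larger) to show both the lookup and the quantization error, the source of the compression noise described above.

```python
def quantize(vectors, codebook):
    """Replace each vector with its nearest codebook entry (squared L2 distance).

    Returns the chosen indices and the quantized vectors.
    """
    indices, quantized = [], []
    for v in vectors:
        dists = [sum((a - b) ** 2 for a, b in zip(v, code)) for code in codebook]
        best = min(range(len(codebook)), key=dists.__getitem__)
        indices.append(best)
        quantized.append(codebook[best])
    return indices, quantized


def mean_squared_error(xs, ys):
    """Average squared difference across all components of paired vectors."""
    total = sum((a - b) ** 2 for x, y in zip(xs, ys) for a, b in zip(x, y))
    count = sum(len(x) for x in xs)
    return total / count


codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]  # toy, hand-picked
latents = [(0.1, 0.2), (0.9, 0.1), (0.45, 0.55)]

idx, q = quantize(latents, codebook)
print(idx)  # [0, 1, 2]
print(round(mean_squared_error(latents, q), 4))  # 0.0792
```

The in-between vector (0.45, 0.55) contributes most of the error because no codebook entry is close to it; busy scenes full of such "in-between" detail are exactly where a small codebook loses information.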

MidJourney 7.5

Likely upgraded to a multi-scale U-Net architecture with dynamic latent resolution handling (~1024x1024+).

Improved token-wise attention over compressed spatial blocks, allowing more precise rendering in tightly packed scenes.

Integrates adaptive denoising scheduling, ensuring better retention of microtextures and boundary definitions even under style-heavy prompts.

Insight: 7.5 can "see" deeper into compressed visual embeddings and reactivate lost texture signals, which makes its results feel lifelike and coherent.
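Token-wise attention is a standard mechanism: each query scores every key, the scores are softmax-normalized, and the output is a weighted mix of the values. Below is a dependency-free sketch of scaled dot-product attention, showing the general technique only; MidJourney's actual layers are unpublished, and the three "patch" vectors are made up.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) weighted sum of values."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three toy "patches" (keys/values) and one query that resembles the second patch.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
print(attention([[0.0, 3.0]], keys, values))  # leans toward the second value, [0, 10]
```

When all scores are equal the weights become uniform and the output is just the average of the values; sharper, more distinct scores are what let attention pick out the relevant patch in a crowded scene.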

Style Conditioning & Token Modulation

MidJourney 6.2

Uses discrete style embeddings mapped from predefined internal tags (e.g., --style raw, --style cute).

These style modes are shallow-layer injections and only influence early-stage latent diffusion, limiting their dynamic range.

MidJourney 7.5

Implements deep latent conditioning with transformer-based Style Attention Modules.

Allows the model to modulate style context throughout the diffusion process dynamically (think of it as “style memory” across each render stage).

Introduces sub-token weight balancing, enabling partial influence blending (e.g., “Da Vinci + Pixar + Modern Vogue” as a unified hybrid style).

Outcome: You get far more natural cross-style fusions and subtle aesthetic overlays in 7.5 that don't clash or overwrite content elements.
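Blending several style influences at different strengths can be pictured as a weighted average of style embedding vectors. The three-dimensional "embeddings" below are invented placeholders (real ones are learned and high-dimensional), and this simple weighting scheme is an assumption for illustration, not how MidJourney's Style Attention Modules work.

```python
def blend_styles(styles: dict[str, list[float]], weights: dict[str, float]) -> list[float]:
    """Weighted average of style vectors; weights are normalized to sum to 1."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    dim = len(next(iter(styles.values())))
    blended = [0.0] * dim
    for name, w in weights.items():
        for i, x in enumerate(styles[name]):
            blended[i] += (w / total) * x
    return blended


# Hypothetical placeholder vectors, one axis per style for readability.
styles = {
    "da_vinci": [1.0, 0.0, 0.0],
    "pixar": [0.0, 1.0, 0.0],
    "vogue": [0.0, 0.0, 1.0],
}
print(blend_styles(styles, {"da_vinci": 2, "pixar": 1, "vogue": 1}))
# [0.5, 0.25, 0.25]
```

A single blended vector applied once is closer to the "shallow" conditioning described for 6.2; the article's claim for 7.5 is that style context is re-applied throughout the diffusion process rather than injected once.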

Prompt Tokenization & Embedding Strategy

MidJourney 6.2

Used fixed-length prompt token slicing.

Treated every term almost equally unless forced with :: weight syntax.

Prone to semantic drift in long prompts or multi-concept sentences.

MidJourney 7.5

Incorporates contextual token weighting using a relative attention strategy similar to OpenCLIP- or DeepFloyd-style parsing.

Understands prompt hierarchies: for example, it treats the umbrella as the focus in "a red umbrella in the snow" but the scene as the focus in "a snowy scene with a red umbrella."

Enhanced natural language understanding with embedded syntax trees for object placement and semantic hierarchy.

Example: In v6.2, “a horse beside a woman in a red coat” might confuse object roles; in v7.5, the coat stays with the woman unless re-weighted intentionally.
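The :: syntax mentioned above splits a prompt into separately weighted parts. The sketch below parses that syntax, assuming the convention that a number right after :: weights the text before it, that an unweighted part counts as 1, and that weights are relative (so they can be normalized). `parse_multi_prompt` and `normalized` are hypothetical helpers, not part of any MidJourney tooling.

```python
import re

_LEADING_NUMBER = re.compile(r"^\s*(-?\d+(?:\.\d+)?)")


def parse_multi_prompt(prompt: str) -> list[tuple[str, float]]:
    """Split a 'text::weight text::weight' multi-prompt into (text, weight) pairs.

    Assumed convention: a number right after '::' weights the text before it;
    parts without an explicit weight default to 1. Text that itself starts
    with a number right after '::' is ambiguous and is read as a weight.
    """
    pieces = prompt.split("::")
    parts: list[tuple[str, float]] = []
    text = pieces[0]
    for nxt in pieces[1:]:
        m = _LEADING_NUMBER.match(nxt)
        weight = float(m.group(1)) if m else 1.0
        parts.append((text.strip(), weight))
        text = nxt[m.end():] if m else nxt  # remainder starts the next part
    if text.strip():
        parts.append((text.strip(), 1.0))
    return parts


def normalized(parts: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Rescale weights so they sum to 1 (the total must be positive)."""
    total = sum(w for _, w in parts)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return [(t, w / total) for t, w in parts]


print(parse_multi_prompt("a horse::2 a woman in a red coat::1"))
# [('a horse', 2.0), ('a woman in a red coat', 1.0)]
```

Splitting "a horse" and "a woman in a red coat" into separate parts is also a practical way to keep an attribute such as the coat attached to the right subject.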

Geometry Awareness & Structure Representation

MidJourney 6.2

Structure understanding derived from 2.5D approximations within latent projections.

Lacked true camera awareness, causing distortions under tight angles or architectural prompts.

MidJourney 7.5

Likely integrates NeRF-inspired 3D approximation layers that simulate object geometry and space relationships better.

Introduces depth map estimation internally to maintain volumetric realism and spatial coherence.

Supports camera-type prompts like “fish-eye,” “tilt-shift,” “isometric,” and “50mm lens” with accurate focal projection simulation.

Visual Benefit: The geometry feels photographically plausible, making objects and environments more immersive.
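Focal projection itself follows the pinhole camera model: a camera-space point (X, Y, Z) lands on the image plane at (f·X/Z, f·Y/Z). The sketch below is that textbook model, not MidJourney internals (NeRF-inspired or otherwise); it compares how big a 1.8 m person appears at 5 m and 50 m through a 35 mm and a 200 mm lens.

```python
def project(point: tuple[float, float, float], focal_length: float) -> tuple[float, float]:
    """Pinhole projection of a camera-space point (Z > 0, in front of the lens)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return focal_length * x / z, focal_length * y / z


def apparent_height(height: float, distance: float, focal_length: float) -> float:
    """Image-plane height of an upright object whose base projects to y = 0."""
    return project((0.0, height, distance), focal_length)[1]


for f in (35.0, 200.0):
    near = apparent_height(1.8, 5.0, f)  # person 5 m away (height in mm, before cropping)
    far = apparent_height(1.8, 50.0, f)  # person 50 m away
    print(f"f={f:.0f}: near={near:.2f}, far={far:.2f}, ratio={near / far:.1f}")
# f=35: near=12.60, far=1.26, ratio=10.0
# f=200: near=72.00, far=7.20, ratio=10.0
```

The near/far ratio is the same for both lenses: focal length scales everything equally, and the "compressed" telephoto look comes from shooting from farther away, with the long lens then magnifying the scene.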

Fine-Tuning Layers & Sampler Strategy

MidJourney 6.2

Employed a fixed denoising sampling schedule (likely DDIM or PNDM), which made outputs deterministic but less diverse unless chaos parameters were tweaked.

Limited use of post-processing neural upsamplers.

MidJourney 7.5

Supports adaptive sampling that varies the number of steps based on the complexity of the prompt (faster for simpler prompts, richer for complex ones).

Integrates a neural detail enhancer during final denoising stages that adds high-frequency textures like pores, dust, scratches, reflections, and hair strands.

Utilizes smart-variance samplers that prioritize difficult regions (like eyes, edges, and hands) during render.

For creators: Even minor variations in seed now result in highly usable outputs—ideal for building visual iterations and portfolios.
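A fixed DDIM-style schedule typically means a model trained on many noise levels (1,000 is a common choice) is sampled at an evenly spaced subset of them. The first function below computes such a uniform subsequence. The second is a purely hypothetical heuristic for "adaptive" step counts that scales steps with prompt length, included only to make the idea concrete; MidJourney has not published how it chooses step counts.

```python
def uniform_timesteps(num_train_steps: int = 1000, num_inference_steps: int = 50) -> list[int]:
    """DDIM-style uniform subsequence of training timesteps, noisiest first."""
    stride = num_train_steps // num_inference_steps
    return list(range(0, num_train_steps, stride))[::-1]


def adaptive_step_count(prompt: str, low: int = 20, high: int = 60, words_for_max: int = 40) -> int:
    """Hypothetical heuristic: more sampling steps for longer, more detailed prompts."""
    words = len(prompt.split())
    fraction = min(words, words_for_max) / words_for_max
    return round(low + fraction * (high - low))


schedule = uniform_timesteps(1000, 50)
print(len(schedule), schedule[:3], schedule[-1])  # 50 [980, 960, 940] 0
print(adaptive_step_count("a red apple"))  # 23
print(adaptive_step_count("a " * 80))  # 60
```

Word count is a crude stand-in for "complexity"; a real adaptive sampler would more plausibly react to how much the image is still changing between steps.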

Multi-Modal Output Readiness (Future-Oriented Design)

While not fully exposed to users yet, internal clues in Midjourney 7.5 suggest:

Temporal Coherence Encoding: The model can preserve object shape across adjacent prompts—laying the groundwork for frame-by-frame video generation.

Audio-aware prompting is in the experimentation phase, allowing “sound to visual mood” translations (e.g., prompt: “ambient piano, rainy Tokyo night”).

Potential integration with pose control, gesture modeling, and LoRA-style personalization layers for user-tuned model injections.

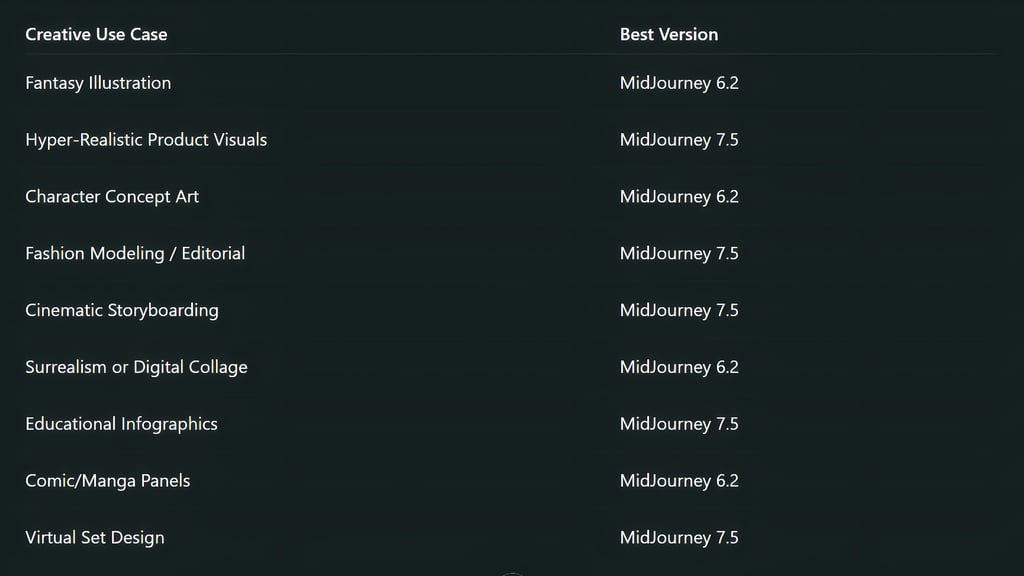

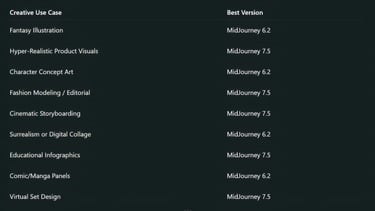

MidJourney 6.2 and 7.5 aren’t merely two versions—they represent two ideologies of generative art:

6.2 speaks to the artist’s soul: more flexible, loose, interpretive, and imaginative.

7.5 serves the visual perfectionist: precise, photo-authentic, emotionally nuanced, and technically rich.

In a practical workflow, creators might ideate and explore concepts using 6.2, then refine and finalize visuals using 7.5. The pairing creates a feedback loop where creativity and accuracy work in tandem.

FAQs

Q: What is the main difference between MidJourney 6.2 and 7.5?

The key difference lies in image realism, prompt understanding, and structural accuracy. MidJourney 7.5 offers significantly improved photorealism, facial and hand rendering, and style fusion compared to 6.2, which is more stylized and creative in abstract concepts.

Q: Does MidJourney 7.5 support better prompt accuracy?

Yes. MidJourney 7.5 introduces context-aware parsing and better semantic understanding, resulting in more accurate interpretations of layered, descriptive, and nuanced prompts.

Q: Is MidJourney 7.5 better for professional or commercial use?

Absolutely. With higher resolution support, refined upscaling, and detail-aware generation, 7.5 is ideal for product visuals, ads, fashion, and film previsualizations.

Q: Can I access both 6.2 and 7.5 with a standard subscription?

Yes. MidJourney allows users to choose between model versions with the --v 6.2 or --v 7.5 prompt parameter, or via the /settings menu inside Discord.

Q: Which version handles faces and hands better?

MidJourney 7.5 has made significant strides in rendering anatomically correct faces, fingers, and hands—even in complex poses or with non-default lighting conditions.

Q: Are style combinations more natural in MidJourney 7.5?

Yes. 7.5 uses deep-style conditioning, enabling seamless fusion of multiple art styles or visual aesthetics without blending artifacts or distortions.

Q: Does 7.5 support animation or video output?

While direct video generation is not yet available, 7.5 has temporal consistency improvements and frame coherence that make it suitable for storyboard creation and frame-by-frame animation pre-production.

Q: What are the best use cases for MidJourney 6.2 now?

6.2 remains valuable for concept art, surrealism, fantasy scenes, abstract ideas, and stylized illustration due to its creative freedom and artistic flexibility.


© Accessible-Learning Hub. All rights reserved.

