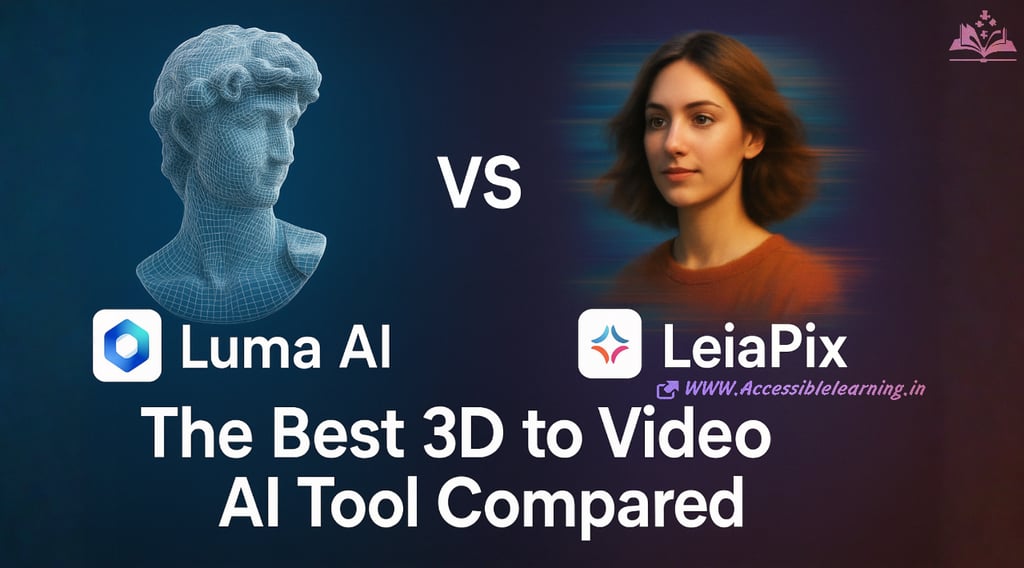

Luma AI vs LeiaPix: The Best 3D to Video AI Tool Compared

Discover the ultimate comparison between Luma AI and LeiaPix—two powerful AI tools revolutionizing the 3D-to-video landscape. Whether you're aiming for cinematic photorealism or fast animated depth, this in-depth guide explores technology, workflows, use cases, and expert-level insights to help you choose the right tool for your creative vision.

Sachin K Chaurasiya

6/24/2025 · 5 min read

The creative world is shifting rapidly from 2D visuals to immersive 3D storytelling. Among the tools driving this revolution are Luma AI and LeiaPix—both offering innovative ways to convert static images or 3D assets into dynamic videos. But while both are built to serve the same goal, their approach, audience, and underlying tech make them vastly different.

This in-depth comparison helps you decide which AI tool—Luma AI or LeiaPix—is better suited to your needs, whether you're an indie creator, marketer, filmmaker, or developer exploring next-gen visual content.

Core Technology: Neural Rendering vs Lightfield Transformation

Luma AI—Neural 3D Generation & Rendering

Luma AI uses Neural Radiance Fields (NeRFs), an AI technique that reconstructs real-world 3D scenes from standard video captures. The approach models not only object geometry but also how light interacts with surfaces, which is what makes the renderings look so realistic.

AI Foundation: NeRF + Gaussian Splatting (beta features)

Input: Regular smartphone video (360° or orbital)

Output: 3D mesh or cinematic 3D video

Best For: Product shots, digital twins, AR/VR content

Underlying AI Architecture

NeRF (Neural Radiance Fields): Luma reconstructs full volumetric scenes by training neural networks to predict color and density at every 3D coordinate given a viewing direction.

Sparse View NeRF: Optimized for mobile devices with minimal input data. Luma’s proprietary variant can reconstruct high-fidelity 3D from fewer than 30 frames.

Gaussian Splatting Integration (Beta): Offers a faster rendering alternative by projecting 3D Gaussians instead of ray marching. This technique allows real-time scene visualization with fewer resources and photoreal transitions.
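To make the "color and density at every 3D coordinate" idea concrete, here is a minimal sketch of the standard NeRF compositing step for a single ray. It assumes a trained network has already returned a density and a color for each sample along the ray; the function name `composite_ray` and its inputs are illustrative and are not taken from Luma's code.

```python
import math

def composite_ray(densities, colors, deltas):
    """Blend per-sample colors along one ray, NeRF-style.

    densities: predicted volume density at each sample, ordered near to far
    colors:    predicted (r, g, b) tuple at each sample
    deltas:    distance covered by each sample along the ray
    Returns the pixel color and the light that passed through every sample.
    """
    transmittance = 1.0  # fraction of light still unblocked on its way to the camera
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha
    return tuple(pixel), transmittance

# A fully opaque red sample in front hides the green sample behind it.
pixel, leftover = composite_ray(
    densities=[1e6, 1.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[0.1, 0.1],
)
print(pixel, leftover)
```

Ray marching repeats this loop for every pixel, which is why the Gaussian splatting alternative, which projects primitives onto the screen instead, can be cheaper to render.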

LeiaPix—Lightfield-to-Depth Motion Conversion

LeiaPix, from Leia Inc., excels at converting 2D images into animated 3D depth motion images using a proprietary Lightfield algorithm and AI-driven depth estimation.

AI Foundation: Depth map extraction + parallax animation

Input: Single 2D photo or image

Output: Animated depth video (3D motion) or lightfield formats

Best For: Posters, portraits, album art, web visuals

Underlying AI Architecture

DepthNet: LeiaPix uses a CNN trained on synthetic lightfield datasets to predict layered depth from monocular inputs.

Layered Depth Parallax Animation: The system generates depth maps and breaks them into pseudo-3D planes, then applies multi-axis parallax motion using camera keyframes and Bézier curves.

Temporal Stabilization: Uses frame-wise temporal coherence to prevent flicker in animated exports, which is crucial when generating smooth GIFs or MP4s.
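The layered parallax idea can be sketched in a few lines. This is a toy illustration under stated assumptions, not LeiaPix's actual algorithm: the depth map is assumed to hold values in [0, 1] with 1 nearest to the camera, images are plain nested lists, and the names `split_into_layers` and `render_parallax_frame` are hypothetical.

```python
def split_into_layers(depth, num_layers):
    """Assign each pixel to a depth plane: 0 is farthest, num_layers - 1 is nearest."""
    return [[min(int(d * num_layers), num_layers - 1) for d in row] for row in depth]

def render_parallax_frame(image, depth, camera_x, num_layers=4, max_shift=2):
    """Shift each plane sideways in proportion to its nearness, then composite far to near.

    camera_x is the horizontal camera offset in [-1, 1]; num_layers must be at least 2.
    """
    height, width = len(image), len(image[0])
    layers = split_into_layers(depth, num_layers)
    frame = [row[:] for row in image]  # start from the source so uncovered gaps keep a pixel
    for layer in range(num_layers):    # far planes first, so near planes overwrite them
        shift = round(camera_x * max_shift * layer / (num_layers - 1))
        for y in range(height):
            for x in range(width):
                if layers[y][x] == layer:
                    target_x = x + shift
                    if 0 <= target_x < width:
                        frame[y][target_x] = image[y][x]
    return frame

# One row of pixels; only the middle pixel is close to the camera.
image = [[10, 20, 30, 40, 50]]
depth = [[0.0, 0.0, 1.0, 0.0, 0.0]]
print(render_parallax_frame(image, depth, camera_x=1.0))
```

Rendering this function for a sequence of `camera_x` values gives the familiar "wiggle" animation. Production systems also inpaint the gaps that open up behind shifted foreground pixels, which this sketch does not attempt.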

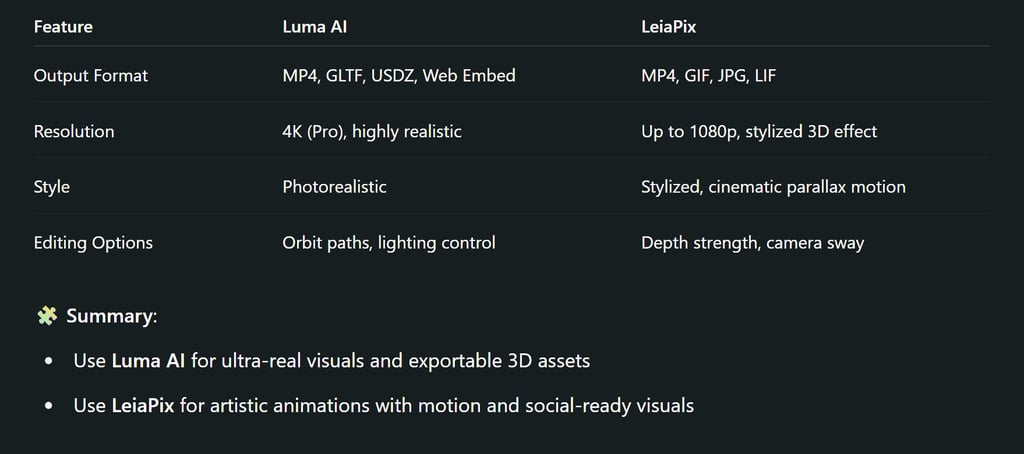

Summary

Luma AI is better for 3D scans and spatially accurate models

LeiaPix excels in fast 2D-to-3D conversions for visuals with motion depth

Workflow & Ease of Use

Luma AI Workflow

Capture video in-app (or upload a 360° shot)

AI reconstructs the 3D scene

Render as a video flythrough or interactive object

Pros: Realistic 3D scenes; suitable for filmmakers, product designers

Cons: Has a learning curve and requires decent video capture

LeiaPix Workflow

Upload a single image (JPG/PNG)

AI auto-generates depth + motion layers

Export as MP4, GIF, or Leia’s .LIF lightfield format

Pros: Instant results; extremely beginner-friendly

Cons: Less depth accuracy; limited 3D realism

Use Cases & Ideal Users

Luma AI Is Perfect For

Product designers and marketers

Game developers needing real 3D assets

Filmmakers wanting realistic object flythroughs

AR/VR environments and digital twins

LeiaPix Is Ideal For

Artists, photographers, and meme creators

Album covers, motion portraits, NFT-style visualizations

Marketers who want scroll-stopping social content

Anyone without 3D or video experience

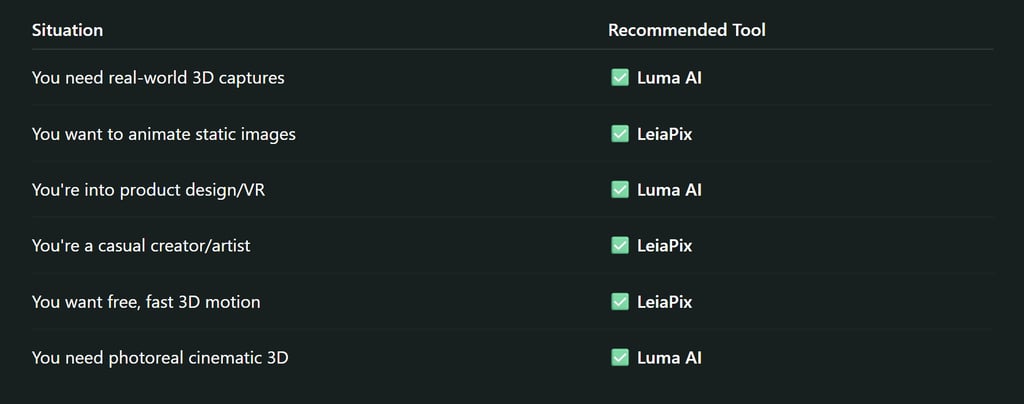

Summary

Luma AI suits professionals creating for 3D pipelines

LeiaPix suits casual creators and visual artists

Pricing & Accessibility

Luma AI

Free plan: Limited exports

Pro plan: Higher resolution, commercial use, faster rendering

Access: Mobile App (iOS, Android beta), Web

LeiaPix

Completely free

Export limits: None (as of now)

Access: Web-based; no install needed

Future Direction & Innovation

Luma AI's Roadmap

Expanded NeRF tools for web apps

Gaussian Splatting for smoother scene blending

Pro integration with platforms like Blender and Unreal Engine

LeiaPix's Vision

Lightfield display ecosystem (e.g., Leia Lume Pad)

Integration with mobile AR apps

AI stylization layers for creative motion rendering

Dataset & Training Techniques

Luma AI

Trained on high-diversity real-world capture datasets using Structure-from-Motion (SfM) pre-processing.

Employs Active Sampling: Prioritizes view angles with higher error during training to reduce convergence time.

Uses HDR lighting simulations and material reflectance modeling to capture photoreal scenes.
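As a rough illustration of the active sampling idea described above, the sketch below picks training views with probability proportional to their current reconstruction error. The function names and the error values are assumptions made for the example; the source does not describe how Luma actually weights its views.

```python
import random

def sampling_weights(view_errors):
    """Turn per-view errors into sampling probabilities that sum to 1."""
    total = sum(view_errors.values())
    if total == 0:
        # No error signal yet: fall back to uniform sampling.
        return {view: 1.0 / len(view_errors) for view in view_errors}
    return {view: error / total for view, error in view_errors.items()}

def pick_views(view_errors, k, rng=None):
    """Draw k training views, favoring the ones the model currently renders worst."""
    rng = rng or random.Random()
    weights = sampling_weights(view_errors)
    views = list(weights)
    return rng.choices(views, weights=[weights[v] for v in views], k=k)

# Hypothetical per-view errors after a few training steps.
errors = {"front": 1.0, "back": 3.0}
print(sampling_weights(errors))
print(pick_views(errors, k=5, rng=random.Random(0)))
```

Because poorly reconstructed views are visited more often, the optimizer spends less time on angles it already renders well, which is the intuition behind the reduced convergence time.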

LeiaPix

Trained on synthetic lightfield datasets and stereo image pairs for high-quality depth inference.

Applies context-aware masking: Removes problematic regions like transparent objects or hair strands to avoid visual artifacts.

Supports style-transfer pre-processing to improve depth quality for artistic portraits.

Rendering Pipeline

Luma AI

Ray sampling → Neural radiance inference → Alpha compositing → Tonemapping

Uses mipmapping and adaptive sampling in the Gaussian splatting variant to ensure smoother rendering under dynamic lighting.

Optionally outputs in USDZ and glTF formats with animation paths for XR/VR engines.
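The last stage of that pipeline, tonemapping, compresses the unbounded light values a renderer produces into the 0 to 255 range a display expects. The source does not say which operator Luma uses, so the sketch below assumes the simple Reinhard curve followed by gamma encoding, purely as an illustration.

```python
def tonemap(hdr_rgb, gamma=2.2):
    """Map linear HDR channel values (0 and up) to 8-bit display values."""
    out = []
    for value in hdr_rgb:
        value = max(value, 0.0)                 # guard against negative radiance
        compressed = value / (1.0 + value)      # Reinhard: squeezes [0, inf) into [0, 1)
        encoded = compressed ** (1.0 / gamma)   # gamma-encode for display
        out.append(round(encoded * 255))
    return tuple(out)

# Dim, mid, and very bright channels all land inside the displayable range.
print(tonemap((0.1, 1.0, 100.0)))
```

Very bright highlights approach but never exceed 255, so detail is kept in both shadows and highlights instead of clipping.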

LeiaPix

2D Input → Depth Estimation → Scene Decomposition → Multi-plane Projection → Animation Curves

The output is optimized for Leia’s Lume Pad (lightfield tablet), which renders multiview stereo frames in real-time.

Compression, Performance & Latency

Luma AI

Pre-baked mesh simplification reduces model size up to 90% without major fidelity loss.

Rendering latency depends on compute resources (e.g., reconstruction on an M1 chip is cited as 1.5x faster than on standard GPUs).

Supports on-device inference with edge AI optimizations for iOS.

LeiaPix

Inference is web-based, so all depth estimation and animation rendering happen on cloud GPUs.

Uses keyframe caching and frame interpolation to reduce animation time from ~12s to ~3s.

Final exports are compressed using temporal motion-aware codecs, preserving parallax depth while minimizing artifacts.
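Keyframe caching plus frame interpolation can be pictured as rendering only a few frames in full and blending the ones in between. The sketch below uses plain linear blending on flat lists of pixel values; the function name and the choice of linear blending are assumptions for illustration, not a description of LeiaPix's exporter.

```python
def interpolate_frames(keyframes, steps_between):
    """Fill in `steps_between` blended frames between each pair of rendered keyframes.

    keyframes: list of frames, each a flat list of pixel values of equal length
    """
    if not keyframes:
        return []
    frames = []
    for start, end in zip(keyframes, keyframes[1:]):
        for step in range(steps_between + 1):
            t = step / (steps_between + 1)  # 0 at the start keyframe, approaching 1
            frames.append([(1 - t) * a + t * b for a, b in zip(start, end)])
    frames.append(list(keyframes[-1]))      # close the sequence on the final keyframe
    return frames

# Two rendered keyframes with three blended frames in between.
for frame in interpolate_frames([[0.0, 0.0], [4.0, 8.0]], steps_between=3):
    print(frame)
```

With three blended frames per rendered keyframe, roughly one frame in four is rendered in full. That is the same ratio as the ~12s to ~3s figure above, although the source does not say how the saving is actually split between caching and interpolation.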

Advanced Creative Controls & SDKs

Luma AI

Developers can control camera motion paths with Bézier curve keyframes.

Built-in API for ARKit/ARCore to place reconstructed 3D assets directly in AR scenes.

Blender plugin in development for seamless 3D artist workflows.
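For readers unfamiliar with Bézier keyframes, the sketch below evaluates a cubic Bézier curve to turn four control points into a smooth camera path. It is a generic implementation of the curve formula; the function names and control points are made up for the example and do not reflect Luma's actual API.

```python
def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic Bézier curve at t in [0, 1]; points are (x, y, z) tuples."""
    u = 1.0 - t
    return tuple(
        u**3 * a + 3 * u**2 * t * b + 3 * u * t**2 * c + t**3 * d
        for a, b, c, d in zip(p0, p1, p2, p3)
    )

def camera_path(p0, p1, p2, p3, num_frames):
    """Sample the curve once per frame (num_frames must be at least 2)."""
    return [
        cubic_bezier(p0, p1, p2, p3, i / (num_frames - 1))
        for i in range(num_frames)
    ]

# The camera starts at p0, ends at p3, and is pulled toward p1 and p2 on the way.
for position in camera_path((0, 0, 0), (0, 1, 0), (1, 1, 0), (1, 0, 0), num_frames=5):
    print(position)
```

The two middle control points are never reached by the camera; they only bend the path, which is what makes the motion ease in and out rather than move in straight segments.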

LeiaPix

Allows custom motion scripting via XML metadata injection (experimental).

In development: integration with Stable Diffusion-style depth hallucination, allowing users to create 3D motion from AI-generated 2D art.

Future Innovation & Ecosystem Integration

Luma AI

Heading toward multi-object scene synthesis, where multiple NeRFs interact within a unified spatial timeline.

May implement View Synthesis GANs to generate missing viewpoints for complex occlusions.

Goal: Seamless VR/AR asset pipeline for WebXR and Unity/Unreal.

LeiaPix

Working on a “Depth-as-a-Service” API, allowing any app or platform to convert static images into motion visuals via REST calls.

Lightfield integration with holographic displays, including potential automotive HUDs and 3D signage.

Plans for “LeiaMix”, which would combine multiple depth animations into short-form vertical storytelling (ideal for reels, shorts, and ads).

Both Luma AI and LeiaPix are redefining what's possible in 3D-to-video workflows—but they do so from very different angles. If you're aiming for realism and control over spatial scenes, Luma AI will empower you like never before. But if you're looking for a fast, artistic way to bring depth and motion to your static images, LeiaPix is pure magic—no experience required.

All © Copyright reserved by Accessible-Learning Hub
