Kling AI vs Viggle AI: Which AI Video Tool Delivers Better Results for Creators?

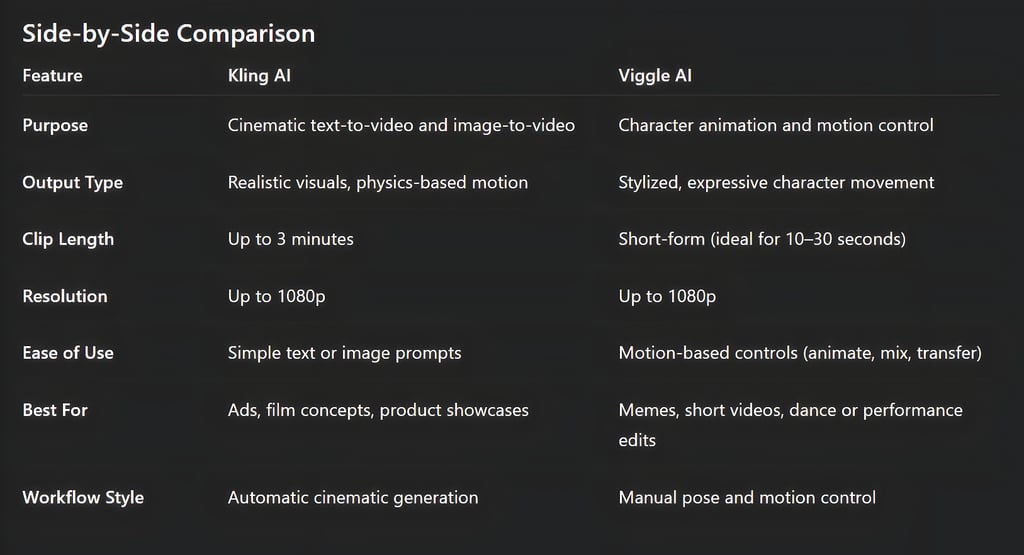

Kling AI and Viggle AI are two of the most talked-about AI video generators of 2025. Kling focuses on cinematic, ultra-realistic video created from text or images, while Viggle specializes in character animation, motion transfer, and expressive storytelling. This in-depth comparison covers their technology, performance, realism, workflow control, and creative potential to help you choose the right AI video tool for your artistic or professional goals.


Sachin K Chaurasiya

11/14/2025 · 9 min read

Artificial intelligence has changed how creators make videos, and two platforms leading that change are Kling AI and Viggle AI, each powerful in its own way. Kling focuses on creating realistic, cinematic videos from text or images, while Viggle is built for character animation, motion control, and viral creativity.

Let’s look at both tools in detail to see which one fits your creative needs better.

What is Kling AI?

Kling AI is a next-generation text-to-video and image-to-video tool developed by Kuaishou. It’s known for producing realistic visuals, smooth motion, and scenes that follow real-world physics. Unlike many AI video tools that produce short, rough clips, Kling’s outputs feel cinematic—like a film scene created from a prompt.

Key Strengths

Realistic motion and detail: Objects and characters move naturally, with lifelike shadows, reflections, and camera movement.

Longer clip duration: Kling generates clips of 5 or 10 seconds at a time, and its video-extension feature can lengthen a scene to about 3 minutes, which suits storytelling and ads.

High resolution: Supports up to 1080p video output.

Strong scene understanding: Responds accurately to detailed prompts about camera angles, lighting, and timing.

Kling AI is built for creators who want to produce premium visuals—product videos, cinematic scenes, and short narratives—without spending hours in video editing software.

Choose Kling AI if you want

Realistic, cinematic footage

Long-form or storytelling content

Product videos, ads, or trailers

Smooth motion and dynamic camera work

Underlying AI Architecture

Kling is built on a diffusion transformer architecture, the same model family OpenAI has described for Sora. It combines spatiotemporal diffusion with motion prediction layers, allowing the model to simulate realistic physics such as gravity, inertia, and object interaction.

What makes Kling stand out is its Temporal Consistency Engine, which ensures that lighting, shadows, and object positions remain stable frame-by-frame—critical for smooth cinematic output.

Input Processing and Prompt Understanding

Kling uses a multimodal input layer that accepts text, reference images, or partial video frames. Its semantic parser interprets camera language such as “close-up,” “handheld,” or “dolly zoom” and converts these cues into visual behavior, which is why Kling often produces film-like camera work without extra instruction.

Performance and Rendering

Kling runs on cloud-based GPU clusters and renders up to 1080p at 24–30 FPS. Through extension, a single scene can run up to 180 seconds, with temporal coherence checkpoints keeping frames stable. The rendering pipeline uses progressive refinement: it starts from a rough structure and adds visual detail step by step.
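To put those numbers in perspective, here is a quick calculation of how many frames a clip contains at the frame rates mentioned above. It is plain arithmetic (frames = seconds × frames per second), not a model of Kling's pipeline, and the helper name `frame_count` is only illustrative.

```python
def frame_count(duration_s: float, fps: int) -> int:
    """Return the number of frames in a clip of the given length and frame rate."""
    return round(duration_s * fps)

# Compare a short 10-second clip with a fully extended 180-second scene.
for seconds in (10, 180):
    for fps in (24, 30):
        print(f"{seconds:>3} s at {fps} FPS -> {frame_count(seconds, fps)} frames")
```

A 180-second scene at 30 FPS is 5,400 frames, and every one of them has to stay consistent with its neighbours, which is why temporal coherence matters more as clips get longer.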

Integration and Workflow Support

Offers API access for developers and advanced users.

Compatible with most standard video formats (MP4, MOV).

Exported clips can be brought straight into Adobe Premiere Pro, After Effects, and DaVinci Resolve.

Ideal for production pipelines that require quick AI-assisted footage before final compositing.

Creative Control and Fine-Tuning

Kling offers prompt modifiers for lens type, lighting quality, and cinematic tone (for example, “shot on 35mm lens, golden hour, handheld tracking”). You can also fine-tune frame rate, motion intensity, and even narrative pacing. Newer versions include scene continuation, which extends an existing video without breaking motion continuity.
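If you generate many variations, it can help to assemble prompts from named pieces instead of retyping them. The sketch below is a hypothetical helper (`build_prompt` is not part of any Kling tool or API); it simply joins a subject with optional modifiers like the ones listed above into one comma-separated prompt you can paste into Kling.

```python
def build_prompt(subject: str, camera: str = "", lens: str = "",
                 lighting: str = "", motion: str = "") -> str:
    """Join a subject and optional cinematic modifiers into one prompt string.

    Empty modifiers are skipped so the prompt never contains stray commas.
    """
    parts = [subject, camera, lens, lighting, motion]
    return ", ".join(p.strip() for p in parts if p.strip())

prompt = build_prompt(
    subject="a cyclist crossing a rain-soaked bridge",
    camera="handheld tracking shot",
    lens="shot on 35mm lens",
    lighting="golden hour",
    motion="slow motion",
)
print(prompt)
# a cyclist crossing a rain-soaked bridge, handheld tracking shot, shot on 35mm lens, golden hour, slow motion
```

Keeping the modifiers as separate fields makes it easy to change one variable at a time (say, lighting) and compare the results.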

AI Ethics and Data Training

Kling is trained on large-scale licensed video datasets and synthetic motion data. Content-filtering layers prevent the generation of harmful or explicit material, and context-weighting prioritizes realism while suppressing exaggerated or unsafe actions.

Community and Ecosystem Growth

The platform is expanding into a professional creative ecosystem. Studios and advertising agencies are starting to integrate Kling for pre-visualization and commercial production. Its cinematic strength and timeline expansion tools make it appealing for short films and marketing videos.

System Requirements and Accessibility

Kling is primarily cloud-based. Rendering happens on remote GPUs, so local hardware does not limit output quality, but you need a stable internet connection to upload inputs and download results. It works through web browsers and mobile apps.

Future Development Direction

Kling is moving toward full-scene continuity and AI storyboarding, letting creators link multiple shots into coherent mini-films. Future updates are expected to bring 4K rendering, multi-character scenes, and voice synchronization.

What is Viggle AI?

Viggle AI is designed for fun, fast, and highly controlled character animation. It lets users animate static images, transfer motion from one video to another, and remix characters into dynamic short clips. It runs on a physics-aware 3D video model that keeps movements natural and expressive.

Key Strengths

Character animation: Turn any image into an animated character with realistic motion.

Motion transfer: Apply movements from one clip to another seamlessly.

Creative freedom: Perfect for memes, dance videos, and short-form content.

Fast and interactive workflow: Create directly through the platform, or generate clips with commands in Discord.

Viggle AI is built for social media creators who want quick, viral-ready animations with character-focused storytelling.

Choose Viggle AI if you want

Quick social content and character animations

Meme-style or dance videos

Control over pose and motion

Fast creative turnaround

Underlying AI Architecture

Viggle uses a video-based 3D generation model (JST-1) optimized for pose understanding. It processes motion in vectorized skeleton form, allowing you to remap or modify body movements precisely. The model employs keypoint tracking and depth mapping, which makes animation physics-aware (feet stay on the ground, hands move with believable weight).
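To make the idea of a skeleton-based, physics-aware constraint concrete, here is a toy sketch. It is not Viggle's JST-1 code: it assumes a pose is a dictionary of 2D keypoints with y pointing up and the ground at y = 0, and the joint names are hypothetical. Whenever a foot sinks below the ground, it lifts the whole skeleton, which is one simple way to keep feet planted.

```python
Pose = dict[str, tuple[float, float]]  # joint name -> (x, y), y points up

FOOT_JOINTS = ("left_ankle", "right_ankle")  # hypothetical joint names

def enforce_ground_contact(pose: Pose, ground_y: float = 0.0) -> Pose:
    """Shift the whole skeleton up if any foot has sunk below the ground plane."""
    lowest_foot = min(pose[j][1] for j in FOOT_JOINTS)
    if lowest_foot >= ground_y:
        return dict(pose)  # feet already on or above the ground: leave the pose as is
    lift = ground_y - lowest_foot
    # Move every joint by the same amount so body proportions are preserved.
    return {joint: (x, y + lift) for joint, (x, y) in pose.items()}

sunk = {"hip": (0.0, 0.9), "left_ankle": (-0.1, -0.05), "right_ankle": (0.1, 0.0)}
print(enforce_ground_contact(sunk))
```

A real model handles contact in 3D and learns it from data rather than applying a single translation; the sketch only shows what a ground-contact constraint means in keypoint terms.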

This architecture is better suited for human-centric motion editing and rapid transformation of static images into living characters.

Input Processing and Prompt Understanding

Viggle relies on motion-conditioned input: you provide a base image or video plus a “motion reference.” The system extracts pose keyframes from the reference and maps them onto your subject, producing precise, controlled animation that matches your chosen style. It also uses latent motion interpolation, so transitions between movements feel fluid.
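The pose-mapping and interpolation steps described above can be illustrated with a simplified sketch. This is a stand-in under stated assumptions, not Viggle's method: it retargets 2D keypoints by scaling them about the hip to fit the subject's size, and it interpolates linearly between keyframes in keypoint space, whereas Viggle's interpolation is described as happening in a latent space. All function and joint names are hypothetical.

```python
Pose = dict[str, tuple[float, float]]  # joint name -> (x, y)

def retarget(pose: Pose, scale: float, root: str = "hip") -> Pose:
    """Scale a reference pose about its root joint to fit a larger or smaller subject."""
    rx, ry = pose[root]
    return {j: (rx + (x - rx) * scale, ry + (y - ry) * scale) for j, (x, y) in pose.items()}

def lerp_pose(a: Pose, b: Pose, t: float) -> Pose:
    """Blend two poses joint by joint; t=0 gives a, t=1 gives b."""
    return {j: (a[j][0] + (b[j][0] - a[j][0]) * t,
                a[j][1] + (b[j][1] - a[j][1]) * t) for j in a}

def pose_at(keyframes: list[tuple[int, Pose]], frame: int) -> Pose:
    """Return the pose at any frame, given keyframes sorted by frame index."""
    if frame <= keyframes[0][0]:
        return keyframes[0][1]
    for (f0, p0), (f1, p1) in zip(keyframes, keyframes[1:]):
        if f0 <= frame <= f1:
            return lerp_pose(p0, p1, (frame - f0) / (f1 - f0))
    return keyframes[-1][1]  # past the last keyframe: hold the final pose

# Two keyframes from a motion reference: arm down at frame 0, arm raised at frame 10.
ref = [
    (0, {"hip": (0.0, 1.0), "hand": (0.3, 1.0)}),
    (10, {"hip": (0.0, 1.0), "hand": (0.3, 1.6)}),
]
# Map onto a subject 1.5x the reference size, then sample the in-between frame 5.
subject = [(f, retarget(p, scale=1.5)) for f, p in ref]
print(pose_at(subject, 5))
```

Linear blending is the simplest choice; smoother results usually come from easing curves or spline interpolation between keyframes.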

Performance and Rendering

Viggle generates short sequences (typically 4–15 seconds) with very low latency. It uses motion vector caching, so reusing a pose or motion pattern renders significantly faster. Its Live feature offers real-time preview, ideal for creators who want to adjust animation timing on the fly.

Integration and Workflow Support

Integrates natively with Discord for command-based animation creation (for example, /animate and /mix).

Exports in ready-to-share social media formats.

Supports motion reuse, meaning you can build a library of custom movement styles and apply them to multiple projects.

Also being integrated into mobile and web-based animation dashboards, allowing easy access for creators without technical expertise.

Creative Control and Fine-Tuning

Viggle provides pose editing panels where you can manually adjust character balance or limb positions before generating the animation. Advanced users can use the motion editor to adjust trajectory curves, easing, and tempo. This gives more artistic control over a character’s personality and rhythm than most text-to-video tools offer.
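Easing controls how a movement accelerates and decelerates between two poses. As a generic illustration (standard animation formulas, not Viggle's motion editor), the sketch compares linear timing with a cubic ease-in-out curve; `tempo` is a hypothetical multiplier that compresses or stretches the move.

```python
def ease_in_out_cubic(t: float) -> float:
    """Slow start, fast middle, slow end; maps 0..1 to 0..1."""
    if t < 0.5:
        return 4 * t ** 3
    return 1 - (-2 * t + 2) ** 3 / 2

def sample_progress(frames: int, tempo: float = 1.0, eased: bool = True) -> list[float]:
    """Progress (0..1) of a move at each frame; tempo > 1 makes the move shorter."""
    n = max(2, round(frames / tempo))  # keep at least a start and an end frame
    out = []
    for i in range(n):
        t = i / (n - 1)
        out.append(ease_in_out_cubic(t) if eased else t)
    return out

print([round(p, 2) for p in sample_progress(5, eased=False)])
print([round(p, 2) for p in sample_progress(5)])
print(len(sample_progress(12, tempo=2.0)))  # double tempo: half as many frames
```

With easing, the early and late frames change less than in linear timing, which is what makes a gesture read as having weight rather than moving at a constant, robotic speed.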

AI Ethics and Data Training

Viggle trains its animation model on motion datasets and open 3D movement libraries. Its character-based approach keeps poses realistic and avoids unnatural deformation. Because it is built for memes and creative animation, it allows broader stylistic freedom, but within defined physics constraints.

Community and Ecosystem Growth

Viggle’s growth is fueled by its community-driven content. Its Discord ecosystem allows instant sharing of motion references, trends, and remixed projects. The platform has quickly become popular among YouTube creators, meme editors, and VTubers for its ability to turn still images into lively characters within seconds.

System Requirements and Accessibility

Viggle is accessible via web, mobile, and Discord. The desktop interface is lightweight because most computation happens in the cloud, and rendering is optimized for speed rather than heavy realism, so users with average devices can generate animations easily.

Future Development Direction

Viggle is expanding into interactive animation, where users can manipulate poses directly using real-time body tracking or webcam-based motion capture. Upcoming releases may include custom 3D rig exports and style adaptation, letting you mimic the animation styles of different artists or studios.

Performance and Realism

Kling AI focuses on physics and realism. Movements feel natural—hair sways, water ripples, and objects cast consistent shadows. It’s the go-to option if you want realistic storytelling and smooth camera work.

Viggle AI focuses on expressive motion. It’s more about bringing static characters or drawings to life with human-like movements and energy. Perfect for storytelling through emotion, expression, and rhythm.

Duration, Speed, and Efficiency

Kling AI handles longer scenes, making it better suited for creators producing ads, narrative videos, or visual experiments.

Viggle AI excels in quick turnaround—ideal for making multiple short clips or social content in minutes.

Workflow and Control

Kling AI: You provide a detailed text prompt or reference image, and the AI generates the entire sequence. The more descriptive your prompt, the better the scene. It’s like writing a mini film script and watching it come to life.

Viggle AI: You can animate characters using pose control, motion transfer, and blending tools. It’s hands-on, giving creators the freedom to fine-tune each move.

Pricing Overview

Kling AI offers free and paid versions depending on your platform or region. Its free plan is enough for basic video creation, but paid tiers unlock faster rendering and higher quality.

Viggle AI uses a credit-based system with Free, Pro, and Live plans. Higher tiers offer watermark-free exports, more credits, and access to real-time generation tools.

Tips for Better Results

Write precise prompts: Include camera direction, lighting, and movement details. Example: “Close-up of a girl walking through rain, cinematic lighting, slow-motion.”

Use reference images: Helps the AI understand the visual style or mood you want.

Iterate often: Generate short previews, refine the details, then produce the final output.

Focus on story flow: Even short clips benefit from a beginning, middle, and end.

If you want cinematic realism, go with Kling AI. It’s perfect for film-style storytelling, advertising, and high-quality visuals.

If you want dynamic character animation, choose Viggle AI. It’s ideal for social media creators who love crafting expressive, funny, or emotional short videos.

In truth, both tools complement each other. Many creators use Kling for polished hero shots and Viggle for energetic, viral moments. The best choice depends on your content style and how much control you want over motion and storytelling.

FAQs

Q: What is the main difference between Kling AI and Viggle AI?

The key difference is in their purpose. Kling AI is a text-to-video and image-to-video platform built for cinematic, realistic visuals, while Viggle AI focuses on character animation and motion control. Kling is best for storytelling and cinematic content, while Viggle is ideal for memes, dances, and social animations.

Q: Can Kling AI generate videos from text prompts only?

Yes. Kling AI can generate full videos from a single text prompt or a reference image. It automatically interprets your input, builds scenes, simulates motion, and applies lighting and camera movement, resulting in film-like outputs without manual animation.

Q: Does Viggle AI require any animation skills?

No, it doesn’t. Viggle AI uses AI-driven pose and motion mapping, so you don’t need animation experience. You simply upload a character image or choose a motion reference, and Viggle handles the rest—creating smooth, physics-aware animation automatically.

Q: How long can videos be created with Kling AI?

Kling AI supports longer video outputs: individual generations are 5 or 10 seconds, and its extension feature can build a scene of up to about 3 minutes. This makes it one of the few AI tools capable of handling narrative-style or advertisement-length clips, not just short previews or GIF-like loops.

Q: What’s the maximum quality output of these tools?

Both tools support 1080p high-definition output, although Viggle reserves 1080p export for paid plans. Kling prioritizes cinematic sharpness and environmental realism, while Viggle focuses on smooth, believable motion in animated characters.

Q: Can I animate a photo using Viggle AI?

Yes. Viggle allows you to animate still images directly. It maps your character’s body structure and applies natural movement, making it possible to turn drawings, photos, or art into living, moving clips with expressive gestures.

Q: Does Kling AI support multiple scenes or storytelling continuity?

Kling AI’s latest versions support scene continuation, allowing you to extend or link clips seamlessly. This feature helps in creating short stories, cinematic sequences, or ad-style narratives with consistent tone and lighting.

Q: How fast are video generations on both platforms?

Kling AI: Takes longer to render due to its higher realism and longer duration, but the quality payoff is worth it for professional projects.

Viggle AI: Much faster, optimized for short-form animations, and can deliver outputs within a minute depending on complexity and plan type.

Q: Which one is better for social media creators?

Viggle AI is more suited for social content, memes, and character-driven videos because of its quick generation, pose control, and trend-friendly features. Kling AI, on the other hand, is more suitable for cinematic storytelling or brand advertising.

Q: Can I use Kling AI and Viggle AI together?

Absolutely. Many creators use both in their workflow—Kling AI for generating environments or cinematic scenes and Viggle AI for animating characters. You can merge their outputs in a video editor like Premiere Pro or CapCut for a hybrid professional result.

Q: Are these tools free to use?

Both offer free versions:

Kling AI provides basic access for text-to-video generation with limited features.

Viggle AI offers free credits for standard animation but requires a paid plan for advanced features, watermark removal, and 1080p export.

Q: Can I use videos made with these tools commercially?

Yes, but you must review their terms of use before publishing commercially. Kling and Viggle allow commercial use under certain conditions, depending on your plan and generated content. Always check licensing details in their latest user policies.

Q: Which tool provides better physics realism?

Kling AI handles environmental and physical realism—gravity, water flow, camera movement, and light behavior—more naturally. Viggle AI, however, focuses on human motion realism, ensuring body balance, limb coordination, and physical poses look natural during animation.

Q: Does either tool support voice or lip-syncing?

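Kling offers a lip-sync feature that matches a character’s mouth movements to uploaded audio or text-to-speech, but full voice synchronization is not yet a core feature of either tool; Viggle in particular focuses on body motion rather than speech. Future updates are expected to bring deeper voice and facial sync features, allowing direct dialogue or lip animation with pre-recorded audio.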

Q: Which AI model is more suitable for professional filmmakers?

Kling AI is more aligned with filmmakers and advertising professionals due to its high-fidelity visuals, cinematic framing, and realistic motion. Viggle AI is better suited for digital artists, animators, and meme creators who prioritize expression and engagement over realism.


© Accessible-Learning Hub. All rights reserved.

