Is Sora the Future of Filmmaking? What We Know So Far!

Explore how OpenAI's Sora is reshaping the future of filmmaking with AI-generated videos. Discover its features, limitations, upcoming updates, and how it's empowering creators, directors, and storytellers with revolutionary visual storytelling tools.


Sachin K Chaurasiya

6/9/2025 | 4 min read

The fusion of artificial intelligence with creativity has always sparked debate, excitement, and a fair share of skepticism. In 2024, OpenAI introduced Sora, a groundbreaking AI video generation model that can turn simple text prompts into high-quality, photorealistic video clips. But beyond the tech demo allure and cinematic quality, a bigger question looms: Is Sora the future of filmmaking?

Let’s explore what makes Sora revolutionary, where it currently stands, its real-world limitations, and what the future might hold for AI-powered video generation in cinema.

What is Sora? A New Chapter in AI Video

Sora is OpenAI’s most advanced text-to-video model yet. It can generate video sequences up to one minute long with stunning realism and coherence — not just in visuals, but also in physics, depth, and temporal logic.

Sora understands prompts such as:

"A cinematic shot of a futuristic Tokyo street, neon-lit, with heavy rain and people walking under umbrellas."

The result? A short film clip that looks like it was crafted by a digital artist or visual effects (VFX) studio, complete with lighting, animation, and motion consistency.

Key Features of Sora That Filmmakers Are Eyeing

Text-to-Video Simplicity

Users only need to input a natural language prompt: no code, no cameras. This opens up video creation to writers, marketers, educators, and filmmakers alike.

High Resolution and Realism

Sora generates 1080p video with accurate reflections, human movements, and environments that obey physics. It’s not just AI art; it’s visual storytelling.

Multiple Angles & Scene Dynamics

The model is capable of understanding camera motions, changes in perspective, and object permanence, offering directors a way to visualize complex scenes in seconds.

Integration Potential

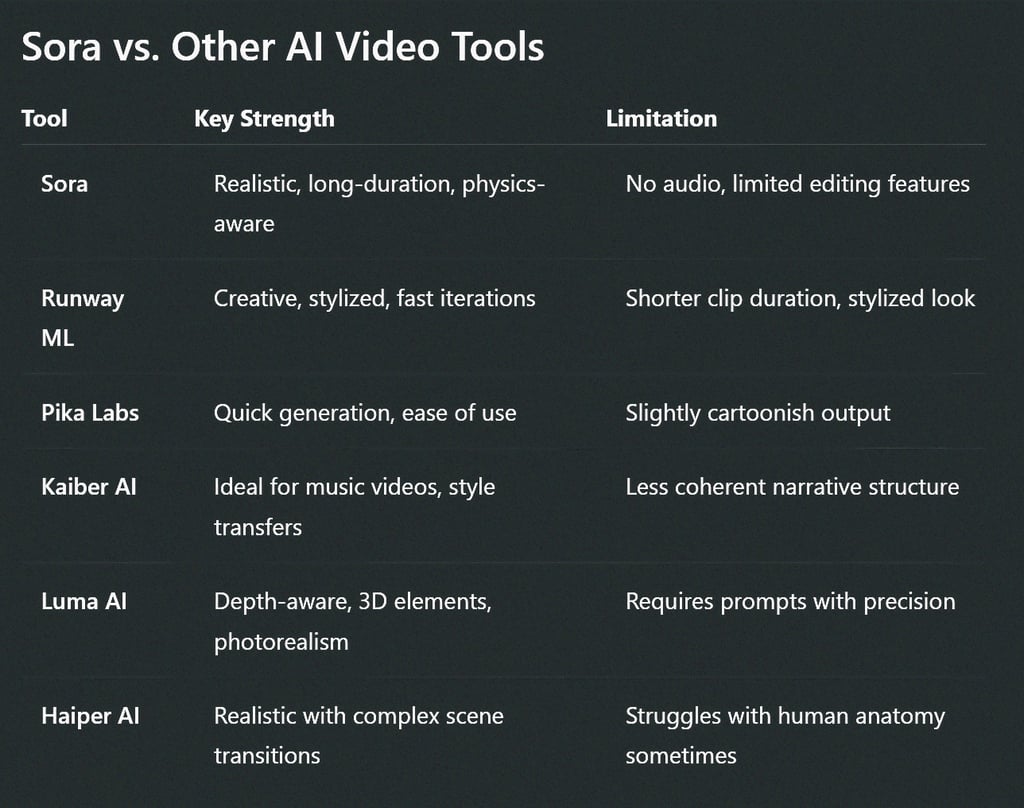

Sora can be used alongside tools like Runway, Pika Labs, Kaiber AI, Luma Dream Machine, and Adobe Premiere, integrating AI-generated assets into full productions.

How Sora Could Transform Filmmaking

1. Previsualization & Concept Testing

Directors can use Sora to mock up scenes before committing to expensive shoots. Think of it as a storyboard that moves — rich in detail and flexibility.

2. Low-Budget Filmmaking Revolution

Independent filmmakers without studio budgets can craft visual narratives, trailers, or music videos without expensive VFX teams or physical sets.

3. AI-Enhanced Creativity

Sora doesn’t replace human imagination; it amplifies it. Screenwriters can see their ideas play out on screen shortly after writing them, animators can build references, and editors can visualize transitions or moods.

4. Real-Time Feedback Loops

Want to test alternate endings or scene dynamics? Sora can create multiple variants for audience testing or internal iteration, saving both time and cost.

Current Limitations of Sora

While impressive, Sora isn’t quite ready to direct an entire feature film. Some key challenges include:

Audio is Missing: As of now, Sora only generates video. Dialogue, sound effects, and scoring must be added manually or through other AI tools like ElevenLabs or Soundraw.

Narrative Control is Limited: Long-form storytelling with consistent characters, continuity, and emotional arcs is still far from reliable.

Uncanny Valley Risks: Human faces and expressions can sometimes appear slightly off or surreal in motion.

Ethical and Copyright Concerns: What happens when you mimic real people or copyrighted styles? These debates are still unfolding.

What’s Coming Next: Sora's Evolving Capabilities

1. Multimodal Integration (Video + Audio)

Insider previews suggest that OpenAI may soon pair Sora with its audio models, such as Jukebox for music generation and Whisper for speech recognition (useful for transcripts and captions rather than for generating sound). This could allow future versions to support dialogue, soundtracks, and synced sound effects.

2. Character & Emotion Continuity

OpenAI is actively researching ways to bring consistent characters with facial expression tracking and emotional arcs to generated videos — making episodic content or mini-films more feasible.

3. Longer Duration Videos

Sora currently supports up to one-minute clips, but expansion to longer durations is in development. This leap will be crucial for short films, commercials, and serialized storytelling.

4. Interactive Prompt Refinement

Future updates are expected to include frame-level editing and prompt chaining, allowing creators to refine specific sections of a video without regenerating the entire clip.

5. Public Access and Creator Tools

Sora's rollout has been gradual. OpenAI plans to keep opening the tool to a wider audience with safety features and monetization models, empowering indie creators and content studios.

So, Is Sora the Future of Filmmaking?

Yes — but not alone.

Sora is poised to be a game-changer in visual storytelling, especially in areas like pre-production, concept development, and short-form video. However, human creativity, storytelling, acting, and sound design remain essential. Sora is not a filmmaker — it’s a filmmaking assistant, with vast potential but clear boundaries (for now).

As Sora evolves, we may see hybrid workflows where AI handles visual drafts and humans refine the emotional and narrative arcs. This synergy could redefine how stories are imagined, shared, and experienced.

FAQs

Is Sora publicly available now?

Yes, with restrictions. After an initial period of private testing with select partners, researchers, and creatives, OpenAI released Sora to ChatGPT Plus and Pro subscribers in December 2024. Availability still varies by region and plan.

Can Sora generate both video & audio?

Not yet. Sora only creates silent videos. For audio, users must integrate tools like ElevenLabs for voice, Soundraw or Mubert for music, and Adobe AI tools for sound effects.

How long can a video created with Sora be?

OpenAI has demonstrated Sora videos up to 60 seconds long with dynamic scenes and camera movement. The limit available to a given user can be shorter and depends on the plan. Longer durations are expected in future updates.

Will Sora support character consistency across scenes?

OpenAI is actively researching this. Maintaining consistent characters across multiple shots or scenes is a challenge, but character memory and identity tagging are being explored for future releases.

Is Sora better than Runway or Pika Labs for filmmakers?

Sora excels in realism, depth, and physics-aware rendering. However, Runway and Pika Labs are still favored for speed, animation styles, and easier access. Each tool serves different creative purposes.

Can I use Sora content commercially?

Once access is granted, you may use Sora-generated content commercially, but you must adhere to OpenAI’s content policies, which include safety, moderation, and licensing rules.

Are there risks of deepfake misuse with Sora?

Yes. OpenAI is putting strong safeguards in place to prevent misuse, such as mimicking real people or producing misleading content. Future releases may include watermarking and authenticity detection.

Sora is not here to end filmmaking — it’s here to expand it. As with any powerful tool, its future depends on how responsibly and creatively we wield it. For now, it's safe to say:

Sora is not replacing directors — it’s giving them new ways to dream.


All © Copyright reserved by Accessible-Learning Hub
