Introduction to Multimodal AI: OpenCLIP vs ALIGN!

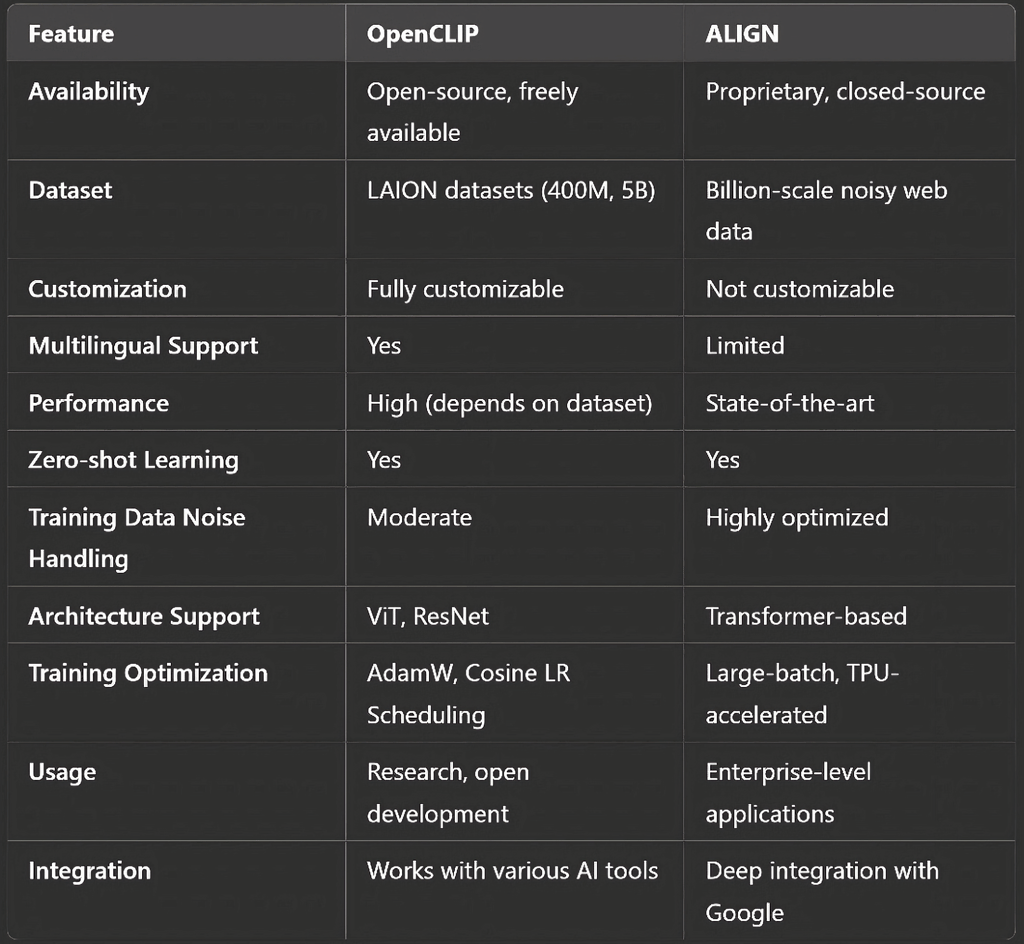

OpenCLIP vs ALIGN: A comprehensive comparison of two powerful vision-language models. Explore their architectures, training methodologies, performance benchmarks, strengths, limitations, and ideal use cases to determine which model best suits your AI needs.

Sachin K Chaurasiya

2/15/2025 · 4 min read

The rise of multimodal AI models has changed how artificial intelligence processes images and text together. Two notable models in this space are OpenCLIP and ALIGN. Both learn joint vision-language embeddings: they map images and text into a shared vector space so that an image and a caption describing it end up close together. But how do they differ? Which one is better suited for specific applications? In this article, we'll explore the differences, advantages, and limitations of OpenCLIP and ALIGN to help you understand their impact on AI-driven applications.

What is OpenCLIP?

OpenCLIP (Open Contrastive Language-Image Pretraining) is an open-source implementation of OpenAI's CLIP (Contrastive Language-Image Pretraining) model. It is maintained in the open_clip repository under the mlfoundations organization on GitHub, and many of its best-known checkpoints were trained in collaboration with LAION (Large-scale Artificial Intelligence Open Network). OpenCLIP aims to democratize access to high-quality vision-language models by providing publicly available training code and models trained on open datasets.
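For readers who want to try this, here is a minimal Python sketch of browsing and loading checkpoints. It assumes the `open_clip_torch` package is installed. The helper names (`filter_checkpoints`, `list_openclip_checkpoints`, `load_openclip`) are made up for this illustration, and the default checkpoint tag is only one published example, so check the list returned by the library for what is currently available.

```python
def filter_checkpoints(pairs, arch_prefix):
    """Keep (architecture, pretrained_tag) pairs whose architecture starts with arch_prefix."""
    return [(arch, tag) for arch, tag in pairs if arch.startswith(arch_prefix)]


def list_openclip_checkpoints(arch_prefix="ViT-B-32"):
    """List published checkpoints for one architecture family (hypothetical helper)."""
    # Imported lazily so filter_checkpoints works without the package installed.
    import open_clip  # pip install open_clip_torch

    # list_pretrained() returns (architecture, pretrained_tag) tuples.
    return filter_checkpoints(open_clip.list_pretrained(), arch_prefix)


def load_openclip(arch="ViT-B-32", tag="laion2b_s34b_b79k"):
    """Load a model, its image preprocessing transform, and its tokenizer.

    The default tag is an assumption chosen for illustration; weights are
    downloaded on first use.
    """
    import open_clip

    model, _, preprocess = open_clip.create_model_and_transforms(arch, pretrained=tag)
    tokenizer = open_clip.get_tokenizer(arch)
    return model.eval(), preprocess, tokenizer
```

With a model loaded this way, `model.encode_image(...)` and `model.encode_text(...)` produce the image and text embeddings discussed in the rest of this article.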

Key Features

Open-source and customizable: OpenAI released CLIP's model weights but not its training dataset, whereas OpenCLIP publishes training code alongside its checkpoints, so researchers and developers can modify the code and train their own models.

Large-scale training datasets: OpenCLIP checkpoints are trained on public datasets such as LAION-400M (roughly 400 million image-text pairs) and LAION-5B (more than 5 billion pairs).

Multilingual support: Some checkpoints pair the image encoder with a multilingual text encoder such as XLM-RoBERTa, which makes them useful for global applications. Checkpoints trained on English captions remain primarily English.

Efficient zero-shot learning: OpenCLIP can classify or retrieve images against arbitrary text labels without task-specific labeled training data.

Community-driven development: OpenCLIP continues to evolve thanks to contributions from researchers and developers worldwide.

Flexible model architectures: OpenCLIP supports multiple architectures and model sizes, making it adaptable to various hardware and performance needs.

Integration with other AI tools: OpenCLIP is built on PyTorch, so it can be used alongside other frameworks and libraries in that ecosystem.
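To make the zero-shot idea concrete, here is a small pure-Python sketch. The 3-dimensional vectors are toy stand-ins for real encoder outputs, and the function names and the `logit_scale` value of 100 are assumptions made for this illustration. The mechanism is the one CLIP-style models use: compare one image embedding with one text embedding per candidate label, then turn the similarities into probabilities.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_emb, label_embs, logit_scale=100.0):
    """Return {label: probability} for one image embedding and one text embedding per label."""
    img = normalize(image_emb)
    labels = list(label_embs)
    sims = [sum(a * b for a, b in zip(img, normalize(label_embs[label]))) for label in labels]
    probs = softmax([logit_scale * s for s in sims])
    return dict(zip(labels, probs))


# Toy embeddings; a real pipeline would get these from the image and text encoders.
image = [0.9, 0.1, 0.0]
prompts = {
    "a photo of a cat": [1.0, 0.0, 0.0],
    "a photo of a dog": [0.0, 1.0, 0.0],
    "a photo of a car": [0.0, 0.0, 1.0],
}
probs = zero_shot_classify(image, prompts)
best = max(probs, key=probs.get)
print(best)  # a photo of a cat
```

No classifier is trained here. Adding a new category only means adding a new text prompt, which is why this setup needs no labeled examples.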

Technical Details

Model Architectures: OpenCLIP supports ViT (Vision Transformer) and ResNet-based architectures, providing flexibility in model selection based on performance and computational efficiency.

Training Methodology: Uses contrastive learning, where image-text pairs are embedded into a shared space and trained to maximize similarity for matched pairs while minimizing similarity for unmatched pairs.

Optimization Techniques: Employs AdamW optimizer with cosine learning rate scheduling for efficient model convergence.

Scalability: Can be trained on distributed GPU clusters, making it ideal for large-scale AI research.
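Two of the points above, the contrastive objective and the cosine learning rate schedule, can be sketched in a few lines of pure Python. This is an illustrative sketch, not OpenCLIP's actual training code. The function names are invented here, the embeddings are assumed to be L2-normalized already, the temperature of 0.07 is used as a fixed constant for simplicity, and the linear warmup is an assumption about a typical setup.

```python
import math


def _log_softmax_at(row, index):
    """Log-probability assigned to position `index` by a softmax over `row`."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return row[index] - log_sum


def clip_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over an N x N similarity matrix.

    image_embs[i] and text_embs[i] are assumed to be a matched pair,
    and every vector is assumed to be unit length.
    """
    n = len(image_embs)
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in text_embs]
        for img in image_embs
    ]
    # Image -> text: each row should put its probability mass on the diagonal.
    i2t = -sum(_log_softmax_at(logits[i], i) for i in range(n)) / n
    # Text -> image: the same objective on the transposed matrix.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    t2i = -sum(_log_softmax_at(columns[j], j) for j in range(n)) / n
    return (i2t + t2i) / 2


def cosine_lr(step, total_steps, base_lr, warmup_steps=0):
    """Linear warmup followed by cosine decay from base_lr down to zero."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * base_lr * (1 + math.cos(math.pi * progress))


# Matched pairs give a loss near zero; swapping the captions makes it large.
aligned = clip_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

Every other item in the batch acts as a negative example, which is one reason this style of training benefits from large batches and distributed hardware.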

Pros

Fully open-source and accessible.

Allows model training with custom datasets.

Offers multilingual checkpoints.

Strong community support and continuous improvement.

Ideal for researchers and AI enthusiasts.

Supports diverse model architectures and configurations.

Can be deployed across different platforms and environments.

Cons

Performance depends on dataset quality and training setup.

Requires substantial computational resources for training.

Trained on web-scraped data that is filtered but still noisy, so data quality issues can carry over into the model.

Lacks seamless integration with proprietary AI services.

Use Cases

Academic Research & Experimentation: Open-source flexibility allows researchers to fine-tune and test different model architectures.

Custom AI Model Development: Developers can modify OpenCLIP to suit unique use cases, such as domain-specific image-text tasks.

Multilingual AI Applications: Checkpoints with multilingual text encoders make it useful for global AI solutions.

Open-Source AI Projects: Ideal for startups, AI communities, and developers building transparent and accessible AI models.

Fine-Tuning for Niche Applications: Businesses can train OpenCLIP on specific datasets for improved performance in specialized domains (e.g., medical imaging, fashion, or cultural heritage).

What is ALIGN?

ALIGN (A Large-scale ImaGe and Noisy-text embedding) is a proprietary model developed by Google Research. It is designed to align images and text into a shared embedding space, similar to CLIP, but it was trained on a larger and noisier dataset: about 1.8 billion image and alt-text pairs, compared with the roughly 400 million pairs used for the original CLIP.

Key Features

Trained on large-scale datasets: ALIGN was trained on about 1.8 billion noisy image and alt-text pairs collected from the web.

Strong generalization capabilities: Due to its diverse training data, ALIGN reports strong zero-shot performance on benchmarks such as ImageNet classification and Flickr30K and MSCOCO retrieval.

Proprietary and closed source: Unlike OpenCLIP, Google's original ALIGN weights and training data are not publicly available for fine-tuning or modification. An independent open reproduction from Kakao Brain is available through Hugging Face Transformers, but it is not the model Google trained.

Noise handled through scale: Rather than curating its data heavily, ALIGN applies only simple frequency-based filtering and relies on the sheer size of the dataset to offset the noise in web alt-text.

High-performance embedding: At the time of its publication in 2021, ALIGN reported state-of-the-art results on image-text retrieval benchmarks (Flickr30K and MSCOCO).

Ties to Google's ecosystem: ALIGN was built inside Google and is reported to support multimodal features in Google products such as Google Lens and image search.

High scalability and efficiency: ALIGN benefits from Google's vast computational resources, making it well suited to enterprise-level AI applications.

Technical Details

Model Architectures: Uses a dual-encoder design with an EfficientNet image encoder (a convolutional network) and a BERT text encoder (a transformer), trained together for large-scale vision-language learning.

Training Methodology: Leverages contrastive learning similar to CLIP but with a larger dataset and only simple frequency-based filtering, relying on scale rather than heavy cleaning to cope with noise.

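Optimization Techniques: Uses large-batch training on distributed TPUs (Tensor Processing Units). The ALIGN paper reports an effective batch size of 16,384 across 1,024 Cloud TPUv3 cores, which gives each example many in-batch negatives.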

Scalability: Designed to run on Google’s cloud infrastructure, making it highly efficient for enterprise applications.
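As a rough illustration of what lightweight noise handling on web alt-text can look like, the sketch below applies frequency and length rules to image and alt-text pairs. The default thresholds are modeled on the rules described in the ALIGN paper (drop alt-texts shared by more than 10 images, and drop texts shorter than 3 or longer than 20 words). The function name, the lowercasing, and the whitespace tokenization are simplifications assumed for this example, and this is not Google's actual pipeline.

```python
from collections import Counter


def filter_alt_text_pairs(pairs, max_images_per_text=10, min_tokens=3, max_tokens=20):
    """Filter (image_id, alt_text) pairs with simple frequency and length rules.

    Returns the pairs that survive, in their original order.
    """
    # Count how many images share each (case-insensitive) alt-text.
    text_counts = Counter(text.strip().lower() for _, text in pairs)

    kept = []
    for image_id, text in pairs:
        key = text.strip().lower()
        n_tokens = len(key.split())
        if text_counts[key] > max_images_per_text:
            continue  # boilerplate shared by many images, e.g. "click to enlarge"
        if n_tokens < min_tokens or n_tokens > max_tokens:
            continue  # too short to be descriptive, or suspiciously long
        kept.append((image_id, text))
    return kept


raw = [(i, "click to enlarge photo") for i in range(11)]
raw += [(100, "dog"), (101, "a brown dog running on the beach")]
clean = filter_alt_text_pairs(raw)
print(clean)  # [(101, 'a brown dog running on the beach')]
```

Rules like these remove obvious junk cheaply but leave plenty of loosely related captions in place, which is why this approach depends on dataset scale to work well.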

Pros

Optimized for large-scale noisy web data.

Strong zero-shot performance on published benchmarks.

Developed by Google Research with large-scale training infrastructure.

Well suited to real-world tasks like image-text retrieval and classification.

Highly scalable and efficient for enterprise use.

Integrated into Google’s AI ecosystem, enhancing practical applications.

Cons

Closed-source and not accessible for modification.

No transparency on exact dataset details.

No support for fine-tuning by third parties.

Limited adaptability outside of Google’s proprietary ecosystem.

Use Cases

Enterprise-Level AI Solutions: ALIGN targets large-scale applications of the kind Google operates, such as search, Lens, and AI-driven recommendation systems.

Web-Scale AI Applications: Because it was trained on a massive, noisy web dataset, ALIGN is well suited to real-world environments where data is messy.

AI-Powered Image and Text Retrieval: ALIGN's embeddings help in applications like content moderation, image classification, and large-scale search engines.

Zero-Shot Learning for Commercial Use: Businesses in e-commerce, social media, and content tagging can benefit from ALIGN-style pre-trained embeddings where access to them is available.

Google AI Ecosystem Integration: ALIGN originates inside Google, making it most relevant for companies that rely on Google's infrastructure.
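The retrieval use case above comes down to ranking a gallery of image embeddings by similarity to a text query embedding. Here is a minimal pure-Python sketch with toy vectors. The names `cosine` and `retrieve` and the file names are invented for this example, and real embeddings would come from a model's image and text encoders.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_emb, gallery, k=2):
    """Return the ids of the k gallery items most similar to the query embedding."""
    scored = [(cosine(query_emb, emb), item_id) for item_id, emb in gallery.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item_id for _, item_id in scored[:k]]


# Toy image embeddings keyed by file name.
gallery = {
    "cat.jpg": [0.9, 0.1, 0.0],
    "dog.jpg": [0.1, 0.9, 0.0],
    "car.jpg": [0.0, 0.1, 0.9],
}
text_query = [1.0, 0.2, 0.0]  # stand-in for the embedding of a text query
print(retrieve(text_query, gallery, k=2))  # ['cat.jpg', 'dog.jpg']
```

This linear scan is fine for small collections. At web scale, an approximate nearest-neighbor index usually replaces it.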

Which One Should You Choose?

The choice between OpenCLIP and ALIGN depends on your specific needs and priorities:

If you need a fully open, customizable, and community-driven model, OpenCLIP is the best choice.

If you are looking for strong performance from training on noisy web-scale data, ALIGN is a powerful reference point, but because Google has not released the original model, it is not available for direct use by most teams.

Both models contribute significantly to the advancement of vision-language AI, paving the way for more intelligent, multimodal applications in the future. Whether you are an AI researcher, developer, or business owner, understanding these models can help you make informed decisions when building AI-powered solutions.

All © Copyright reserved by Accessible-Learning Hub
