How ALIGN, MetaCLIP, and DFN Are Shaping Next-Gen AI Models

Discover the key differences between ALIGN, MetaCLIP, and DFN (Data Filtering Networks) in this in-depth comparison. Learn about their architectures, advantages, limitations, and best use cases for AI-driven vision-language models.


Sachin K Chaurasiya

3/22/2025, 4 min read

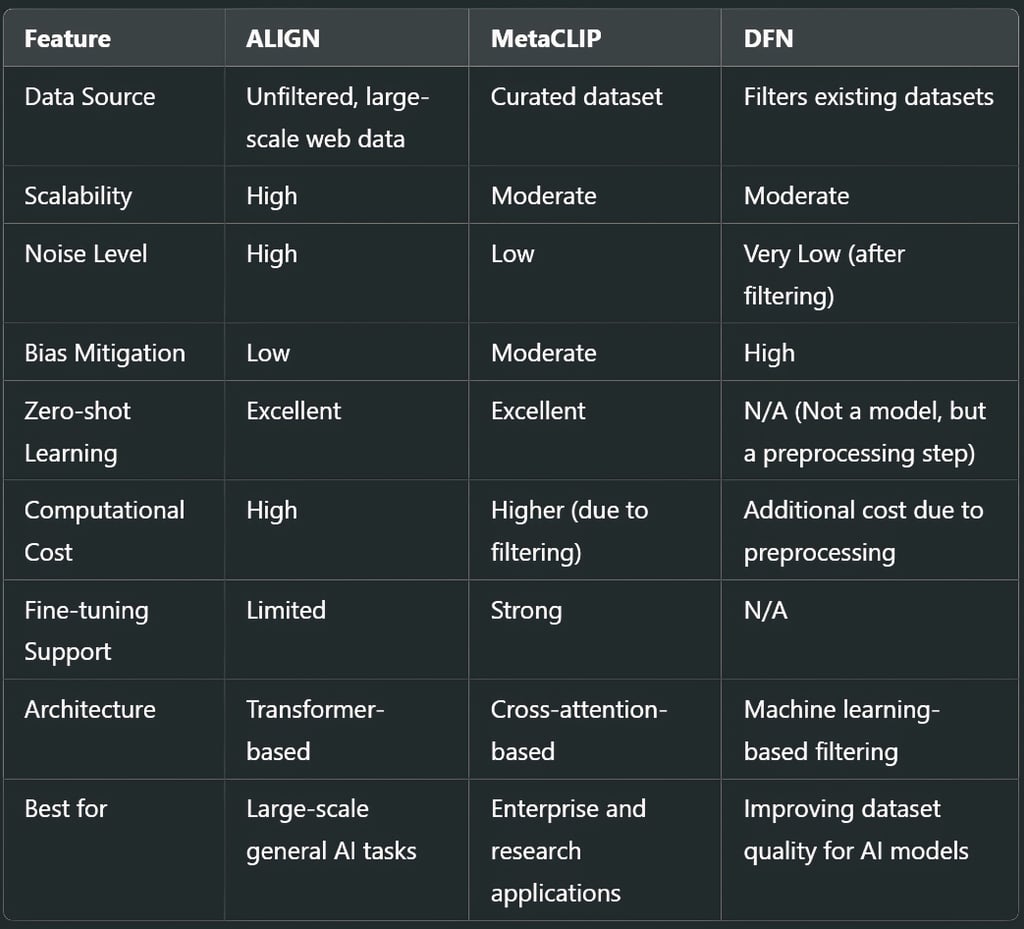

In the rapidly evolving landscape of AI and deep learning, image-text models play a crucial role in enabling machines to connect textual descriptions with visual data. Three notable approaches, ALIGN, MetaCLIP, and Data Filtering Networks (DFN), stand out for how they deal with the quality of web-scale training data: ALIGN embraces noisy data at scale, MetaCLIP curates it with metadata, and DFN filters it with a learned model. This article compares the three, highlighting their strengths, applications, and key differences.

What is ALIGN?

ALIGN (A Large-scale ImaGe and Noisy-text embedding) is a model developed by Google Research that learns visual and language representations from large-scale image-text pairs collected from the internet.

Key Features

Trains on roughly 1.8 billion image and alt-text pairs sourced from the web, without manual annotation.

Employs contrastive learning: matching image-text pairs are pulled together in a shared embedding space, while mismatched pairs in the same batch are pushed apart.

Trains on noisy and diverse data, making it highly scalable.

Applies only a few simple, frequency-based filters (for example, dropping alt-texts shared by many images) instead of heavy curation, relying on scale to outweigh the remaining noise.

Uses a dual-encoder architecture: images and texts are encoded independently into the same embedding space, so matching them reduces to a fast similarity comparison.

Supports zero-shot transfer, allowing the model to handle new classification and retrieval tasks without fine-tuning.

Pairs a Transformer-based BERT text encoder with a convolutional EfficientNet image encoder to produce its text and image embeddings.
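The contrastive objective described in this list can be written as a symmetric cross-entropy over a batch similarity matrix: each image should rank its own caption highest, and each caption its own image. The following is a minimal, dependency-free sketch of that idea, not ALIGN's implementation; the function names, the toy 2-D embeddings, and the fixed temperature of 0.05 are assumptions (in practice the temperature is usually a learned parameter).

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits: List[float], target: int) -> float:
    """Softmax cross-entropy for one row of logits (log-sum-exp for numerical stability)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(image_embs: List[Vector], text_embs: List[Vector],
                     temperature: float = 0.05) -> float:
    """Symmetric contrastive loss; pair i is (image_embs[i], text_embs[i])."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: cosine similarity of image i and text j, divided by the temperature.
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text: row i should put its probability mass on column i (the true caption).
    i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-image: column i should put its probability mass on row i (the true image).
    t2i = sum(cross_entropy([logits[j][i] for j in range(n)], i) for i in range(n)) / n
    return (i2t + t2i) / 2
```

With correctly matched toy pairs such as `contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])` the loss is close to zero, while swapping the two captions drives it to about 20, which is the signal that pulls true pairs together during training.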

Strengths

Highly scalable due to extensive data utilization.

Generalizes well across different domains without requiring fine-tuning.

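At publication (2021), reported state-of-the-art results on the Flickr30K and MSCOCO image-text retrieval benchmarks, along with strong zero-shot ImageNet classification.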

Extends to multilingual data; the ALIGN authors also trained a multilingual variant covering more than 100 languages, making it useful for global applications.

Produces general-purpose image and text embeddings that serve many downstream tasks, from classification to image-text retrieval.
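Zero-shot classification with a dual encoder needs no trained classifier: the text encoder embeds one prompt per class, and the image is assigned to the class whose prompt embedding is most similar. A minimal sketch, assuming the embeddings already exist; the toy 3-D vectors and the prompt wording "a photo of a {class}" (a common choice for CLIP-style models) are illustrative assumptions.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def zero_shot_classify(image_emb: Vector, class_text_embs: Dict[str, Vector]) -> str:
    """Return the class whose prompt embedding is closest to the image embedding.

    class_text_embs maps a class name to the text-encoder embedding of a prompt
    such as "a photo of a {class}"; no classifier weights are trained.
    """
    return max(class_text_embs, key=lambda name: cosine(image_emb, class_text_embs[name]))


# Hypothetical encoder outputs for three class prompts.
prompts = {"cat": [1.0, 0.0, 0.0], "dog": [0.0, 1.0, 0.0], "car": [0.0, 0.0, 1.0]}
print(zero_shot_classify([0.9, 0.1, 0.0], prompts))  # cat
```

Adding a new class only means embedding one more prompt, which is why the same model can be pointed at new label sets without fine-tuning.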

Limitations

Noisy datasets can lead to inconsistencies and misalignments.

The lack of human curation may introduce biases and irrelevant associations.

May require additional post-processing to filter out low-quality alignments.

High computational cost due to large-scale data processing.
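One inexpensive form of the post-processing mentioned in these limitations is a set of rule-based checks on the alt-text, applied before any model-based scoring. The sketch below is illustrative only: the function name and the thresholds (at most 10 images per alt-text, 3 to 20 words) are assumptions chosen for the example, not settings taken from a specific system.

```python
from collections import Counter
from typing import List, Tuple

Pair = Tuple[str, str]  # (image_id, alt_text)

# Illustrative thresholds, chosen for this sketch only.
MAX_IMAGES_PER_TEXT = 10  # alt-texts reused across many images are often boilerplate
MIN_WORDS, MAX_WORDS = 3, 20


def rule_based_filter(pairs: List[Pair]) -> List[Pair]:
    """Drop pairs whose alt-text is too generic (shared by many images) or has an unusual length."""
    # Count how many distinct images each exact alt-text string is attached to.
    images_per_text = Counter(text for _, text in set(pairs))
    kept = []
    for image_id, text in pairs:
        if images_per_text[text] > MAX_IMAGES_PER_TEXT:
            continue  # generic caption such as "click here to view"
        if not (MIN_WORDS <= len(text.split()) <= MAX_WORDS):
            continue  # too short to describe the image, or too long to be a caption
        kept.append((image_id, text))
    return kept
```

Rules like these are cheap enough to run over billions of pairs, but they only catch obvious noise; a caption that is well formed yet describes the wrong image passes straight through.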

What is MetaCLIP?

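MetaCLIP (Metadata-Curated Language-Image Pre-training) is Meta's effort to reproduce and open up the data-curation process behind OpenAI's CLIP (Contrastive Language-Image Pretraining). It keeps CLIP's model and training setup and focuses on building better training data.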

Key Features

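Builds its training set from web data (CommonCrawl) by matching captions against a large list of metadata entries (concepts drawn from sources such as WordNet and Wikipedia), reducing the noise present in raw web-scraped pairs.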

Keeps CLIP's contrastive learning objective unchanged; the gains come from dataset quality.

Produces general-purpose embeddings suited to downstream tasks like retrieval, zero-shot classification, and serving as a backbone for captioning systems.

Balances the data by capping how many pairs each metadata entry can contribute, so a few very common concepts do not dominate the training set.

Like CLIP, relies on large GPU clusters for pretraining at scale.

Released checkpoints can be fine-tuned for domain-specific applications.

Learns from natural-language supervision (image-caption pairs) rather than from manually labeled classes.

Like CLIP, uses a dual-encoder design without cross-attention: image and text embeddings are aligned through dot-product similarity in a shared space.
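MetaCLIP's curation, as described in its paper, has two stages: match each caption against a list of metadata entries, then balance by capping how many pairs each entry contributes. The following is a simplified sketch in that spirit, not Meta's released code; the function names, the naive substring scan, the toy metadata, and the cap `t` are assumptions for illustration, and the real pipeline matches against a far larger metadata list.

```python
import random
from collections import Counter
from typing import List


def match_entries(text: str, metadata: List[str]) -> List[int]:
    """Indices of metadata entries that occur as substrings of the text (naive scan)."""
    lowered = text.lower()
    return [i for i, entry in enumerate(metadata) if entry.lower() in lowered]


def curate(texts: List[str], metadata: List[str], t: int, seed: int = 0) -> List[str]:
    """Metadata matching followed by balancing.

    1. Substring-match each text against the metadata; texts with no match are dropped.
    2. Count matches per entry over the whole pool.
    3. An entry with count c is sampled with probability min(1, t / c): tail entries
       (c <= t) are always kept, head entries are down-sampled to about t matches.
    """
    rng = random.Random(seed)
    matches = [match_entries(text, metadata) for text in texts]
    counts = Counter(i for ids in matches for i in ids)
    entry_prob = {i: min(1.0, t / c) for i, c in counts.items()}

    curated = []
    for text, ids in zip(texts, matches):
        # A text survives if any of its matched entries is sampled.
        if any(rng.random() < entry_prob[i] for i in ids):
            curated.append(text)
    return curated


pool = ["a dog in the park"] * 100 + ["aurora over a lake", "blurry photo 123"]
kept = curate(pool, ["dog", "aurora"], t=10)
```

In this toy pool the rare "aurora" caption is always kept, the unmatched caption is always dropped, and the 100 "dog" captions are thinned to roughly 10, which is how balancing flattens the distribution across concepts.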

Strengths

More reliable results due to data filtering and preprocessing.

Reported higher zero-shot ImageNet accuracy than OpenAI's CLIP when trained on the same number of pairs (400M), attributing the gain to better-aligned data.

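Its curation algorithm and metadata are public, so the training distribution is easier to audit for bias than an undisclosed dataset, which suits responsible AI applications.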

Curation based on explicit, human-readable metadata makes it easier to see which concepts the training data covers.

Because the model and training recipe match CLIP's, improvements can be attributed to the data rather than to architecture or optimizer changes.

Limitations

Balancing discards many pairs tied to common concepts, so reaching a given dataset size requires a larger raw pool than ALIGN's lighter filtering does.

Adds a curation stage (metadata matching and balancing) before training, although this stage is inexpensive compared with model-based filtering.

Filtering can sometimes lead to a loss of valuable diversity, reducing generalization ability.

Like ALIGN, serving it in large real-time systems requires substantial compute, which depends mainly on the size of the chosen encoders.

What is DFN (Data Filtering Networks)?

Data Filtering Networks (DFN), introduced by Apple researchers in 2023, improve dataset quality by using a neural network to score candidate image-text pairs and discard irrelevant or low-quality ones before the main model is trained.

Key Features

Acts as a pre-processing step, ensuring higher-quality input data.

Uses a trained filtering network, itself a CLIP-style model trained on high-quality data, to score how well each image matches its text.

Aims to keep mismatched or low-quality pairs from degrading the downstream model, which can reduce errors and some of the biases it would otherwise learn.

The filtered dataset can be used to train contrastive models such as ALIGN- or CLIP/MetaCLIP-style dual encoders.

Discards pairs whose image-text score falls below a cutoff, which removes many outliers that could negatively impact training.

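The filter can be improved by training it on higher-quality data; the DFN authors reported that a network's filtering quality depends more on that data than on the network's own downstream accuracy.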

Controls selection with a simple score threshold or keep-fraction, a hyperparameter that trades dataset size against data quality.
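In practice a data filtering network acts as a scorer: it embeds each candidate image and its text, and pairs with low image-text similarity are dropped. The sketch below assumes the embeddings were already produced by some trained filter model (hypothetical here) and keeps the top fraction of the pool; `filter_pool` and `keep_fraction` are illustrative names, not an API from the DFN work.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def filter_pool(image_embs: List[Vector], text_embs: List[Vector],
                keep_fraction: float) -> List[int]:
    """Keep the top keep_fraction of pairs ranked by filter-model image-text similarity.

    image_embs[i] and text_embs[i] are assumed to come from a separate, already-trained
    filtering network applied to candidate pair i. Returns kept indices in original order.
    """
    scores = [cosine(img, txt) for img, txt in zip(image_embs, text_embs)]
    n_keep = round(keep_fraction * len(scores))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])


# Hypothetical filter-model embeddings for four candidate pairs.
images = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
texts = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
print(filter_pool(images, texts, keep_fraction=0.5))  # [0, 2]
```

Ranking by a keep-fraction rather than a fixed score threshold makes the output size predictable; either way, the cutoff is the main knob that trades dataset size against quality.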

Strengths

Significantly reduces noise, leading to cleaner and more effective training data.

Can help exclude problematic pairs, although the filter also inherits the biases of the data it was trained on.

Improves downstream models trained on the filtered data; for example, the authors reported 84.4% zero-shot ImageNet accuracy for a ViT-H model trained on their DFN-filtered dataset.

The same filtered dataset can be reused to train many different models, spreading the cost of filtering across the whole pipeline.

Complements, rather than replaces, techniques such as synthetic data generation or augmentation, which can be applied to the filtered data afterwards.

Limitations

Requires running the filtering network over every candidate pair, which adds substantial compute before training begins.

Filtering criteria need to be carefully designed to avoid removing valuable diversity.

May require continuous monitoring and updates to remain effective with evolving datasets.

Is not an end-user model: its output is a filtered dataset that must still be used to train a separate image-text model.

Use Cases & Best Applications

ALIGN is ideal for applications requiring large-scale, general-purpose learning, such as image-text retrieval, weakly supervised vision-language pretraining, and multilingual tasks.
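For image retrieval, a dual-encoder model lets image embeddings be computed once and stored, so each text query needs only one text-encoder pass plus a similarity search. A brute-force sketch under that assumption: the embeddings and file names are hypothetical stand-ins for encoder outputs, and large collections would typically use an approximate nearest-neighbor index instead of a full scan.

```python
import math
from typing import Dict, List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def build_index(image_embs: Dict[str, Vector]) -> Dict[str, Vector]:
    """Normalize image embeddings once, offline; possible because images are encoded independently."""
    return {image_id: normalize(v) for image_id, v in image_embs.items()}


def search(index: Dict[str, Vector], query_emb: Vector, k: int = 2) -> List[str]:
    """Return the k image ids with the highest cosine similarity to the text-query embedding."""
    q = normalize(query_emb)
    scores = {image_id: sum(a * b for a, b in zip(q, v)) for image_id, v in index.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]


index = build_index({"beach.jpg": [0.9, 0.1], "forest.jpg": [0.1, 0.9], "dunes.jpg": [0.7, 0.3]})
print(search(index, [1.0, 0.0], k=2))  # ['beach.jpg', 'dunes.jpg']
```

Because the index never depends on the query, new images can be added incrementally, and query latency is dominated by the similarity search rather than by the image encoder.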

MetaCLIP is best for scenarios that demand high accuracy and a transparent, reproducible data pipeline, such as enterprise AI solutions, research projects, and responsible AI applications.

DFN is a valuable tool for AI practitioners who need to improve dataset quality, especially when training on large, noisy web-scraped pools where mismatched pairs would otherwise lead to errors or biases.

Choosing between ALIGN, MetaCLIP, and DFN depends on specific needs. ALIGN offers raw scalability at the cost of noisier data; MetaCLIP improves data quality through transparent, metadata-based curation; and DFN uses a learned model to filter mismatched and low-quality pairs out of the training data for any image-text model. A hybrid approach, combining ALIGN's scalability, MetaCLIP's curated learning, and DFN's data refinement, may be the optimal path for building next-generation AI models. As AI continues to evolve, these approaches will play an essential role in shaping the future of vision-language understanding.


© Accessible-Learning Hub. All rights reserved.

