Green AI – Can Artificial Intelligence Be Eco-Friendly?

Can Artificial Intelligence Be Eco-Friendly? Discover the rise of Green AI, a sustainable approach to developing and deploying AI with minimal environmental impact. Learn about energy-efficient models, carbon-aware computing, and how AI can protect the planet instead of harming it.

AI Assistant | Environment | Company/Industry

Sachin K Chaurasiya

7/9/2025 | 5 min read

Artificial Intelligence (AI) has become the engine behind today's most transformative technologies—from smart assistants to climate prediction models. However, as AI grows, so does its environmental footprint. Training large-scale models like GPT, BERT, or image generators consumes massive computational power and energy—raising a critical question: Can AI ever be eco-friendly?

The answer lies in a powerful new movement—Green AI—a philosophy and practice aimed at making AI more sustainable, efficient, and responsible.

What Is Green AI?

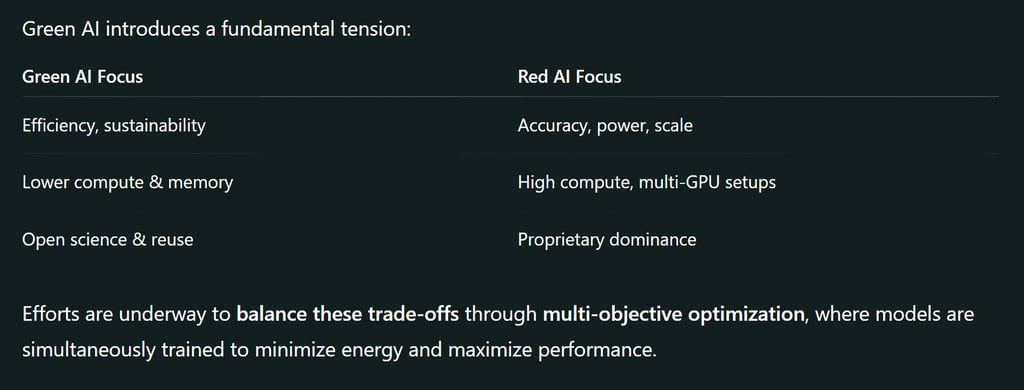

Green AI refers to the development and deployment of artificial intelligence technologies in an energy-efficient, resource-conscious, and environmentally friendly manner. The term was coined in a 2019 research paper by Roy Schwartz et al., which contrasts Green AI with "Red AI": work that prioritizes accuracy and performance at any cost, often with huge energy consumption.

Key Goals

Lower computational costs

Reduce carbon emissions

Promote transparency in energy usage

Encourage eco-conscious innovation

Environmental Impact of Traditional AI

While AI is often hailed as a tool for combating climate change, it also contributes to it in surprising ways.

Energy Consumption

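Training a single large model can carry a heavy carbon cost. A widely cited 2019 study by Strubell et al. estimated that training one large Transformer model, including a neural architecture search, emitted over 284,000 kg of CO₂e, roughly five times the lifetime emissions of an average car (fuel included). Later estimates put the training of GPT-3 at about 1,287 MWh of electricity and around 552 tonnes of CO₂e.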

Resource Demands

Data centers powering AI models consume large amounts of electricity, much of which is still generated from fossil fuels in many regions.

Hardware production (GPUs, TPUs) involves mining rare earth metals, causing habitat destruction and pollution.

How AI Can Become Eco-Friendly

Green AI is not just a theoretical concept. Several innovations and practices are driving the movement toward sustainable AI.

Efficient Algorithms

Pruning, quantization, and distillation help shrink AI models while retaining performance.

Smaller models = less computation = lower emissions.

Energy-Aware Model Training

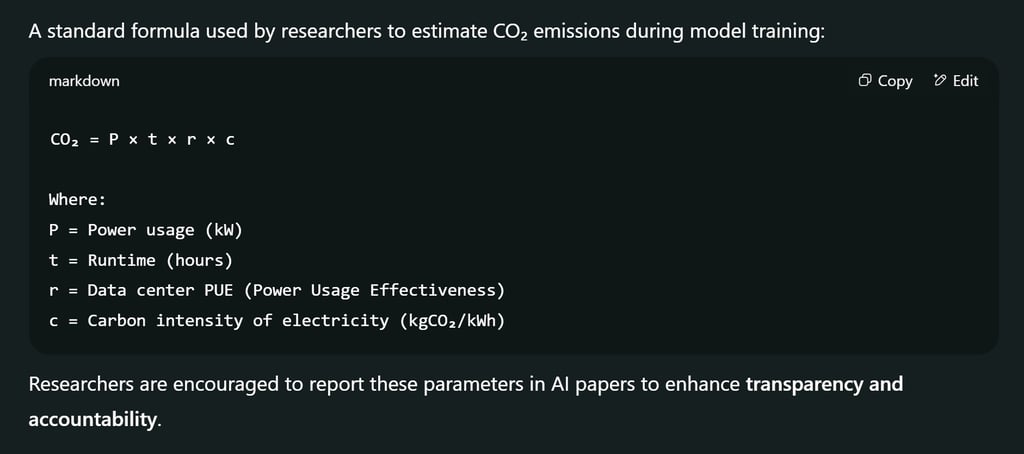

New research focuses on tracking energy usage during training and inference.

Researchers also advocate publishing “energy labels” (reported energy use and emissions) alongside released AI models.
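Tracking energy usually means sampling power draw (from a wall meter or GPU telemetry) and integrating it over time. The sketch below assumes you already have timestamped power readings in watts; how they are collected is not shown, and the `EnergyLabel` fields are a hypothetical format, not any published labeling standard.

```python
from dataclasses import dataclass


@dataclass
class EnergyLabel:
    """Hypothetical energy label; field names are illustrative, not a standard."""
    model_name: str
    duration_h: float
    energy_kwh: float


def energy_kwh(timestamps_s: list[float], power_w: list[float]) -> float:
    """Integrate sampled power (W) over time (s) with the trapezoidal rule, returning kWh."""
    if len(timestamps_s) != len(power_w) or len(timestamps_s) < 2:
        raise ValueError("need at least two (timestamp, power) samples of equal length")
    joules = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        joules += 0.5 * (power_w[i] + power_w[i - 1]) * dt  # average power x interval
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


def make_label(model_name: str, timestamps_s: list[float], power_w: list[float]) -> EnergyLabel:
    duration_h = (timestamps_s[-1] - timestamps_s[0]) / 3600
    return EnergyLabel(model_name, duration_h, energy_kwh(timestamps_s, power_w))


# Example: a constant 300 W draw sampled every 10 minutes for one hour.
times = [0, 600, 1200, 1800, 2400, 3000, 3600]
label = make_label("demo-model", times, [300.0] * len(times))
print(label)
```

The trapezoidal rule is a reasonable default when samples are evenly spaced; sparser sampling simply makes the estimate coarser.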

Renewable-Powered Data Centers

Companies like Google and Microsoft are shifting to solar, wind, and hydro-powered AI operations.

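Some operators report matching 100% of their annual electricity consumption with renewable energy purchases, although round-the-clock carbon-free operation remains a goal rather than the norm.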

Decentralized & Edge AI

Running AI models locally (on smartphones, IoT) reduces data transfer and centralized computing needs.

On-device AI can be more sustainable for lightweight everyday tasks, since it avoids round trips to remote servers.

Benchmarking for Green Performance

Tools like the ML CO2 Impact Estimator help researchers and developers assess their model's carbon footprint.

Some leaderboards on platforms like Papers With Code also report efficiency metrics, such as parameter counts or FLOPs, alongside accuracy.
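Footprint estimators of this kind rest on simple arithmetic: energy is hardware power multiplied by runtime (optionally scaled by the data center's power usage effectiveness, PUE), and emissions are energy multiplied by the grid's carbon intensity. The sketch below shows only that arithmetic; the exact inputs each tool uses may differ, and the default PUE of 1.1 and the example numbers are illustrative assumptions.

```python
def estimate_emissions_kg(
    num_devices: int,
    watts_per_device: float,
    hours: float,
    grid_kg_per_kwh: float,
    pue: float = 1.1,  # assumed data-center overhead (cooling, power delivery)
) -> float:
    """Rough CO2e estimate: device energy x PUE x grid carbon intensity."""
    it_energy_kwh = num_devices * watts_per_device * hours / 1000  # W*h -> kWh
    facility_energy_kwh = it_energy_kwh * pue
    return facility_energy_kwh * grid_kg_per_kwh


# Hypothetical job: 8 accelerators at 300 W for 100 hours on a 0.4 kg CO2e/kWh grid.
kg = estimate_emissions_kg(8, 300, 100, 0.4)
print(f"{kg:.1f} kg CO2e")  # 240 kWh x 1.1 x 0.4 -> 105.6 kg CO2e
```

Using rated (TDP) power instead of measured power, as quick estimates often do, tends to overstate energy for jobs that do not keep the hardware fully loaded.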

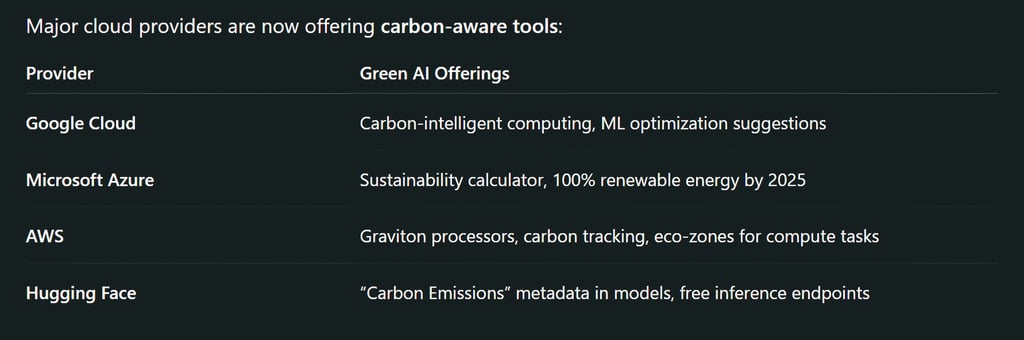

Real-World Examples of Green AI in Action

Google DeepMind

Uses AI to optimize energy consumption in data centers, cutting energy usage for cooling by up to 40%.

Hugging Face

Introduced the “Optimum” library for efficient transformers and encourages model sharing to avoid redundant training.

OpenAI

While GPT models are computationally heavy, OpenAI also offers lighter, lower-cost variants such as GPT-3.5 Turbo, although it does not publish their sizes or energy use.

Can AI Help the Environment Too?

Absolutely. Despite its footprint, AI can also be a force for environmental good:

Climate Prediction

AI enhances the accuracy of weather and climate models, helping governments and communities prepare for extreme events.

Smart Grids

AI helps balance power loads, forecast energy demand, and integrate renewables into national grids more efficiently.

Agriculture & Conservation

AI drones and sensors monitor soil health, crop disease, and deforestation in real time.

Wildlife tracking via AI vision systems aids endangered species protection.

Model Optimization Techniques

Model efficiency is the core driver of Green AI. The more optimized an AI model is, the less energy and hardware resources it consumes. Here are the key techniques:

Knowledge Distillation

Trains a smaller model (student) to mimic a larger model (teacher).

Reduces inference costs while preserving predictive power.

Example: DistilBERT is 40% smaller than BERT-base and about 60% faster at inference while retaining about 97% of its language-understanding performance.
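The student/teacher idea is usually implemented as a combined loss: the student learns to match the teacher's temperature-softened output distribution as well as the true labels, in the formulation popularized by Hinton et al. (2015). Below is a minimal pure-Python sketch for a single example; the temperature of 2.0 and the 50/50 weighting `alpha` are illustrative defaults, and DistilBERT's own training recipe adds further loss terms not shown here.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) for discrete distributions; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      true_label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    # Soft part: match the teacher's temperature-softened distribution.
    soft = kl_divergence(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))
    soft *= temperature ** 2  # T^2 keeps this term's gradient scale comparable across temperatures
    # Hard part: ordinary cross-entropy against the true label at T = 1.
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard


teacher = [4.0, 1.0, 0.5]
print(distillation_loss([3.5, 1.2, 0.4], teacher, true_label=0))  # student close to teacher
print(distillation_loss([0.5, 1.0, 4.0], teacher, true_label=0))  # student disagrees: larger loss
```

A higher temperature flattens both distributions, so the student also learns how the teacher ranks the wrong classes, which is information a hard label alone does not carry.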

Pruning

Removes redundant neurons and weights post-training.

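Aims to maintain model performance while reducing computation.

Approaches include magnitude pruning, structured pruning (removing whole neurons, channels, or attention heads), and iterative pruning guided by the lottery ticket hypothesis.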
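A minimal sketch of unstructured magnitude pruning on a single weight matrix: rank all weights by absolute value and zero out the smallest fraction. It is pure Python for readability; real frameworks apply masks to tensors and usually fine-tune afterward to recover accuracy.

```python
def magnitude_prune(weights: list[list[float]], sparsity: float) -> list[list[float]]:
    """Unstructured magnitude pruning: zero the `sparsity` fraction of smallest-|w| weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    rows, cols = len(weights), len(weights[0])
    k = int(rows * cols * sparsity)  # number of weights to remove
    # All (row, col) positions ordered from smallest to largest magnitude.
    order = sorted(((r, c) for r in range(rows) for c in range(cols)),
                   key=lambda rc: abs(weights[rc[0]][rc[1]]))
    pruned = [row[:] for row in weights]  # copy; leave the input untouched
    for r, c in order[:k]:
        pruned[r][c] = 0.0
    return pruned


W = [[0.9, -0.05, 0.3],
     [0.01, -0.7, 0.2]]
print(magnitude_prune(W, 0.5))  # [[0.9, 0.0, 0.3], [0.0, -0.7, 0.0]]
```

Zeroed weights only save energy when the runtime or hardware exploits sparsity. Structured pruning, which removes entire rows, channels, or heads, shrinks the dense matrices themselves and is easier to speed up on ordinary hardware.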

Quantization

Converts high-precision weights (e.g., float32) to lower precision (e.g., int8).

Reduces memory usage, speeds up inference, and lowers power consumption.

Often used in edge AI models for mobile and IoT deployment.
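A minimal sketch of symmetric, per-tensor int8 quantization: each float is divided by a single scale factor (the largest absolute value divided by 127) and rounded to an integer in [-127, 127]. Production toolkits add refinements such as per-channel scales, zero points, and calibration data; this pure-Python version only illustrates the core mapping and the rounding error it introduces.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization to the int8 range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() then clamp; Python ints stand in for a packed int8 array here.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [qi * scale for qi in q]


weights = [0.42, -1.27, 0.003, 0.88]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, max_error <= scale / 2)
```

Storing each weight in one byte instead of four (float32) cuts weight memory by roughly 4x, at the cost of a rounding error of at most half a scale step per weight.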

Low-Rank Factorization

Decomposes weight matrices into products of smaller matrices.

Reduces parameter count and computational complexity.
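The savings from low-rank factorization come straight from the parameter count: an m × n weight matrix W is replaced by U (m × r) and V (r × n), so the layer stores r(m + n) numbers instead of mn and computes xUV as two smaller products. The sketch counts parameters and checks, on a tiny rank-1 example, that applying U then V gives the same output as applying W; finding good U and V for a trained W (typically via a truncated SVD) is not shown.

```python
def dense_params(m: int, n: int) -> int:
    return m * n


def low_rank_params(m: int, n: int, r: int) -> int:
    return m * r + r * n  # U is m x r, V is r x n


def vec_mat(x: list[float], M: list[list[float]]) -> list[float]:
    """Multiply row vector x (length = rows of M) by matrix M."""
    return [sum(x[i] * M[i][j] for i in range(len(x))) for j in range(len(M[0]))]


# Parameter savings for a 1024 x 1024 layer factorized at rank 64.
print(dense_params(1024, 1024), low_rank_params(1024, 1024, 64))  # 1048576 131072

# Tiny exact case: W = U V has rank 1, so the factorized layer reproduces it.
U = [[1.0], [2.0]]                       # 2 x 1
V = [[3.0, 4.0, 5.0]]                    # 1 x 3
W = [[3.0, 4.0, 5.0], [6.0, 8.0, 10.0]]  # U @ V
x = [1.0, 1.0]
assert vec_mat(vec_mat(x, U), V) == vec_mat(x, W)
```

The multiply-add count per input vector drops in the same proportion, from mn to r(m + n), so the layer becomes cheaper whenever r is well below mn / (m + n).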

Hardware-Level Innovations

AI Accelerators

TPUs (Tensor Processing Units) and FPGAs offer energy-efficient alternatives to GPUs.

For some workloads, TPUs have been reported to deliver considerably better performance per watt than contemporary GPUs, though the gap varies by chip generation and task.

Neuromorphic Computing

Mimics the human brain's energy-efficient processing.

Chips like Intel’s Loihi can use orders of magnitude less energy per inference than conventional processors on certain sparse, event-driven workloads.

Analog AI

Utilizes analog circuits instead of digital logic.

Can significantly lower energy consumption for edge inference, although most analog AI chips are still at the research or early-commercial stage.

Geographical & Cloud-Aware Scheduling

AI training efficiency depends on where and when computation is run.

Geographical Scheduling

Train models in regions with low-carbon energy grids.

Example: Data centers in Iceland use 100% renewable geothermal/hydro energy.
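In code, geographical scheduling can be as simple as choosing, among the regions a job is allowed to run in, the one with the lowest grid carbon intensity. The region names and gCO₂e/kWh figures below are made-up placeholders; real values would come from a grid-data provider or a cloud vendor's published figures.

```python
from typing import Optional


def pick_greenest_region(intensity_g_per_kwh: dict[str, float],
                         allowed: Optional[set[str]] = None) -> str:
    """Return the allowed region with the lowest carbon intensity (gCO2e/kWh)."""
    candidates = {region: g for region, g in intensity_g_per_kwh.items()
                  if allowed is None or region in allowed}
    if not candidates:
        raise ValueError("no allowed region has intensity data")
    return min(candidates, key=candidates.get)


def job_emissions_kg(energy_kwh: float, g_per_kwh: float) -> float:
    return energy_kwh * g_per_kwh / 1000  # grams -> kilograms


# Placeholder intensities, NOT real measurements.
intensity = {"region-coal-heavy": 700.0, "region-mixed": 350.0, "region-hydro": 30.0}
best = pick_greenest_region(intensity)
for region, g in intensity.items():
    print(region, job_emissions_kg(1000, g), "kg CO2e for a 1,000 kWh job")
print("greenest:", best)
```

The `allowed` set is where real-world constraints, such as data-residency rules or latency requirements, would narrow the choice.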

Time-of-Day Scheduling

Schedule training for hours when the grid's carbon intensity is lowest, for example when solar or wind output is high.

Real-time grid carbon intensity APIs (e.g., WattTime) can be used to automate this timing.
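Time-of-day scheduling boils down to a sliding-window search: given an hourly forecast of grid carbon intensity, find the start hour that minimizes the average intensity over the job's duration. The forecast list below is invented; fetching a real forecast (for example from a service such as WattTime) is not shown, and no particular API is assumed.

```python
def best_start_hour(forecast_g_per_kwh: list[float], duration_h: int) -> tuple[int, float]:
    """Return (start index, mean intensity) of the lowest-carbon contiguous window."""
    if not 0 < duration_h <= len(forecast_g_per_kwh):
        raise ValueError("duration must be between 1 and the forecast length")
    window = sum(forecast_g_per_kwh[:duration_h])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast_g_per_kwh) - duration_h + 1):
        # Slide the window one hour: add the new hour, drop the oldest.
        window += forecast_g_per_kwh[start + duration_h - 1] - forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start, best_sum / duration_h


# Invented hourly forecast (gCO2e/kWh), e.g. a midday solar dip.
forecast = [400, 380, 300, 150, 120, 140, 350, 420]
start, mean_g = best_start_hour(forecast, duration_h=3)
print(f"start at hour {start}, mean {mean_g:.1f} gCO2e/kWh")
```

This assumes the job must run in one contiguous block; a job that can be checkpointed and paused could instead run in the individually cleanest hours.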

Benchmarking Green AI: Beyond Accuracy

Traditional benchmarks (e.g., ImageNet accuracy) are no longer sufficient. New benchmarking includes:

Energy Efficiency Score (EES): Accuracy per unit energy.

GreenScore: Combines compute cost, memory usage, and carbon emissions.

MLPerf Power (by MLCommons): Measures power draw and energy efficiency alongside training and inference performance.

These metrics encourage researchers to submit low-emission models alongside state-of-the-art architectures.
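Taking the Energy Efficiency Score as described above (accuracy per unit energy), ranking models is a single division per model. The model names, accuracies, and energy figures below are invented for illustration, and using kWh as the energy unit is an assumption; published variants of such scores may normalize differently.

```python
from dataclasses import dataclass


@dataclass
class Result:
    name: str
    accuracy: float    # fraction in [0, 1]
    energy_kwh: float  # energy used (training or inference, as reported)


def energy_efficiency_score(r: Result) -> float:
    """Accuracy per kWh; higher is better."""
    return r.accuracy / r.energy_kwh


def rank_by_efficiency(results: list[Result], min_accuracy: float = 0.0) -> list[Result]:
    eligible = [r for r in results if r.accuracy >= min_accuracy]
    return sorted(eligible, key=energy_efficiency_score, reverse=True)


# Hypothetical results, not real benchmark numbers.
results = [
    Result("large-model", accuracy=0.91, energy_kwh=50.0),
    Result("distilled-model", accuracy=0.89, energy_kwh=8.0),
    Result("tiny-model", accuracy=0.70, energy_kwh=1.0),
]
for r in rank_by_efficiency(results, min_accuracy=0.85):
    print(f"{r.name}: {energy_efficiency_score(r):.4f} accuracy/kWh")
```

The `min_accuracy` floor matters: raw accuracy per unit energy favors tiny models even when they are not accurate enough to be useful.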

Green AI Research & Open Initiatives

Research Projects

AllenNLP Interpret: Offers interpretability without retraining massive models.

BigScience (coordinated by Hugging Face): A collaborative research workshop focused on transparent, open, and efficient large models such as BLOOM.

Open Datasets for Sustainable AI

ClimateNet: Deep learning-ready dataset for extreme weather classification.

TCO Benchmarks: Evaluates models based on Total Cost of Ownership and emissions.

Challenges to Achieving Green AI

Lack of transparency in reporting carbon footprints of models.

Profit-driven AI races often prioritize performance over sustainability.

Accessibility gap—smaller organizations may lack resources for Green AI practices.

Future of Green AI: A Call to Collective Responsibility

The future of AI doesn’t have to be one of unchecked emissions and waste. With better incentives, open research, and ethical standards, the industry can pivot toward a more balanced, greener future.

It’s not just about what AI can do. It’s about how responsibly we choose to use it.

FAQs

Q. Why is AI considered harmful to the environment?

AI models require high computational power, which often relies on fossil-fuel-generated electricity—leading to significant carbon emissions.

Q. Can AI ever be truly eco-friendly?

With advancements in efficient model design, green data centers, and renewable energy, AI can be significantly more sustainable.

Q. What is the difference between Green AI and Red AI?

Green AI prioritizes energy efficiency and environmental impact, while Red AI focuses purely on accuracy and performance.

Q. How can developers contribute to Green AI?

By optimizing code, using open-source pre-trained models, monitoring energy use, and selecting sustainable cloud providers.

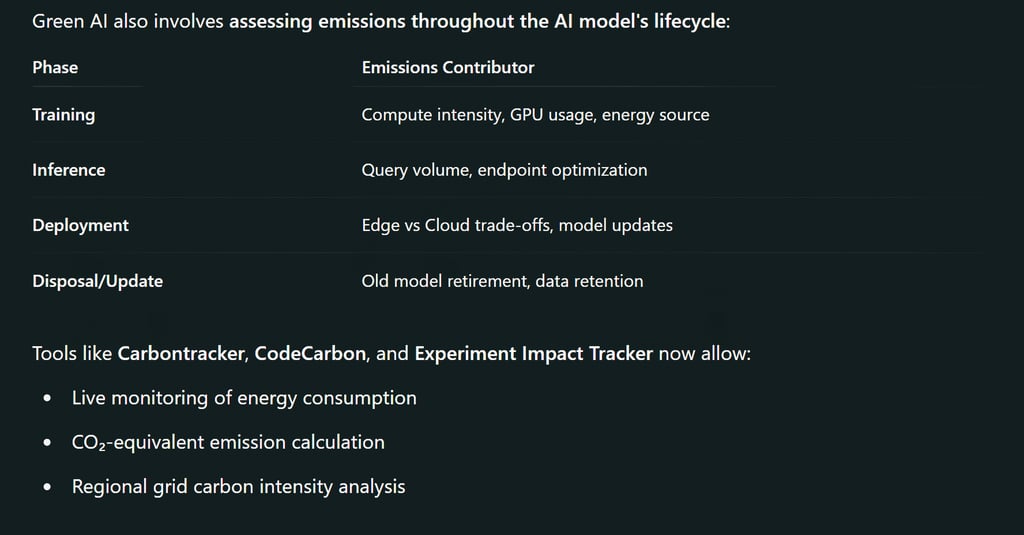

Q. Are there certifications or tools to measure AI’s carbon footprint?

Yes. Tools like ML CO2 Impact, Carbontracker, and CodeCarbon help monitor the energy and emissions impact of model training.

As artificial intelligence continues to revolutionize industries, Green AI offers a necessary compass guiding us toward a more ethical, sustainable future. By being mindful of the environmental costs, supporting energy-efficient practices, and embracing open science, we can ensure AI’s growth doesn’t come at the Earth’s expense.

Let’s build a future where intelligence isn’t just artificial—but consciously sustainable. 🌍


All © Copyright reserved by Accessible-Learning Hub
