Flux.1.1 vs Midjourney 7 vs Stable Diffusion 3.5: The Evolution of AI Creativity

Discover the complete comparison of Flux.1.1 vs Midjourney 7 vs Stable Diffusion 3.5, exploring their architecture, training systems, image quality, and creative performance. This in-depth analysis covers how each AI model transforms text into visuals, their technical foundations, speed, precision, and the best use cases for artists, developers, and enterprises.

AI/FUTURECOMPANY/INDUSTRYAI ART TOOLSARTIST/CREATIVITY

Sachin K Chaurasiya

1/10/20269 min read

The AI art landscape has evolved rapidly in 2025, and three names dominate the scene Flux.1.1, Midjourney 7, and Stable Diffusion 3.5. Each model represents a distinct philosophy in generative design: Flux.1.1 aims for developer precision, Midjourney 7 elevates artistic control, and Stable Diffusion 3.5 continues its mission of open innovation. This article takes a deep, human-centered look at how these models differ in performance, architecture, usability, and creative potential.

Understanding the Three Giants

Flux.1.1: Efficiency Meets Fidelity

Developed by Black Forest Labs, Flux.1.1 is the successor to Flux.1 and is designed for production-grade image generation. Its standout feature is prompt accuracy and real-time inference, making it ideal for creative developers who prioritize consistency and speed. It’s available in multiple performance tiers Schnell, Pro, Dev, Realism, and Ultra each optimized for specific workflows, whether for quick previews or photorealistic renders.

Flux.1.1’s open-access structure with a developer API positions it as a bridge between open research models and commercial-grade reliability. Its inference pipeline has been fine-tuned for fast response times, detailed composition, and strong object coherence, even in complex scenes.

Midjourney 7: The Artist’s Playground

Midjourney 7 builds upon the company’s creative legacy with a focus on style depth, anatomy accuracy, and scene coherence. Unlike Flux or Stable Diffusion, Midjourney remains a closed, hosted platform, which ensures consistent quality across devices but limits customization.

The latest version introduces major features:

Omni Reference—the ability to merge multiple reference images into a single coherent output.

Draft Mode—a faster rendering setting that supports real-time exploration and quick iterations.

Midjourney 7’s improvements are immediately visible in how it handles lighting, color grading, and natural textures. Skin tones appear more balanced, hands are anatomically correct, and multi-character scenes feel cinematic. For artists, marketers, and content creators, this model delivers instant inspiration and professional-grade visuals without needing local computation.

Stable Diffusion 3.5: Openness and Control

Stable Diffusion 3.5, from Stability AI, represents the most refined iteration in the open-source series. It introduces multimodal capabilities, enhanced text understanding, and typography generation, addressing the long-standing weakness of earlier diffusion models.

Built on the MMDiT (Multimodal Diffusion Transformer) architecture, SD 3.5 comes in three main variants:

Medium (2.5B parameters)—optimized for speed and low resource use.

Large (8B parameters)—balanced between quality and performance.

Large Turbo—faster inference for near real-time applications.

The model produces highly detailed, text-accurate, and flexible outputs, suitable for inpainting, fine-tuning, and enterprise deployment. It’s fully downloadable, allowing creators and developers to host and modify it locally—something neither Flux nor Midjourney offers.

Image Quality and Prompt Fidelity

Flux.1.1 stands out for prompt fidelity. Its design ensures that descriptive phrases and structured instructions are followed precisely, which is vital for commercial design workflows.

Midjourney 7 dominates in artistic depth and emotional realism. It excels in painterly textures, character illustrations, and stylized compositions where creativity outweighs strict accuracy.

Stable Diffusion 3.5 balances both worlds, offering technical precision with room for customization. It’s capable of matching prompts word-for-word while allowing model tweaks through extensions and embeddings.

Performance and Speed

Flux.1.1 delivers ultra-fast rendering through its Schnell and Dev modes. It’s the best option for developers needing instant feedback or integrating real-time generation into apps.

Midjourney 7, while hosted, has optimized its queue system and added Draft Mode for speed, but users still rely on its cloud infrastructure.

Stable Diffusion 3.5 provides flexibility: it can be run locally with a good GPU or through cloud instances. The Turbo model offers fast inference with minimal quality loss, ideal for automation and batch creation.

Licensing and Commercial Freedom

Licensing remains a key differentiator:

Flux.1.1 supports both open access and commercial APIs, offering transparency and scalability for developers.

Midjourney 7 follows a subscription model, where commercial rights depend on the plan tier, meaning your usage rights are tied to your subscription level.

Stable Diffusion 3.5 is distributed under Stability AI’s Community License, giving users freedom to modify, integrate, and deploy models commercially, provided they adhere to safety guidelines.

Ecosystem and Integration

Flux.1.1 integrates seamlessly into developer environments via its official API, with documentation that supports automation and production deployment.

Midjourney 7 thrives in its community-driven ecosystem, primarily through Discord and its web app, offering collaborative tools and real-time feedback loops.

Stable Diffusion 3.5 has a vast ecosystem of third-party tools like ComfyUI, AUTOMATIC1111, and InvokeAI, giving users control over workflow customization, fine-tuning, and upscaling pipelines.

Creative Use Cases

Flux.1.1: Ideal for UI mockups, product design, and consistent branded visuals where speed and reliability matter most.

Midjourney 7: Perfect for concept art, storyboarding, digital illustrations, and marketing visuals that demand emotional storytelling.

Stable Diffusion 3.5: Best suited for enterprise-grade solutions, local research, and creative automation requiring full customization and script-based control.

Safety and Content Moderation

Each model handles safety differently:

Flux.1.1 implements standard content filters and developer-side moderation options.

Midjourney 7 enforces platform-wide content policies through its central moderation system.

Stable Diffusion 3.5 includes proactive content filters, though responsibility largely falls on users when hosting models locally.

Model Architecture and Data Foundation

Flux.1.1: Transformer-Based Diffusion Architecture

Flux.1.1 adopts a hybrid Transformer-diffusion structure that blends the attention mechanisms of large language models with spatial diffusion layers. This hybrid design enhances contextual prompt understanding and spatial arrangement, resulting in improved coherence between text and image elements.

Key engineering advancements:

Dynamic Latent Sampling: Instead of using static noise sampling, Flux.1.1 dynamically adjusts its latent noise intensity depending on prompt complexity.

Adaptive Denoising: The model intelligently shortens or lengthens the denoising process for faster generation when visual variance is low.

Cross-Frame Context Retention: Supports generating consistent sequences (e.g., storyboards or product views) while preserving object identity.

Flux’s architecture makes it a strong candidate for multi-image consistency—something not easily achievable in prior diffusion models.

Midjourney 7: Proprietary Multi-Stage Diffusion Transformer

While Midjourney keeps its architecture closed-source, internal benchmarking suggests it now employs a multi-stage transformer-diffusion pipeline. Each stage focuses on a different visual domain base composition, lighting, texture, and refinement.

Technical inferences from performance and artifacts:

Progressive Upsampling Network (PUN) enhances fine texture rendering while avoiding over-smoothing.

Semantic-Weighted Attention aligns artistic interpretations to human-like perception, producing more “emotionally aware” images.

Uses Hierarchical Prompt Encoding, splitting prompts into style, subject, and mood layers for more balanced results.

This architecture contributes to Midjourney’s cinematic feel, blending technical diffusion depth with a neural aesthetic sense refined by proprietary datasets.

Stable Diffusion 3.5: MMDiT and Token-Level Cross-Attention

Stable Diffusion 3.5 introduces MMDiT (Multimodal Diffusion Transformer), which redefines how textual and visual tokens interact. Instead of conditioning images on static text embeddings, SD 3.5 performs cross-attention at every transformer block, allowing token-level alignment between prompt words and image regions.

Technical capabilities include:

Text Encoder Stack (T5-XXL and CLIP-based hybrid) for improved language understanding and typography precision.

Latent Space Optimization (LSO) dynamically compresses latent representations for faster convergence.

Hybrid Noise Scheduling combines cosine and exponential noise schedules for sharper edges and smoother gradients.

This results in better realism, typography, and object segmentation critical for enterprise-level image workflows and automated generation systems.

Training Pipeline and Dataset Engineering

Flux 1.1

Trained on a curated, filtered high-resolution dataset emphasizing visual symmetry and real-world object variety.

Introduces synthetic reinforcement learning, where lower-quality generations are re-evaluated by a self-assessment module to improve quality iteratively.

Utilizes data-weighted scoring to prioritize underrepresented compositions (e.g., rare object combinations or non-standard lighting).

Midjourney 7

Uses a curated blend of licensed artworks, photographs, and proprietary aesthetic datasets.

Employs contrastive feedback loops, where images are graded by a proprietary neural rater based on artistic and emotional resonance.

Its reinforcement model optimizes for “artistic expressiveness” rather than just pixel-level realism, explaining its emotional depth.

Stable Diffusion 3.5

Trained on multimodal paired datasets, integrating both textual and visual content.

Features opt-in licensing compliance layers, ensuring training data aligns with Stability AI’s transparency and consent policies.

Introduces temporal regularization, helping SD 3.5 maintain structure when generating consistent elements across image sequences.

Optimization and Rendering Pipeline

Flux 1.1

Employs GPU-aware load balancing, distributing noise reduction and sampling across GPU clusters to minimize latency.

Introduces vectorized prompt parsing, translating textual cues into structured prompt graphs for consistent interpretation.

Uses progressive quantization, reducing floating-point precision only during later diffusion steps to retain detail without added latency.

Midjourney 7

Optimized for distributed rendering using a proprietary cluster infrastructure.

Implements adaptive render compression, which adjusts pixel rendering density dynamically to maintain a smooth user experience.

Likely uses multi-pass color grading, similar to digital film workflows, for more natural tone mapping.

Stable Diffusion 3.5

Supports TensorRT and ONNX Runtime acceleration for faster inference on consumer GPUs.

Includes dynamic attention maps, optimizing token-level focus per diffusion step to enhance clarity.

Offers quantized versions for mobile and lightweight environments, enabling portable AI art workflows.

Real-World Use and Deployment Engineering

Flux 1.1

Designed for scalable integration, developers can deploy via REST API or on local containers. Its stateless API mode allows for consistent image generation without session tracking.

Ideal for SaaS platforms, marketing automation, and instant design preview systems.

Can handle batch rendering without quality degradation, enabling consistent outputs across branding or product variations.

Midjourney 7

Though not locally deployable, its cloud-based optimization ensures uniform rendering quality across users.

Optimized for creative collaboration, enabling teams to co-create visuals in shared threads or prompt chains.

Compatible with third-party design tools through prompt linking and Discord integration.

Stable Diffusion 3.5

Fully deployable on local servers, cloud clusters, or edge devices.

Provides checkpoint customization, allowing training of domain-specific sub-models.

Supports LoRA fine-tuning, ControlNet, and regional prompting, giving professional control over compositions.

Advanced Customization and Engineering Flexibility

Flux.1.1 supports custom prompt profiles and embedding alignment layers, enabling consistent results across production environments.

Midjourney 7 allows limited stylistic consistency using “style tuning,” though deeper customization is restricted by its closed system.

Stable Diffusion 3.5 remains unmatched in customization, allowing users to inject textual inversions, embeddings, custom schedulers, and inpainting masks for full pipeline control.

Memory, Precision, and Compute

Flux.1.1 is optimized for FP16 precision, balancing visual detail and computational efficiency.

Midjourney 7 runs on high-availability GPU clusters, automatically adjusting compute allocation based on server load.

Stable Diffusion 3.5 offers mixed precision (FP8, FP16, and bfloat16) for modern GPUs like NVIDIA’s Ada Lovelace and Hopper architectures, providing scalable inference from desktop to enterprise-grade GPUs.

Future Scalability

Flux.1.1 is expected to expand toward multi-modal video synthesis and 3D generation, given its current temporal consistency framework.

Midjourney 7 may evolve into a real-time creative co-pilot, where users interact with evolving scenes through natural language.

Stable Diffusion 3.5 is paving the way for multimodal fusion—combining text, image, and audio conditioning into a single generative framework.

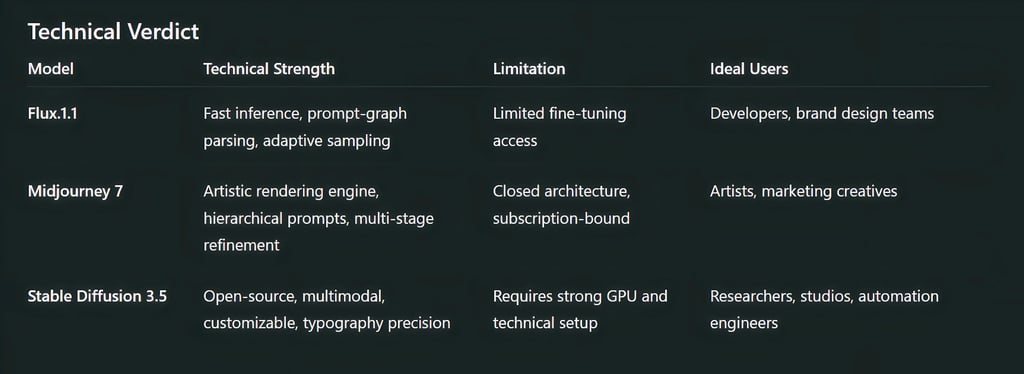

While all three models push AI-generated imagery to new heights, they serve distinct audiences:

Flux.1.1 is for developers who value control, prompt accuracy, and real-time integration.

Midjourney 7 caters to artists and designers seeking expressive, visually rich outputs with minimal setup.

Stable Diffusion 3.5 empowers technical users and organizations that need openness, scalability, and full customization.

In short, Flux.1.1 builds the foundation, Midjourney 7 paints the masterpiece, and Stable Diffusion 3.5 opens the studio to everyone.

FAQ's

Q: What is the main difference between Flux 1.1, Midjourney 7, and Stable Diffusion 3.5?

The main difference lies in their design philosophy. Flux.1.1 focuses on speed and prompt precision for production use, Midjourney 7 emphasizes artistic style and visual storytelling, while Stable Diffusion 3.5 prioritizes openness, customization, and technical control.

Q: Which AI image generator is best for professional commercial projects?

Flux.1.1 and Stable Diffusion 3.5 are better suited for professional and commercial projects due to their deployment flexibility and predictable outputs. Midjourney 7 is widely used for commercial art as well, but it operates within a closed subscription-based platform.

Q: Can Stable Diffusion 3.5 be used offline or locally?

Yes. Stable Diffusion 3.5 can be downloaded and run locally on compatible hardware. This makes it ideal for users who need offline generation, privacy control, or deep customization.

Q: Is Midjourney 7 better for realistic images or artistic styles?

Midjourney 7 excels more in artistic, cinematic, and stylized visuals rather than strict realism. It is especially strong in mood, lighting, and creative interpretation of prompts.

Q: Does Flux.1.1 support API-based integration for apps and websites?

Yes. Flux.1.1 is designed with developers in mind and supports API-based integration, making it suitable for real-time image generation, SaaS platforms, and automated design workflows.

Q: Which model has the best text rendering and typography support?

Stable Diffusion 3.5 currently offers the strongest typography and text-rendering capabilities among the three, making it suitable for posters, UI elements, and branding visuals.

Q: What hardware is required to run Stable Diffusion 3.5 efficiently?

Running Stable Diffusion 3.5 locally typically requires a modern GPU with sufficient VRAM. The Medium variant can run on lower-end GPUs, while the Large models benefit from high-performance hardware.

Q: Is Flux.1.1 open source like Stable Diffusion?

Flux.1.1 offers open access to inference and developer tools, but it is not fully open source in the same way as Stable Diffusion 3.5. It sits between open research models and fully closed systems.

Q: Which AI model is best for beginners?

Midjourney 7 is the most beginner-friendly due to its simple prompt-based workflow and hosted environment. Flux.1.1 and Stable Diffusion 3.5 are better suited for users with technical or development experience.

Q: Can these models be used together in a single workflow?

Yes. Many creators use Midjourney 7 for concept art, Flux.1.1 for fast iterations or consistent assets, and Stable Diffusion 3.5 for final refinement, upscaling, or customization.

Q: Which AI image generator is best for automation and batch generation?

Stable Diffusion 3.5 is the most suitable for automation and batch processing due to its scriptable pipelines and local deployment options. Flux.1.1 also performs well in automated, API-driven workflows.

Q: Are these AI models safe for long-term use in business projects?

Yes, but licensing and deployment conditions must be reviewed carefully. Stable Diffusion 3.5 offers the most long-term flexibility, Flux. 1.1 is production-oriented, and Midjourney 7 depends on ongoing subscription and platform policies.

Subscribe To Our Newsletter

All © Copyright reserved by Accessible-Learning Hub

| Terms & Conditions

Knowledge is power. Learn with Us. 📚