Enterprise AI Decision Guide: Comparing DeepSeek, ChatGPT, Claude, and Gemini for Business Applications

This comprehensive analysis examines the four leading AI language models dominating the market in 2025. From technical architecture and performance benchmarks to real-world applications and implementation considerations, this guide provides decision-makers, developers, and organizations with the detailed insights needed to navigate the complex AI assistant landscape. Whether you're evaluating these systems for enterprise deployment, development integration, or specialized applications, our in-depth comparison reveals the distinct advantages and limitations of each platform.


Sachin K Chaurasiya

3/14/2025 | 9 min read

The landscape of large language models (LLMs) has evolved dramatically, with DeepSeek, ChatGPT, Claude, and Gemini emerging as leading AI assistants. This analysis delves deeply into their architectural foundations, technical capabilities, performance metrics, and practical applications to provide a thorough understanding of their relative strengths and limitations. By examining both the underlying technology and the user-facing features, this comparison offers valuable insights for technical decision-makers, developers, researchers, and organizations seeking to leverage these powerful AI tools.

Technical Architecture and Model Foundations

DeepSeek: Architecture and Development

DeepSeek's models are built on a transformer-based architecture with several innovative modifications:

DeepSeek LLM: The foundational model uses a decoder-only transformer architecture similar to GPT models; the 67B version replaces standard multi-head attention with grouped-query attention (GQA) to reduce inference memory. The original release came in 7-billion- and 67-billion-parameter sizes, and its chat variants were aligned with supervised fine-tuning (SFT) followed by Direct Preference Optimization (DPO). DeepSeek's newer flagship, DeepSeek-V3, is a Mixture-of-Experts (MoE) model with 671 billion total parameters, of which about 37 billion are activated per token.
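The preference-alignment step can be made concrete. DeepSeek's LLM paper reports aligning its chat models with Direct Preference Optimization (DPO), which replaces the separate reward model and PPO loop of classic RLHF with a single classification-style loss on pairs of preferred and rejected responses. The sketch below implements the standard per-pair DPO loss from Rafailov et al. (2023); the function name, the example log-probabilities, and the default beta = 0.1 are illustrative assumptions, not DeepSeek's training settings.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for a single preference pair (chosen vs. rejected response).

    Each *_logp argument is the summed log-probability of a whole response,
    under either the policy being trained or the frozen reference (SFT) model.
    """
    # How much more the policy favors the chosen response than the reference
    # does, minus the same quantity for the rejected response.
    margin = ((policy_chosen_logp - ref_chosen_logp)
              - (policy_rejected_logp - ref_rejected_logp))
    z = beta * margin
    # -log(sigmoid(z)), computed stably for both signs of z.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


if __name__ == "__main__":
    # Policy and reference agree: the loss is log(2).
    print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
    # Policy shifts probability toward the chosen response: the loss drops.
    print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```

In training, this loss is averaged over a batch of preference pairs; its gradient raises the likelihood of chosen responses relative to rejected ones, while beta controls how far the policy may drift from the reference model.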

DeepSeek Coder: This code-specialized model family was trained from scratch on 2 trillion tokens, roughly 87% source code and 13% natural language, using repository-level data and a fill-in-the-middle objective to capture code structure. The original models support a 16,000-token context window; DeepSeek-Coder-V2 extends this to 128,000 tokens, enough to hold large portions of a codebase in a single prompt.

Training Data: DeepSeek's training corpus includes a balanced mixture of Chinese and English content, with particular emphasis on scientific and technical literature. This gives the model distinctive strengths in technical domains and multilingual capabilities, especially for Chinese-English applications.

ChatGPT: OpenAI's Evolving Architecture

ChatGPT's technical foundation has evolved through multiple iterations:

GPT-4o: OpenAI's flagship multimodal model processes text, images, and audio natively within a single network rather than through separate pipelines. OpenAI has not disclosed its parameter count or architecture; outside estimates of more than 1 trillion parameters in a Mixture-of-Experts (MoE) design are unconfirmed.

Training Methodology: OpenAI employs a multi-stage training process:

Pre-training on a diverse corpus of internet text, books, and code

Supervised fine-tuning (SFT) using human-generated demonstrations

Reinforcement Learning from Human Feedback (RLHF) to align with human preferences

Safety-focused training, including rule-based reward models (RBRMs) that supply additional reward signals during RLHF

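Technical Innovations: OpenAI has not published GPT-4o's internals, so the following are reported characteristics rather than documented architecture: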

Specialized transformer blocks optimized for different types of reasoning

Enhanced retrieval-augmented generation (RAG) capabilities

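Token-level log probabilities exposed through the API (the logprobs and top_logprobs parameters), which applications can use for uncertainty estimation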

Advanced prompt compression techniques allowing more efficient context utilization
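Token-level uncertainty is the one item on this list developers can inspect directly: the Chat Completions API returns per-token log probabilities when a request sets logprobs to true and top_logprobs to a small integer. The sketch below assumes the response's choices[0].logprobs.content list has already been parsed into Python dicts; the helper names and the 0.5 probability threshold are hypothetical choices for illustration.

```python
import math


def token_uncertainty(content: list[dict]) -> list[tuple[str, float, float]]:
    """Return (token, probability, entropy_in_bits) for each generated token.

    `content` is assumed to mirror choices[0].logprobs.content from a Chat
    Completions response requested with logprobs=True and top_logprobs > 0.
    Entropy is computed over the returned top alternatives only (renormalized),
    so it approximates, rather than equals, the full distribution's entropy.
    """
    results = []
    for item in content:
        probs = [math.exp(alt["logprob"]) for alt in item.get("top_logprobs", [])]
        total = sum(probs)
        entropy = 0.0
        if total > 0:
            entropy = -sum((p / total) * math.log2(p / total) for p in probs if p > 0)
        results.append((item["token"], math.exp(item["logprob"]), entropy))
    return results


def low_confidence_tokens(content: list[dict], min_prob: float = 0.5) -> list[str]:
    """Tokens the model emitted with probability below `min_prob`."""
    return [tok for tok, prob, _ in token_uncertainty(content) if prob < min_prob]
```

Flagged tokens are candidates for follow-up, such as asking the model to verify a specific date or number, rather than proof that the output is wrong.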

Claude: Anthropic's Constitutional Approach

Claude models implement several architectural innovations:

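Constitutional AI Framework: Constitutional AI is a training method rather than an architectural component. A written set of principles (the "constitution") guides a supervised phase in which the model critiques and revises its own outputs, followed by a reinforcement learning phase that uses AI-generated preference labels (reinforcement learning from AI feedback, or RLAIF). The resulting behavior is embedded in the model weights rather than enforced by a separate module at inference time.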
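The supervised phase of Constitutional AI, as described in Anthropic's Constitutional AI paper (Bai et al., 2022), can be sketched as a draft, critique, and revise loop. Here `generate` is a hypothetical callable standing in for any model call, the prompt wording is illustrative rather than Anthropic's, and principles are cycled in order where the paper samples them at random; the later RLAIF phase is not shown.

```python
from typing import Callable

# Hypothetical stand-in for any text-generation call (prompt in, text out).
Generate = Callable[[str], str]


def critique_and_revise(generate: Generate, prompt: str,
                        principles: list[str], rounds: int = 2) -> str:
    """Supervised phase of Constitutional AI: draft, critique, revise.

    In the published method, the final revisions become fine-tuning data;
    the prompt templates here are illustrative only.
    """
    response = generate(prompt)
    for i in range(rounds):
        principle = principles[i % len(principles)]  # cycle through principles
        critique = generate(
            f"Prompt: {prompt}\nResponse: {response}\n"
            f"Identify ways the response conflicts with this principle: {principle}"
        )
        response = generate(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response so it addresses the critique."
        )
    return response
```

Each round costs two extra model calls (one critique, one revision), which is why this step runs offline to build training data rather than on every user request.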

Parameter Scale: Anthropic does not disclose parameter counts for Claude 3.7 Sonnet or the Opus variant; commonly cited figures of about 150 billion and more than 300 billion parameters, respectively, are unverified estimates.

Training Methodology: Anthropic employs a distinctive training approach:

Initial pre-training on a curated corpus with content filtering

Constitutional AI training: self-critique and revision against written principles, followed by reinforcement learning from AI feedback (RLAIF)

Iterative refinement through preference modeling

Specialized training for reasoning capabilities

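Technical Differentiators: Apart from the context window, the following describe observed behavior and design goals; Anthropic has not published them as discrete architectural components: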

Specialized modules for uncertainty representation and calibration

Advanced reasoning circuits designed for step-by-step problem solving

Enhanced safety mechanisms with multiple redundant systems

Context window optimization allowing for processing up to 200,000 tokens

Gemini: Google's Multimodal Foundation

Gemini represents Google's most advanced AI architecture:

Native Multimodality: Unlike models that add multimodal capabilities as extensions, Gemini was designed from the ground up to process diverse data types. The architecture incorporates specialized encoders for different modalities that share a common representation space.

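Architectural Scale: Google has not disclosed parameter counts for Gemini Ultra, the most capable Gemini 1.0 variant, or for later models, so figures such as 1 trillion+ parameters are estimates. The Gemini 1.5 technical report describes a sparse Mixture-of-Experts (MoE) Transformer: a learned router sends each token to a small subset of expert sub-networks, so only a fraction of the total parameters is active for any given token.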
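Top-k routing, the core of a sparse MoE layer, fits in a few lines. This is a generic top-k gating sketch with toy dimensions, expert functions, and router weights chosen for illustration; it is not Google's implementation, whose router and expert details are not public.

```python
import math
from typing import Callable

Vector = list[float]


def top_k_route(router_logits: Vector, k: int = 2) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts for one token.

    Returns (expert_index, gate_weight) pairs; the gate weights are a softmax
    over the selected experts only, so they sum to 1.
    """
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in top]


def moe_layer(token: Vector, experts: list[Callable[[Vector], Vector]],
              router_weights: list[Vector], k: int = 2) -> Vector:
    """Apply a sparse MoE layer to a single token vector."""
    # Router: one linear score per expert.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    out = [0.0] * len(token)
    # Only the k selected experts run; the rest are skipped entirely.
    for idx, gate in top_k_route(logits, k):
        y = experts[idx](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out
```

The compute saving comes from the skipped experts: with k = 2 of, say, 16 experts, each token pays for two expert evaluations while the model still stores all 16.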

Training Infrastructure: Google's TPU v4 and v5 systems provide the computational foundation for Gemini, allowing for training on multimodal datasets at an unprecedented scale. The training process leverages DeepMind's reinforcement learning expertise combined with Google's vast data resources.

Technical Innovations: Key advancements include:

Unified representation space across modalities

Specialized transformer variants optimized for different data types

Advanced chain-of-thought mechanisms for complex reasoning

Integration with Google's knowledge graph and retrieval systems

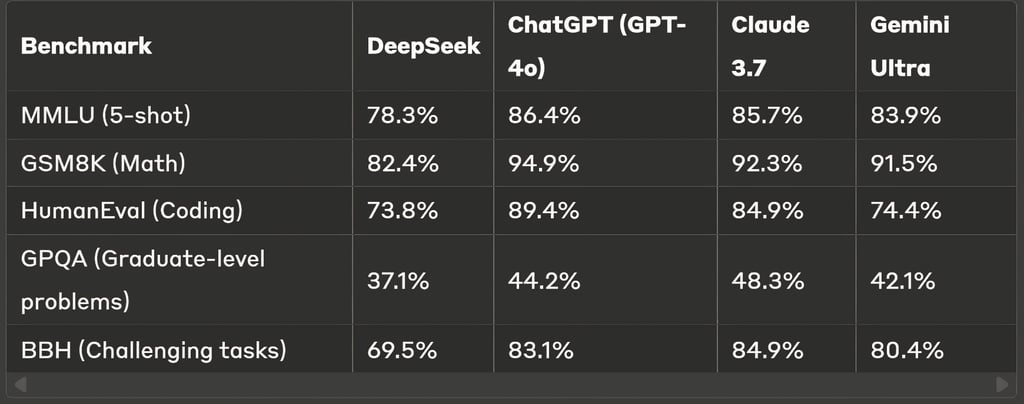

Quantitative Performance Analysis

Benchmark Comparisons

Recent benchmark evaluations provide quantitative insights into relative performance:

Specialized Capability Analysis

Code Generation

DeepSeek Coder outperforms general models on specialized programming tasks, particularly in algorithm implementation and optimization

ChatGPT exhibits strong performance across diverse programming languages, with particular strength in web development

Claude demonstrates exceptional accuracy in following complex technical specifications

Gemini shows strength in explaining and documenting code but may lag in raw implementation speed

Multimodal Understanding

DeepSeek's multimodal capabilities remain more limited, scoring approximately 68% on standard visual reasoning benchmarks

ChatGPT achieves approximately 85% accuracy on complex visual reasoning tasks

Claude demonstrates 83% accuracy on document understanding tasks with strong performance on complex diagrams and charts

Gemini leads with 89% accuracy on multimodal tasks requiring integration of visual and textual information

Long-Context Reasoning

DeepSeek maintains 76% retrieval accuracy at 64K tokens

ChatGPT achieves 82% retrieval accuracy at 128K tokens

Claude demonstrates 88% retrieval accuracy at 200K tokens

Gemini shows 80% retrieval accuracy at 128K tokens
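Retrieval figures like these typically come from "needle in a haystack" tests: a known fact is planted at varying depths in long filler text, and the model is asked to recover it. The article does not name the harness behind its numbers, so the following is only a minimal sketch of the method; `ask` is a hypothetical model wrapper, and a real test would scale the filler to the target token count (64K, 128K, 200K) rather than to a fixed sentence count.

```python
from typing import Callable

# Hypothetical model wrapper: (context, question) -> answer text.
Ask = Callable[[str, str], str]


def build_haystack(filler: str, needle: str, n_filler: int, depth: float) -> str:
    """Insert `needle` at a relative depth (0.0 = start, 1.0 = end) among
    n_filler copies of a filler sentence."""
    sentences = [filler] * n_filler
    sentences.insert(round(depth * n_filler), needle)
    return " ".join(sentences)


def retrieval_accuracy(ask: Ask, needle: str, answer: str, question: str,
                       filler: str, n_filler: int, depths: list[float]) -> float:
    """Fraction of needle depths at which the model's reply contains `answer`."""
    hits = 0
    for depth in depths:
        context = build_haystack(filler, needle, n_filler, depth)
        reply = ask(context, question)
        hits += answer.lower() in reply.lower()
    return hits / len(depths)
```

Reporting accuracy per depth, not just the average, shows where in the window a model starts missing facts, which a single percentage hides.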

System Architecture and Integration Capabilities

API and Development Infrastructure

DeepSeek

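OpenAI-compatible REST API, usable from existing OpenAI client libraries by changing the base URL

On-premises deployment by serving the open weights with third-party inference engines such as vLLM or SGLang

Open model weights for most variants, including DeepSeek-V3 and DeepSeek-R1

No hosted fine-tuning API; the open weights can be fine-tuned with standard techniques such as LoRA and quantized LoRA (QLoRA)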
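Because DeepSeek's hosted API follows the OpenAI chat completions format, a request needs only a different base URL. DeepSeek's documentation shows this with the OpenAI Python SDK; the standard-library sketch below builds the same request by hand. The base URL https://api.deepseek.com and the model name deepseek-chat come from DeepSeek's public documentation and should be checked against the current docs; the function names are hypothetical.

```python
import json
import urllib.request

# Base URL from DeepSeek's API documentation; the path is the OpenAI-style one.
DEEPSEEK_BASE_URL = "https://api.deepseek.com"


def build_chat_request(api_key: str, messages: list[dict],
                       model: str = "deepseek-chat",
                       base_url: str = DEEPSEEK_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = json.dumps({"model": model, "messages": messages, "stream": False})
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Passing base_url="http://localhost:8000/v1" points the same function at a self-hosted model behind vLLM's OpenAI-compatible server (its default address), so client code does not change when moving between the cloud API and on-premises deployment.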

ChatGPT

Comprehensive REST API with extensive documentation

Function calling capabilities with structured JSON outputs

Assistants API for stateful applications, with persistent conversation threads

Fine-tuning options with hyperparameter optimization
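Function calling works as a round trip: the application declares tools with JSON Schema parameters, the model replies with tool calls whose arguments arrive as a JSON string, and the application executes them and returns the results as messages with role "tool". The sketch below handles the application side of that loop; get_weather and its return value are hypothetical, and the message shapes follow the Chat Completions tools format.

```python
import json
from typing import Any, Callable

# Tool definition in the Chat Completions "tools" format (parameters are JSON Schema).
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def get_weather(city: str) -> dict:
    """Hypothetical local tool; a real one would call a weather service."""
    return {"city": city, "temp_c": 21}


TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Run each requested tool and build the role="tool" reply messages."""
    replies = []
    for call in tool_calls:
        name = call["function"]["name"]
        if name not in TOOLS:
            raise ValueError(f"model requested unknown tool: {name}")
        # The model returns arguments as a JSON-encoded string, not an object.
        args = json.loads(call["function"]["arguments"])
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(TOOLS[name](**args)),
        })
    return replies
```

In a full exchange, WEATHER_TOOL goes in the request's tools list; the assistant message containing tool_calls is appended to the conversation, followed by the replies from dispatch_tool_calls, before the next request.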

Claude

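REST API (the Messages API) with a simplified parameter structure

Streaming response capabilities

Artifacts for generating standalone content such as code and documents (a feature of the Claude.ai app rather than the API)

Tool use (function calling) with JSON Schema tool definitions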

Gemini

Integration through Google Cloud Vertex AI

Access through the Gemini API in Google AI Studio, which replaced the earlier PaLM API

Advanced rate limiting and traffic management

Enterprise-grade security and compliance controls
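Whatever the provider, server-side rate limits reach clients as HTTP 429 responses, and the usual client-side answer is to retry with exponential backoff and jitter. This is a provider-agnostic sketch: RateLimitError is a hypothetical placeholder for whichever exception your SDK raises, and the delay constants are illustrative.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider SDK's HTTP 429 exception."""


def with_backoff(call: Callable[[], T], max_retries: int = 5,
                 base_delay: float = 1.0, max_delay: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep,
                 rng: Optional[random.Random] = None) -> T:
    """Retry `call` on RateLimitError using exponential backoff with full jitter."""
    rng = rng or random.Random()
    attempt = 0
    while True:
        try:
            return call()
        except RateLimitError:
            if attempt >= max_retries:
                raise  # give up and surface the last error
            # The delay cap doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # the actual wait is uniform in [0, cap] so clients do not retry in lockstep.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0.0, cap))
            attempt += 1
```

The sleep and rng parameters exist so the retry schedule can be tested deterministically; in production the defaults apply.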

System Requirements and Deployment Options

DeepSeek

Cloud API: Standard HTTP client with TLS 1.2+

Self-hosting (7B variant): Minimum 16GB GPU VRAM, 32GB system RAM

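Self-hosting (67B variant): Roughly 140GB of GPU VRAM at 16-bit precision (the weights alone occupy about 134GB), distributed across multiple GPUs; 8-bit quantization brings this to about 80GB; 128GB system RAM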

Quantized deployments available, reducing requirements by up to 75%
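These memory figures follow from simple arithmetic: weight memory is the parameter count times the bytes per parameter. The sketch below assumes weights dominate and adds a flat allowance (20%, an arbitrary assumption) for KV cache, activations, and runtime buffers; real overhead depends on context length, batch size, and the serving engine. It shows that DeepSeek LLM 67B needs about 134 GB for 16-bit weights alone, and that 4-bit quantization saves 75% of weight memory compared with 16-bit.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 10**9 bytes)."""
    # (params_billion * 1e9 params) * (bits / 8 bytes) / 1e9 bytes per GB
    return params_billion * bits_per_param / 8


def serving_memory_gb(params_billion: float, bits_per_param: float,
                      overhead: float = 0.2) -> float:
    """Weights plus an assumed fractional allowance for KV cache,
    activations, and framework buffers (20% by default, a rough guess)."""
    return weight_memory_gb(params_billion, bits_per_param) * (1 + overhead)


if __name__ == "__main__":
    for name, size in [("DeepSeek LLM 7B", 7), ("DeepSeek LLM 67B", 67)]:
        for bits in (16, 8, 4):
            print(f"{name} at {bits}-bit: {weight_memory_gb(size, bits):6.1f} GB weights, "
                  f"~{serving_memory_gb(size, bits):6.1f} GB to serve")
```

Practical 4-bit formats also store per-group scale factors, so real quantized checkpoints come out slightly larger than the idealized figure.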

ChatGPT

Cloud API: Standard HTTP client with TLS 1.2+

No self-hosting options for full models

Azure OpenAI Service provides dedicated enterprise deployment

Inference hardware requirements are not published; the model runs only on OpenAI and Microsoft Azure infrastructure

Claude

Cloud API: Standard HTTP client with TLS 1.2+

No self-hosting options are currently available

Enterprise-dedicated endpoints with SLA guarantees

Inference hardware requirements are not published; the model runs only as a hosted service (Anthropic's API, Amazon Bedrock, or Google Cloud Vertex AI)

Gemini

Cloud API: Google Cloud authentication

Served on Google's TPU infrastructure; no specialized hardware is required on the customer side

Enterprise deployment through Vertex AI with autoscaling

Inference hardware requirements are not published; serving capacity is managed by Google Cloud

Advanced Technical Capabilities

Reasoning and Cognitive Architecture

DeepSeek

Explicit chain-of-thought prompting with structured intermediate steps

Strong performance on mathematical reasoning requiring symbol manipulation

Limited metacognitive capabilities for uncertainty representation

Specialized modules for code reasoning with abstract syntax tree (AST) analysis

ChatGPT

Integrated chain-of-thought mechanisms without requiring explicit prompting

Uncertainty handling that balances conservative answers with more exploratory ones

Sophisticated error detection and recovery pathways

Multi-step reasoning cache to maintain coherence across complex problems

Claude

Behavior shaped by Constitutional AI training against written principles (applied during training rather than as a runtime filter)

Tendency to state uncertainty explicitly in natural language; the API does not expose token-level confidence scores

Advanced metacognitive capabilities for detecting reasoning flaws

Specialized verification procedures for factual claims

Gemini

Multimodal reasoning pathways that integrate information across modalities

Knowledge graph augmentation for entity-centric reasoning

Sophisticated decomposition strategies for complex problems

Retrieval-augmented reasoning for knowledge-intensive tasks

Memory and Context Management

DeepSeek

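Context window of up to 128K tokens; Multi-head Latent Attention (MLA), introduced in DeepSeek-V2 and used in DeepSeek-V3, compresses the key-value cache to cut memory use at long context lengths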

Limited native memory management between sessions

Approximate token usage: 1K tokens ≈ 750 words of English text

Context retention degradation of approximately 15% at maximum window length
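The 750-words-per-1K-tokens rule of thumb above is enough for a quick pre-flight check before sending a long document. This sketch assumes English, whitespace-separated text (the heuristic does not apply to Chinese, which is not space-delimited) and an arbitrary 4,096-token reserve for the model's reply; use the provider's tokenizer when exact counts matter.

```python
import math

# Rule of thumb for English text: 1K tokens ≈ 750 words. Real counts depend on
# the tokenizer and language; use the provider's tokenizer for exact numbers.
WORDS_PER_1K_TOKENS = 750


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count (English only)."""
    words = len(text.split())
    return math.ceil(words * 1000 / WORDS_PER_1K_TOKENS)


def fits_in_context(text: str, context_window: int,
                    reserve_for_output: int = 4096) -> bool:
    """True if the estimated prompt plus reserved output tokens fits the window."""
    return estimate_tokens(text) + reserve_for_output <= context_window
```

For example, a 90,000-word document estimates to 120,000 tokens, which fits in a 128,000-token window with the default reserve but not in a 120,000-token one.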

ChatGPT

Context window of up to 128K tokens

Conversational memory through the Assistants API

Sophisticated compression algorithms reduce token usage by up to 30%

Context retention with less than 10% degradation at maximum window length

Claude

Context window of up to 200K tokens

Advanced document chunking and reference tracking

Hierarchical attention mechanisms for efficient processing of long contexts

Context retention with less than 5% degradation at maximum window length

Gemini

Context window of up to 1 million tokens (up to 2 million for Gemini 1.5 Pro)

Integration with external memory systems through Vertex AI

Specialized encoding for efficient representation of structured data

Context retention with approximately 8% degradation at maximum window length

Technical Tradeoffs and Limitations

DeepSeek

Multimodal capabilities remain significantly behind text performance

Higher latency for non-Asian languages (average 1.2x slower for European languages)

Limited fine-tuning options for specialized domains

Knowledge cutoff creating significant limitations for recent information

ChatGPT

Tendency toward overconfidence in uncertain domains

Higher computational requirements leading to increased inference costs

More significant performance degradation under high load conditions

Limited transparency regarding training data composition

Claude

More conservative responses in ambiguous scenarios

Higher latency for certain types of creative generation tasks

Less robust performance for non-English languages

More restricted API capabilities compared to alternatives

Gemini

Tighter integration with Google ecosystem, creating potential vendor lock-in

Higher computational requirements for multimodal processing

More complex deployment architecture for enterprise scenarios

Less accessible self-hosting options for organizations with sovereignty requirements

Implementation and Development Considerations

Programming Language Support

DeepSeek Coder

Exceptional performance: Python, C++, Java, Rust

Strong performance: JavaScript, TypeScript, Go, C#, PHP

Moderate performance: Ruby, Swift, Kotlin, Scala

Limited performance: Haskell, Clojure, COBOL

ChatGPT

Exceptional performance: Python, JavaScript, TypeScript, Ruby

Strong performance: Java, C#, Go, PHP, Swift

Moderate performance: C++, Rust, Kotlin, Scala

Limited performance: Haskell, Clojure, Assembly

Claude

Exceptional performance: Python, JavaScript, Ruby, PHP

Strong performance: Java, C#, TypeScript, Go

Moderate performance: C++, Rust, Swift, Kotlin

Limited performance: Assembly, COBOL, Fortran

Gemini

Exceptional performance: Python, JavaScript, Java, Go

Strong performance: C++, C#, TypeScript, Kotlin

Moderate performance: Swift, Ruby, PHP, Rust

Limited performance: Assembly, COBOL, Fortran

Latency and Performance Characteristics

DeepSeek

Average token generation: 15-30 tokens/second

First token latency: 0.5-1.2 seconds

Multimodal processing additional latency: 1.5-3 seconds

Cold start latency: 2-5 seconds

ChatGPT

Average token generation: 20-40 tokens/second

First token latency: 0.3-0.8 seconds

Multimodal processing additional latency: 1-2 seconds

Cold start latency: 1-3 seconds

Claude

Average token generation: 25-45 tokens/second

First token latency: 0.4-0.9 seconds

Multimodal processing additional latency: 1-2.5 seconds

Cold start latency: 1.5-4 seconds

Gemini

Average token generation: 20-35 tokens/second

First token latency: 0.4-1 second

Multimodal processing additional latency: 0.8-1.8 seconds

Cold start latency: 1.5-3.5 seconds
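Figures like these vary with region, load, and prompt length, so it is worth reproducing them for your own workload by timestamping a streaming response. In the sketch below, first-token latency is measured from the moment consumption of the stream starts, and throughput counts the chunks after the first. It treats each streamed chunk as one token, which is an assumption: providers may pack several tokens into a chunk, so use the reported usage counts for exact totals. The function names are hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Iterable


@dataclass
class StreamTiming:
    first_token_s: float  # start to first chunk
    tokens_per_s: float   # throughput after the first chunk
    total_s: float        # start to last chunk


def measure(start: float, arrivals: list[float]) -> StreamTiming:
    """Compute latency metrics from a start time and per-chunk arrival times."""
    if not arrivals:
        raise ValueError("no chunks received")
    decode_window = arrivals[-1] - arrivals[0]
    # Exclude the first chunk so first-token latency is not counted twice.
    tps = (len(arrivals) - 1) / decode_window if decode_window > 0 else float("inf")
    return StreamTiming(arrivals[0] - start, tps, arrivals[-1] - start)


def timed_stream(chunks: Iterable[str]) -> tuple[list[str], StreamTiming]:
    """Consume a stream (e.g., an SDK's streaming iterator) and time it."""
    start = time.perf_counter()
    received, arrivals = [], []
    for chunk in chunks:
        arrivals.append(time.perf_counter())
        received.append(chunk)
    return received, measure(start, arrivals)
```

Separating first-token latency from throughput matters because interactive applications feel the former, while long generations are dominated by the latter.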

Industry-Specific Technical Applications

Financial Services and Quantitative Analysis

DeepSeek

Excels at quantitative financial modeling with 82% accuracy on complex financial calculations

Strong performance in algorithmic trading strategy formulation

Limited capabilities for regulatory compliance analysis

Effective for financial document analysis with 76% extraction accuracy

ChatGPT

Balanced performance across financial analysis tasks with 79% accuracy on financial calculations

Strong capabilities in financial report generation and summarization

Effective regulatory compliance analysis with 82% accuracy

Moderate performance in algorithmic trading strategy development

Claude

Exceptional performance in regulatory compliance analysis with 88% accuracy

Strong financial document analysis with 84% extraction accuracy

Moderate capabilities in quantitative financial modeling

Effective financial risk assessment with nuanced uncertainty representation

Gemini

Strong interpretation of financial charts and data visualizations (85% accuracy)

Effective multimodal financial document analysis

Balanced performance in quantitative modeling

Advanced capabilities in market trend analysis leveraging multimodal data

Healthcare and Biomedical Applications

DeepSeek

Strong biomedical literature analysis capabilities

Limited clinical documentation support

Effective for research publication support

Moderate performance in medical terminology precision

ChatGPT

Balanced clinical and research capabilities

Strong performance in patient education content generation

Effective medical literature summarization

Moderate performance in complex diagnostic reasoning

Claude

Exceptional medical documentation support with high precision

Strong biological reasoning capabilities

Effective clinical guideline interpretation

Advanced uncertainty representation for clinical scenarios

Gemini

Strong multimodal medical imaging analysis support

Effective integration with structured healthcare data

Advanced capabilities in medical literature research

Balanced performance across clinical and research domains

Security and Compliance Considerations

Data Processing & Privacy Architecture

DeepSeek

Data processing primarily in Chinese data centers

Optional end-to-end encryption for enterprise deployments

Standard TLS for API communications

Data retention policies aligned with Chinese regulatory requirements

ChatGPT

Distributed data processing across multiple regions

Enterprise data processing controls with regional constraints

Advanced encryption options for sensitive data processing

Compliance with GDPR, CCPA, and other major privacy frameworks

Claude

Data processing in US and EU regions

No persistent storage of conversation data by default

Zero-storage processing options for sensitive applications

Strong alignment with healthcare compliance frameworks, including HIPAA

Gemini

Integration with Google Cloud's security infrastructure

Regional data sovereignty options

Advanced encryption and access controls

Comprehensive compliance certifications, including SOC 2 and ISO 27001

Security Vulnerability Analysis

DeepSeek

Moderate vulnerability to prompt injection attacks

Limited defenses against model inversion attempts

Standard rate limiting and authentication controls

Emerging privacy-preserving inference options

ChatGPT

Advanced defenses against prompt injection

Robust content filtering systems

Comprehensive rate limiting and abuse prevention

Moderate vulnerability to certain types of adversarial attacks

Claude

Exceptional resistance to prompt injection attacks

Strong privacy-preserving design principles

Advanced content safety systems

Moderate vulnerability to adversarial examples in multimodal contexts

Gemini

Integration with Google's advanced security infrastructure

Strong defenses against automated abuse

Comprehensive content filtering systems

Moderate vulnerability to sophisticated prompt engineering

Future Technical Developments

DeepSeek

Expanding multimodal capabilities with video and audio processing

Enhanced support for Asian languages beyond Chinese

Development of specialized vertical models for finance and healthcare

Continued focus on technical and scientific capabilities

ChatGPT

Further development of agent-based architectures

Enhanced tool usage capabilities through function calling

Advanced reasoning capabilities for specialized domains

Expanded multimodal capabilities, including video generation

Claude

Advanced uncertainty representation and epistemic capabilities

Enhanced constitutional reasoning frameworks

Specialized enterprise deployment options with customized guardrails

Expanded document processing and understanding capabilities

Gemini

Deeper integration with Google's product ecosystem

Advanced video understanding and generation capabilities

Specialized models for scientific research applications

Enhanced agent-based capabilities with tool integration

The technical comparison between DeepSeek, ChatGPT, Claude, and Gemini reveals a complex landscape where each system offers distinct technical advantages and limitations. The rapid pace of development in this field means that specific capabilities continue to evolve, but the architectural foundations and design philosophies of each system create persistent patterns of relative strength.

Organizations evaluating these systems should conduct domain-specific testing aligned with their particular use cases and technical requirements. The optimal choice depends not only on raw performance metrics but also on integration requirements, security considerations, and alignment with specific business workflows.
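A domain-specific test can start as small as a list of prompts with expected answers, run against each candidate. In this sketch the model callables are hypothetical wrappers around each vendor's API, and the substring grader is deliberately simple; real evaluations usually need task-specific grading, repeated runs, and enough cases for differences between models to be meaningful.

```python
from typing import Callable

Model = Callable[[str], str]          # hypothetical wrapper: prompt -> output text
Grader = Callable[[str, str], bool]   # (output, expected) -> pass/fail


def contains_answer(output: str, expected: str) -> bool:
    """Simple grader: case-insensitive substring match."""
    return expected.strip().lower() in output.lower()


def evaluate(models: dict[str, Model], cases: list[tuple[str, str]],
             grader: Grader = contains_answer) -> dict[str, float]:
    """Return each model's pass rate over the (prompt, expected) cases."""
    if not cases:
        raise ValueError("need at least one test case")
    return {
        name: sum(grader(model(prompt), expected) for prompt, expected in cases) / len(cases)
        for name, model in models.items()
    }
```

Keeping the grader as a parameter lets the same harness switch from substring matching to exact-match, numeric tolerance, or human review as the use case demands.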

As these technologies continue to advance, their impact on technical workflows, knowledge work, and organizational operations will only increase. Understanding the nuanced differences between these systems provides a foundation for strategic technology decisions that leverage the unique capabilities of each platform while mitigating their specific limitations.

All © Copyright reserved by Accessible-Learning Hub
