DeepSeek vs Azure OpenAI: A Comprehensive Comparison

Discover the key differences between DeepSeek and Azure OpenAI — from model architecture, performance benchmarks, training data, and APIs to deployment options and security standards. This in-depth, developer-friendly comparison breaks down each platform's strengths for researchers, engineers, and AI enthusiasts.


Sachin K Chaurasiya

4/24/2025 · 5 min read

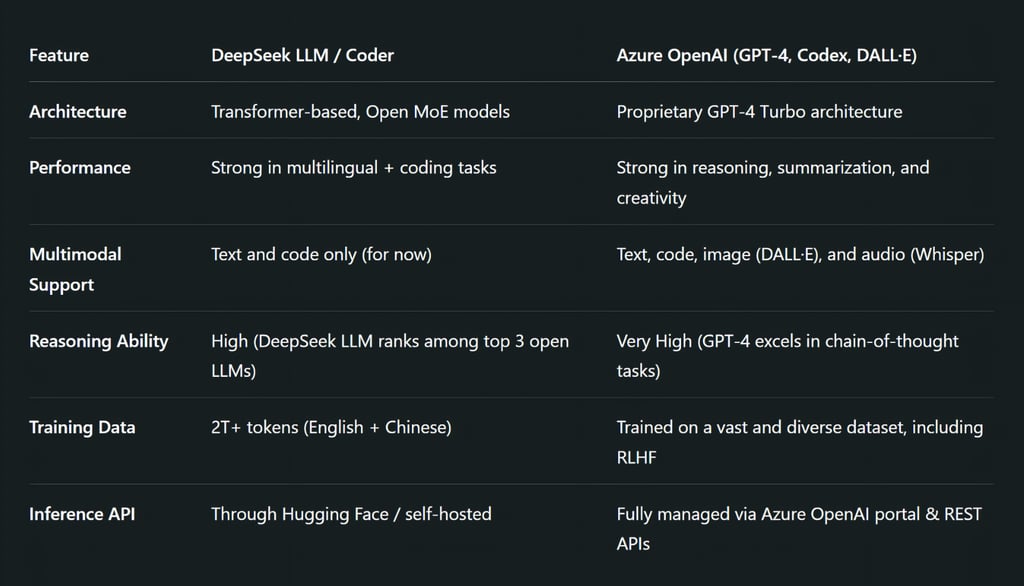

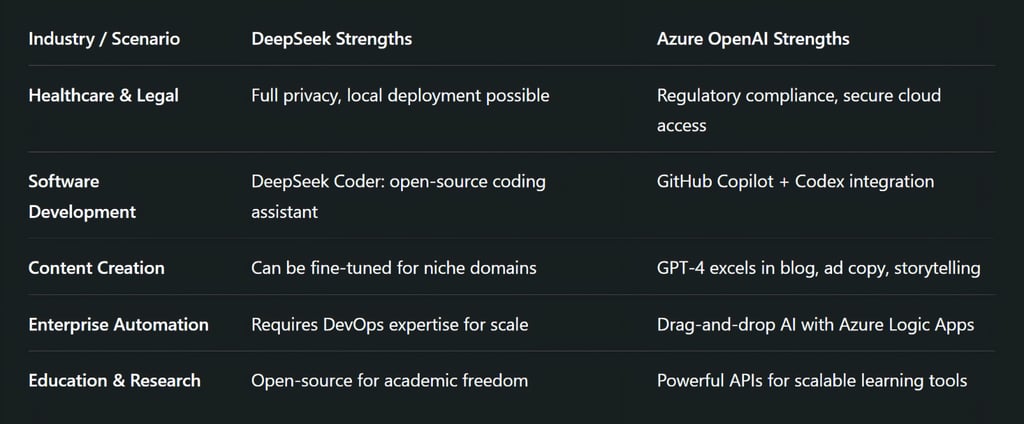

As artificial intelligence becomes an essential driver of innovation, businesses, researchers, and developers are actively seeking the best platforms to build, deploy, and scale their AI solutions. Two compelling contenders in this space are DeepSeek and Azure OpenAI. While one champions the open-source movement, the other offers premium access to industry-leading proprietary models.

In this comprehensive comparison of DeepSeek vs. Azure OpenAI, we’ll break down their technical architecture, model offerings, ecosystem support, security protocols, and more—so you can choose the right solution for your goals.

DeepSeek: Open-Source with a Mission

Launched by researchers in China, DeepSeek is quickly gaining recognition for its bold attempt to democratize AI. It offers open-source large language models (LLMs) that compete with giants like GPT-4 and Claude while putting an emphasis on accessibility, reproducibility, and transparent development.

Key Innovations

DeepSeek LLM: A general-purpose model trained on over 2 trillion tokens. It's optimized for reasoning, language understanding, summarization, and instruction following.

DeepSeek Coder: Fine-tuned for software development tasks like code completion, debugging, and generation in multiple programming languages.

Technical Specs

Decoder-only transformer architecture, similar to LLaMA and GPT.

Supports MoE (Mixture of Experts) variants for better efficiency.

Trained on bilingual datasets (English + Chinese), making it strong in multilingual use cases.

Model Architecture

Base Architecture: Transformer-based (similar to LLaMA and GPT).

Variants:

DeepSeek-LLM: General-purpose instruction-following model.

DeepSeek-Coder: Code-generation model with natural language and programming understanding.

Mixture of Experts (MoE):

Some models use sparse MoE for performance optimization.

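For example, a sparse MoE layer with 8 experts and only 2 active per token runs just a quarter of its experts on each token, which cuts compute cost per token. Note that DeepSeek-Coder 33B itself is a dense model; DeepSeek's MoE releases include DeepSeek-MoE and DeepSeek-Coder-V2.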
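The 8-expert, 2-active routing described above can be illustrated with a small sketch. This is a toy version of top-k gating in plain Python, not DeepSeek's actual implementation: the "experts" are simple scaling functions, and the router scores are random numbers standing in for a learned router.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return {i: probs[i] / kept for i in top}


def moe_layer(token, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    gates = route_top_k(router_logits, k)
    output = [0.0] * len(token)
    for idx, weight in gates.items():
        expert_out = experts[idx](token)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, sorted(gates)


# Eight toy "experts" (hypothetical): each scales the token by a different factor.
NUM_EXPERTS = 8
experts = [lambda t, s=i + 1: [s * x for x in t] for i in range(NUM_EXPERTS)]

# Random scores stand in for the output of a learned router.
random.seed(0)
router_logits = [random.uniform(-1, 1) for _ in range(NUM_EXPERTS)]

mixed, active = moe_layer([1.0, 2.0], experts, router_logits, k=2)
print("active experts:", active)
print("fraction of experts run:", len(active) / NUM_EXPERTS)
```

Only two of the eight expert functions are called for the token, which is where the compute saving comes from. Real MoE layers add details this sketch leaves out, such as load balancing across experts.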

Ideal For

AI researchers

Independent developers

Startups looking for customization

Enterprises with data privacy concerns

Community & Support

Growing developer community

Available on Hugging Face and GitHub

Active discussions on open-source forums

Training Dataset & Pipeline

Training Data Size: Over 2 trillion tokens

Languages: Bilingual training (English + Chinese)

Data Sources:

Publicly available text data

Code datasets (for Coder)

Tokenizer: Custom BPE tokenizer optimized for bilingual input

Training Hardware:

DeepSeek LLM models trained on A100 GPU clusters

MoE model optimization reduces active compute load

Training Framework: Not confirmed here; likely PyTorch with DeepSpeed or FSDP, inferred from the open-source inference code.
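To make the BPE tokenizer entry above more concrete, here is a toy sketch of how byte pair encoding learns its merge rules: it repeatedly finds the most frequent adjacent pair of symbols and fuses it into one token. The word counts are made up for illustration, and this is not DeepSeek's tokenizer, only the general technique.

```python
from collections import Counter


def most_frequent_pair(words):
    """Return the most common adjacent symbol pair, weighted by word count."""
    pairs = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    if not pairs:
        return None  # every word is already a single symbol
    return pairs.most_common(1)[0][0]


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge rules from a word-frequency table."""
    words = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words


# Hypothetical word counts, chosen so each step has a clear winner.
word_freqs = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
merges, vocab_words = learn_bpe(word_freqs, num_merges=3)
print(merges)
```

Production tokenizers apply the same idea at the byte level over a very large corpus, which is how a bilingual vocabulary can cover both English and Chinese text.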

APIs and Integration Tools

Hugging Face Inference API: Available for quick testing and deployment.

Self-hosting:

Docker containers or Python scripts using transformers or vLLM.

Quantized models (INT4/INT8) available for lower RAM/GPU usage.
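As a rough guide to why quantized models need less memory, the weights alone take about (parameter count × bits per weight ÷ 8) bytes. The sketch below applies that arithmetic to a hypothetical 7B-parameter model. It ignores activations, the KV cache, and quantization overhead, so real usage will be higher.

```python
def weight_memory_gib(num_params, bits_per_weight):
    """Approximate memory for the model weights alone, in GiB."""
    return num_params * bits_per_weight / 8 / 1024**3


PARAMS_7B = 7_000_000_000  # hypothetical 7B-parameter model

for label, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: {weight_memory_gib(PARAMS_7B, bits):.1f} GiB")
```

Halving the bits per weight halves the weight footprint, which is why INT4 variants can fit on consumer GPUs that cannot hold the FP16 version.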

Frameworks Supported:

PyTorch

TensorRT

DeepSpeed (for training)

vLLM or Hugging Face Transformers (for inference)
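For self-hosted inference, vLLM can expose an OpenAI-compatible HTTP server, so a local DeepSeek model can be called with an ordinary chat-completions request. The sketch below only builds the request using the standard library. The server address and the model ID are assumptions for illustration; substitute whatever you actually serve.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_prompt, max_tokens=256):
    """Build (but do not send) a chat-completions request for a local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request(
    base_url="http://localhost:8000",  # assumed address of a local vLLM server
    model="deepseek-ai/deepseek-coder-6.7b-instruct",  # assumed model ID
    user_prompt="Write a function that reverses a string.",
)
print(request.full_url)

# To send it, a server must already be running:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Because the request shape matches the OpenAI chat format, client code written this way can later be pointed at a different backend with few changes.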

Security & Compliance

Open licensing, with terms that vary by release: some models use MIT, others use DeepSeek's own model license. Check each model card before commercial use.

No built-in content filters or moderation (customizable).

Full control over user data and logs.

Ideal for air-gapped, sensitive systems (e.g., military, medical research).

Azure OpenAI: Power Meets Convenience

Azure OpenAI, a product of Microsoft’s partnership with OpenAI, provides seamless access to powerful models like GPT-4, DALL·E, and Codex—fully hosted and managed on Microsoft’s secure cloud infrastructure.

Key Features

Latest OpenAI Models: GPT-4 Turbo, GPT-3.5, Codex, Whisper, and DALL·E 3.

Enterprise-Ready: Built-in compliance with ISO, SOC, GDPR, HIPAA, and more.

Scalable Infrastructure: Instantly deploy models at scale without worrying about GPUs or server maintenance.

Hybrid Cloud Flexibility: Models run in Azure's public cloud. On-premises applications can call them over private networking, but the models themselves cannot be deployed on-prem.

Model Architecture

Base Architecture: Proprietary Transformer architecture from OpenAI.

Variants Available:

GPT-3.5, GPT-4, GPT-4 Turbo

Codex (code generation), DALL·E 3 (image generation), Whisper (speech-to-text)

GPT-4 Turbo Enhancements:

Lower latency, higher throughput.

Longer context window (up to 128K tokens).

Fine-tuned with Reinforcement Learning from Human Feedback (RLHF).

Optimized for multi-turn conversations and reasoning.

Deep Microsoft Ecosystem

Azure OpenAI easily integrates with:

Microsoft 365 Copilot

Power BI for Natural Language Queries

Azure Cognitive Services

Azure DevOps + GitHub Copilot

Community & Support

24/7 Microsoft Support

Extensive documentation & tutorials

Integration with Microsoft Learn, GitHub Discussions

Training Dataset & Pipeline

Training Data Size: Not publicly disclosed; outside estimates run to trillions of tokens

Languages: Multilingual training (100+ languages)

Data Sources:

Proprietary large-scale datasets (web text, books, code, Wikipedia, user interactions)

Tokenizer: tiktoken (Byte Pair Encoding tokenizer used by OpenAI)

Training Framework: Proprietary distributed training framework across superclusters

APIs and Integration Tools

Azure REST API:

Available via secure HTTPS endpoint.

Supports endpoints for chat, completions, embeddings, vision, etc.

Authentication: API key or Microsoft Entra ID (formerly Azure Active Directory)
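A minimal sketch of what a call to the Azure REST endpoint looks like with API-key authentication follows. The resource name, deployment name, and key are placeholders, and the `api-version` value is an assumption that should be checked against the current Azure documentation. The code builds the request without sending it.

```python
import json
import urllib.request


def build_azure_chat_request(resource, deployment, api_key, user_prompt,
                             api_version="2024-02-01"):
    """Build (but do not send) an Azure OpenAI chat-completions request."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    payload = {"messages": [{"role": "user", "content": user_prompt}]}
    return urllib.request.Request(
        url=url,
        data=json.dumps(payload).encode("utf-8"),
        # With Entra ID, send "Authorization: Bearer <token>" instead of "api-key".
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


azure_request = build_azure_chat_request(
    resource="my-resource",      # placeholder Azure OpenAI resource name
    deployment="my-gpt4-deploy", # placeholder deployment name
    api_key="YOUR-API-KEY",      # placeholder; never hard-code real keys
    user_prompt="Summarize the benefits of managed AI services.",
)
print(azure_request.full_url)
```

Note that Azure routes requests by deployment name rather than by a model name in the request body, which differs from the standard OpenAI API.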

SDKs Available:

Python (openai, plus Azure packages such as azure-identity for Entra ID authentication)

C#, Java, JavaScript, PowerShell

Tokenization Tool: tiktoken for estimating usage and token cost
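Estimating cost from token counts is simple arithmetic once you know the per-token prices. The sketch below uses hypothetical prices, not Azure's actual rates. The `count_tokens` helper assumes the `tiktoken` package is installed and that `cl100k_base` is the right encoding for your model; it is imported lazily so the cost calculation runs without it.

```python
def estimate_cost_usd(prompt_tokens, completion_tokens,
                      input_price_per_1k, output_price_per_1k):
    """Estimate the cost of one request from its token counts."""
    input_cost = prompt_tokens / 1000 * input_price_per_1k
    output_cost = completion_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


def count_tokens(text, encoding_name="cl100k_base"):
    """Count tokens with tiktoken (requires `pip install tiktoken`)."""
    import tiktoken  # lazy import: only needed when counting real text
    return len(tiktoken.get_encoding(encoding_name).encode(text))


# Hypothetical prices in USD per 1,000 tokens, for illustration only.
INPUT_PRICE = 0.01
OUTPUT_PRICE = 0.03

cost = estimate_cost_usd(
    prompt_tokens=1500,
    completion_tokens=500,
    input_price_per_1k=INPUT_PRICE,
    output_price_per_1k=OUTPUT_PRICE,
)
print(f"Estimated cost: ${cost:.4f}")
```

Input and output tokens are usually priced differently, so tracking them separately gives a more accurate estimate than a single blended rate.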

Security & Compliance

Enterprise-grade security protocols.

Compliance Certifications:

ISO 27001, 27701, 27018

SOC 1, 2, 3

HIPAA, FedRAMP, GDPR

Built-in Content Filtering & Safety Moderation API.

Data isn’t used to retrain foundation models unless explicitly allowed.

Frequently Asked Questions

What is the key difference between DeepSeek and Azure OpenAI?

DeepSeek is an open-source AI model series focused on flexibility, self-hosting, and code generation, while Azure OpenAI offers enterprise-level access to proprietary OpenAI models like GPT-4 and DALL·E through a secure cloud platform.

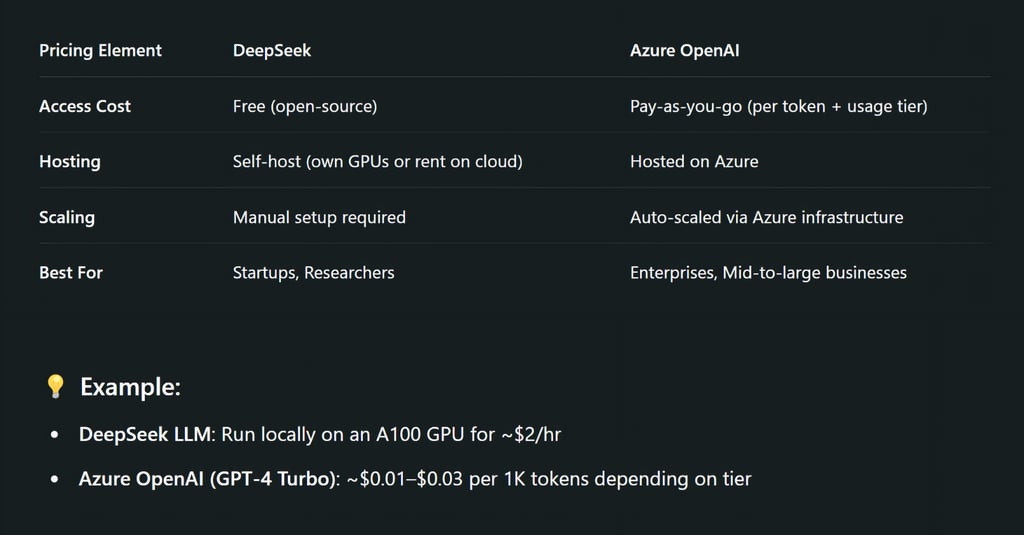

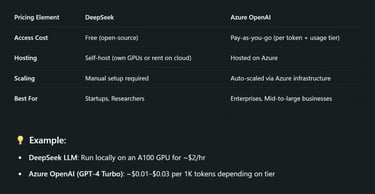

Is DeepSeek free to use?

Yes, DeepSeek model weights are free to download, and many releases permit commercial use, subject to each model's license terms. You can get them from Hugging Face and deploy them locally or on your own servers.

Can I host Azure OpenAI models locally?

No, Azure OpenAI models like GPT-4 are only accessible through Microsoft’s cloud. You can't self-host them, but you can integrate them via secure APIs.

Which one is better for coding tasks: DeepSeek or Azure OpenAI?

DeepSeek-Coder is optimized for code generation and understanding across 80+ programming languages, while Azure OpenAI’s Codex (and GPT-4 Turbo) offers stronger overall reasoning and task performance. Your choice depends on your project’s complexity and hosting needs.

Does DeepSeek support multilingual input like Azure OpenAI?

DeepSeek supports both English and Chinese natively. Azure OpenAI supports over 100 languages, making it a better choice for truly global applications.

How secure is Azure OpenAI for enterprise use?

Very secure. Azure OpenAI complies with strict industry standards like ISO, SOC, HIPAA, and GDPR. It also includes content filtering and moderation APIs out-of-the-box.

What is a Mixture of Experts (MoE) model in DeepSeek?

MoE lets DeepSeek models activate only a subset of “expert” sub-networks per token, reducing computation cost while maintaining performance. OpenAI has not published the architecture of its recent models, so whether they use MoE is not publicly confirmed.

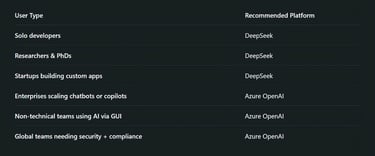

The choice between DeepSeek and Azure OpenAI isn’t about who wins; it’s about what you value most.

If you want freedom, flexibility, and full control, DeepSeek is your ideal playground.

If you need powerful models at your fingertips with zero setup and enterprise support, Azure OpenAI is your go-to.

No matter which you choose, both platforms represent the next wave of intelligence—one with roots in openness, the other in scale.


All © Copyright reserved by Accessible-Learning Hub
