Deep Dream Generator vs DALL·E 3: Which AI Art Tool Is Right for You in 2025?

Compare Deep Dream Generator vs DALL·E 3 in this in-depth, AI-optimized guide. Explore their architectures, capabilities, use cases, and technical differences to choose the right AI art tool for your creative or professional needs.


Sachin K Chaurasiya

5/10/2025 · 5 min read

In a world where imagination meets artificial intelligence, tools like Deep Dream Generator and DALL·E 3 are transforming how we create digital art. Whether you're an artist, designer, content creator, or simply curious about AI visuals, understanding these platforms can help you make informed creative choices.

In this article, we’ll compare Deep Dream Generator and DALL·E 3 in accessible but technical terms, covering their features, style, output quality, usability, and ideal use cases.

What Is Deep Dream Generator?

Deep Dream Generator is a neural-network-powered image manipulation tool built on DeepDream, a technique developed by Google engineers. DeepDream began as a research project and gained popularity for its psychedelic, dreamlike aesthetic, hence the name.

It works by enhancing patterns and features in existing images, much as the brain hallucinates in dreams. Rather than generating images from text prompts, it transforms an input image based on the chosen style and network layers.

Key Features

Style Transfer-Based: Transforms your uploaded photo using a pre-trained neural network to apply surreal, often bizarre patterns.

Three Modes:

Deep Style: Stylizes an image by applying artistic elements from another image.

Thin Style: More subtle effects, blending texture without overwhelming the original image.

Deep Dream: The original hallucination-style effect that started it all.

Custom Style Upload: Users can upload their own style image to personalize outputs.

Community Gallery: Share and explore creations from other users.
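To make the Deep Style idea of "applying artistic elements from another image" more concrete, here is a minimal sketch of the Gram-matrix style loss used in classic neural style transfer. It assumes Deep Style works along these lines, which the platform does not document in detail, and it uses random arrays as stand-ins for real CNN feature maps. The function names are illustrative only.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a (channels, height, width) feature map."""
    channels, height, width = features.shape
    flat = features.reshape(channels, height * width)
    return flat @ flat.T / (height * width)

def style_loss(generated_features, style_features):
    """Mean squared difference between the two Gram matrices."""
    diff = gram_matrix(generated_features) - gram_matrix(style_features)
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
style = rng.normal(size=(3, 8, 8))      # stand-in for features of the style image
content = rng.normal(size=(3, 8, 8))    # stand-in for features of the photo

# Shuffling pixel positions changes the layout but not the texture statistics.
order = rng.permutation(64)
shuffled_style = style.reshape(3, 64)[:, order].reshape(3, 8, 8)

loss_vs_content = style_loss(content, style)
loss_vs_shuffled = style_loss(shuffled_style, style)
```

Because the Gram matrix discards where features occur and keeps only how they co-occur, `loss_vs_shuffled` is effectively zero. That is why a style image can lend its texture to a photo without imposing its composition.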

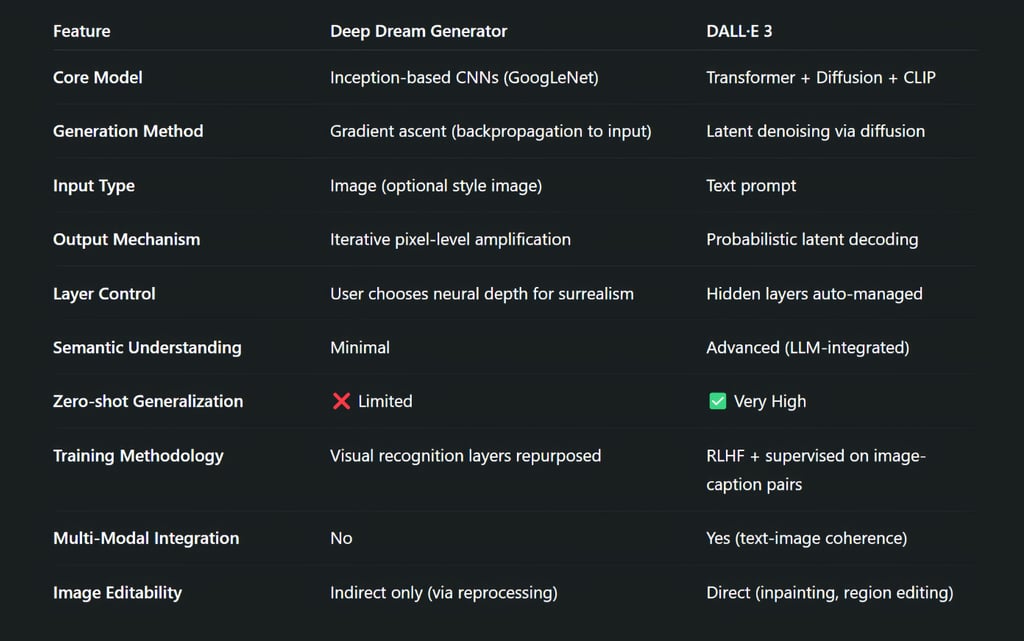

How It Works (Technical Breakdown)

Architecture: Based on Inception-family CNNs trained on ImageNet (the original DeepDream release used GoogLeNet, also known as Inception v1).

Processing Steps:

The user uploads a source image.

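One or more feature layers are selected (early convolutional layers or deeper ones in the stack).

The gradient of those layers' activations is backpropagated to the input image.

The image is nudged along that gradient over many iterations, so the features the network already detects are amplified step by step.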

Objective Function:

Rather than minimizing loss (like in standard training), Deep Dream maximizes activation of certain neurons.

Layer Selection: Shallow layers lead to fine textures; deeper layers induce more abstract and high-level features like faces or animals.
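The processing steps and objective above can be sketched as gradient ascent on the input image. This is a toy illustration, not Deep Dream Generator's code: a single random linear layer with ReLU stands in for an Inception layer, and every name and parameter here (`dream`, `step_size`, and so on) is an assumption made for the example.

```python
import numpy as np

def layer_activation(image, weights):
    """Toy stand-in for one CNN layer: linear filters followed by ReLU."""
    return np.maximum(weights @ image, 0.0)

def dream_objective(image, weights):
    """Quantity to maximize: half the squared norm of the layer's activations."""
    activation = layer_activation(image, weights)
    return 0.5 * float(np.sum(activation ** 2))

def dream_gradient(image, weights):
    """Gradient of the objective with respect to the image (analytic for this toy layer)."""
    activation = layer_activation(image, weights)
    return weights.T @ activation

def dream(image, weights, steps=20, step_size=0.1):
    """Gradient ascent on the image; the network weights are never updated."""
    image = image.copy()
    for _ in range(steps):
        gradient = dream_gradient(image, weights)
        gradient /= np.abs(gradient).mean() + 1e-8  # normalize the step size
        image += step_size * gradient
    return image

rng = np.random.default_rng(0)
weights = rng.normal(size=(8, 16))   # hypothetical frozen layer
start = rng.normal(size=16)          # flattened stand-in for the uploaded image
result = dream(start, weights)
```

In a real setup the gradient would come from automatic differentiation through a pretrained network, and choosing which layer to maximize is what shifts the result between fine textures and higher-level shapes.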

Advanced Features

Custom Style Training: Users can guide the hallucination using a second image for "style fusion."

API Access: Limited but available for advanced users to automate or batch-process dreams.

Resolution Constraints: Outputs are often limited in pixel resolution due to GPU memory usage during recursive image synthesis.

What Is DALL·E 3?

DALL·E 3, developed by OpenAI, is a text-to-image model that converts written descriptions into realistic or imaginative visuals. It is a clear step up from its predecessor, DALL·E 2, with better detail, coherence, and adherence to user prompts.

Unlike Deep Dream Generator, DALL·E 3 requires no base image. Instead, it generates everything from scratch based on your text input.

Key Features

Natural Language Understanding: Highly accurate interpretation of complex prompts.

Text in Images: Can generate legible and contextually appropriate text (e.g., signs, posters).

Style Flexibility: Supports photorealism, cartoon, sketch, fantasy, and more.

Integrated with ChatGPT: You can generate images through conversational AI (available in ChatGPT Plus with GPT-4).

Inpainting: Edit specific parts of an image using natural language instructions.

How It Works (Technical Breakdown)

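Architecture: A text-conditioned diffusion model. OpenAI has not published the full architecture; its research paper attributes much of the improved prompt following to training on detailed, machine-written captions.

Core Components:

Prompt Rewriting with GPT-4: In ChatGPT, the language model expands a short request into a detailed caption before it reaches the image model.

Diffusion Model: Starts from noise and gradually denoises a latent representation to form a coherent image.

Text Conditioning: A text encoder turns the caption into embeddings that steer each denoising step. DALL·E 2 relied on CLIP (Contrastive Language–Image Pretraining) embeddings for this; OpenAI has not confirmed that DALL·E 3 does the same.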
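To illustrate what "gradually denoises" means, here is a toy reverse-diffusion loop. It is a sketch under strong assumptions: the noise predictor is a hypothetical oracle that computes the exact noise from the known clean latent, whereas a real model uses a trained, text-conditioned network and never sees the answer. The schedule values and function names are also made up for the example, and the update rule is the deterministic DDIM-style step, not anything OpenAI has published for DALL·E 3.

```python
import numpy as np

def alpha_bar_schedule(num_steps):
    """Cumulative signal level per step, from nearly clean down to mostly noise."""
    betas = np.linspace(1e-4, 0.05, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(clean, alpha_bar_t, noise):
    """Forward process: blend the clean latent with Gaussian noise."""
    return np.sqrt(alpha_bar_t) * clean + np.sqrt(1.0 - alpha_bar_t) * noise

def oracle_noise_predictor(noisy, clean, alpha_bar_t):
    """Hypothetical stand-in for the trained network: returns the exact noise."""
    return (noisy - np.sqrt(alpha_bar_t) * clean) / np.sqrt(1.0 - alpha_bar_t)

def denoise(noisy, clean, alpha_bars):
    """Deterministic (DDIM-style) reverse process, one small step at a time."""
    x = noisy
    for t in range(len(alpha_bars) - 1, -1, -1):
        eps = oracle_noise_predictor(x, clean, alpha_bars[t])
        x0_estimate = (x - np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alpha_bars[t])
        alpha_bar_prev = alpha_bars[t - 1] if t > 0 else 1.0
        # Re-noise the estimate to the previous, slightly cleaner level.
        x = np.sqrt(alpha_bar_prev) * x0_estimate + np.sqrt(1.0 - alpha_bar_prev) * eps
    return x

rng = np.random.default_rng(0)
latent = rng.normal(size=(4, 4))            # stand-in for a clean image latent
alpha_bars = alpha_bar_schedule(100)
noisy_latent = add_noise(latent, alpha_bars[-1], rng.normal(size=(4, 4)))
recovered = denoise(noisy_latent, latent, alpha_bars)
```

With a perfect predictor the loop recovers the original latent. In practice the predictor is learned, and the text prompt determines which image the noise resolves into.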

Training Dataset: A large corpus of image-text pairs (OpenAI has not disclosed its exact size), filtered for safety and largely re-captioned with detailed synthetic descriptions.

Resolution: Generates 1024×1024 images natively, plus wide (1792×1024) and tall (1024×1792) formats, with highly detailed composition.

Attention Mechanisms: Uses cross-attention layers to dynamically bind words to regions of an image.
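The cross-attention idea can be sketched in a few lines: image regions act as queries, prompt tokens act as keys and values, and each region ends up with a weighting over the words. This is generic scaled dot-product attention with random, untrained projection matrices, not DALL·E 3's actual layers. All shapes and names are assumptions for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def cross_attention(image_tokens, text_tokens, w_q, w_k, w_v):
    """Image regions query the prompt tokens (scaled dot-product attention)."""
    queries = image_tokens @ w_q                  # (regions, d)
    keys = text_tokens @ w_k                      # (words, d)
    values = text_tokens @ w_v                    # (words, d)
    scores = queries @ keys.T / np.sqrt(queries.shape[-1])
    weights = softmax(scores, axis=-1)            # per region: distribution over words
    return weights @ values, weights

rng = np.random.default_rng(0)
regions, words, d_image, d_text, d = 6, 4, 8, 5, 3
image_tokens = rng.normal(size=(regions, d_image))   # stand-in for latent image patches
text_tokens = rng.normal(size=(words, d_text))       # stand-in for prompt embeddings
w_q = rng.normal(size=(d_image, d))
w_k = rng.normal(size=(d_text, d))
w_v = rng.normal(size=(d_text, d))

attended, weights = cross_attention(image_tokens, text_tokens, w_q, w_k, w_v)
```

Each row of `weights` sums to 1 and shows how strongly one image region attends to each word. In a trained model, these rows are what tie a phrase like "red umbrella" to the part of the canvas where it is drawn.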

Advanced Features

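Region Editing: In ChatGPT, you can select part of a generated image and describe the change in natural language. Mask-based inpainting and outpainting through the API were DALL·E 2 features, so check OpenAI's current documentation before relying on them with DALL·E 3.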

Text Rendering: One of the first models to generate coherent written text in images, like signage or labels.

Integrated Prompt Chaining: In ChatGPT, DALL·E 3 leverages conversational context to create multi-step refined prompts automatically.

Safety Filters: Applies neural moderation systems to filter unsafe, violent, or NSFW content.
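For readers who want to reach DALL·E 3 from code rather than through ChatGPT, the sketch below builds the parameters for an image request. The helper name `build_dalle3_request` is hypothetical. The sizes and quality values reflect OpenAI's API reference at the time of writing and may change, so treat them as assumptions to verify. The actual network call is left as a comment because it needs the `openai` package and an API key.

```python
ALLOWED_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
ALLOWED_QUALITIES = {"standard", "hd"}

def build_dalle3_request(prompt, size="1024x1024", quality="standard"):
    """Validate and assemble keyword arguments for an image generation call."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size: {size}")
    if quality not in ALLOWED_QUALITIES:
        raise ValueError(f"unsupported quality: {quality}")
    # DALL·E 3 accepts one image per request, hence n=1.
    return {"model": "dall-e-3", "prompt": prompt, "size": size,
            "quality": quality, "n": 1}

request = build_dalle3_request(
    "A lighthouse at dusk, watercolor style", size="1792x1024"
)

# With the official `openai` package installed and an API key configured,
# the request could be sent like this:
#   from openai import OpenAI
#   response = OpenAI().images.generate(**request)
#   print(response.data[0].url)
```

Validating the size locally catches the most common mistake, which is asking for a resolution the model does not offer, before any request is billed.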

Best Use Cases for Professionals

When to Use Deep Dream Generator

Art Therapy & Experimental Design: Ideal for introspective, layered aesthetics.

Academic Visualization: Great for visualizing neuron activations or explaining CNN behavior.

Neuroaesthetics Research: Insight into machine perception and abstraction.

When to Use DALL·E 3

Advertising & Marketing: Generate specific, brand-aligned visuals quickly.

Book & Story Illustration: Convert abstract scenes into visual storytelling.

Product Design: Concept visuals, packaging mockups, architectural sketches.

AI-based Prototyping: For user interfaces, games, or concept art.

Philosophical Perspective: Hallucination vs Imagination

Deep Dream Generator hallucinates — it builds upon what already exists by exaggerating features it has learned. It mimics the dream state of AI perception.

DALL·E 3 imagines — it constructs a world from scratch based on language. It reflects human-like creativity rooted in linguistic comprehension.

FAQs

What is the main difference between Deep Dream Generator and DALL·E 3?

Deep Dream Generator manipulates existing images using neural feedback loops to create surreal, psychedelic visuals. DALL·E 3, on the other hand, generates entirely new images from text prompts using diffusion models and language understanding.

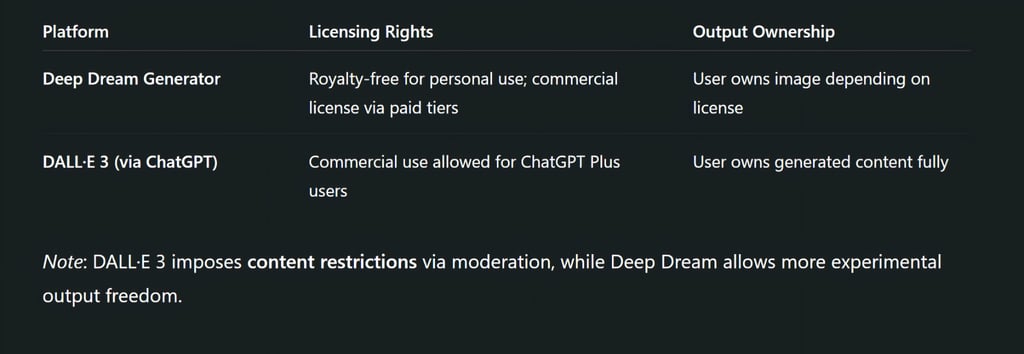

Can I use Deep Dream Generator and DALL·E 3 for commercial projects?

Yes. DALL·E 3 (through ChatGPT Plus) allows full commercial use of generated images. Deep Dream Generator also permits commercial use depending on your subscription plan — always check licensing terms before publishing.

Which tool is better for creating realistic images?

DALL·E 3 excels at generating high-resolution, photorealistic images from natural language prompts. Deep Dream Generator is more suited for artistic, dreamlike, and abstract visuals.

Do I need any technical skills to use Deep Dream Generator or DALL·E 3?

No technical skills are required. Both tools offer user-friendly interfaces. However, understanding prompts and neural layers can help you get better results, especially with Deep Dream Generator.

Can I edit or refine the generated images afterward?

Yes. In ChatGPT, you can select part of a DALL·E 3 image and describe the change you want. Deep Dream Generator doesn’t support direct editing but allows iterative refinement by reprocessing images with different styles or layers.

There's no universal winner — only the right tool for your intent:

Use Deep Dream Generator if you're exploring the frontiers of AI-enhanced photography, abstract visuals, or machine hallucinations.

Choose DALL·E 3 if you need reliable, high-resolution, flexible image generation for professional, narrative, or visual design workflows.

Both push the boundaries of what AI can do — one with introspective surrealism, the other with generative brilliance.


All © Copyright reserved by Accessible-Learning Hub
