DALL·E 3 vs Stable Diffusion 3 vs Runway ML: Which AI Tool Wins in 2025?

Compare DALL·E 3, Stable Diffusion 3, and Runway ML in this in-depth 2025 guide. Discover their strengths, differences, use cases, and which AI art tool suits your creative goals best.


Sachin K Chaurasiya

5/4/2025 · 6 min read

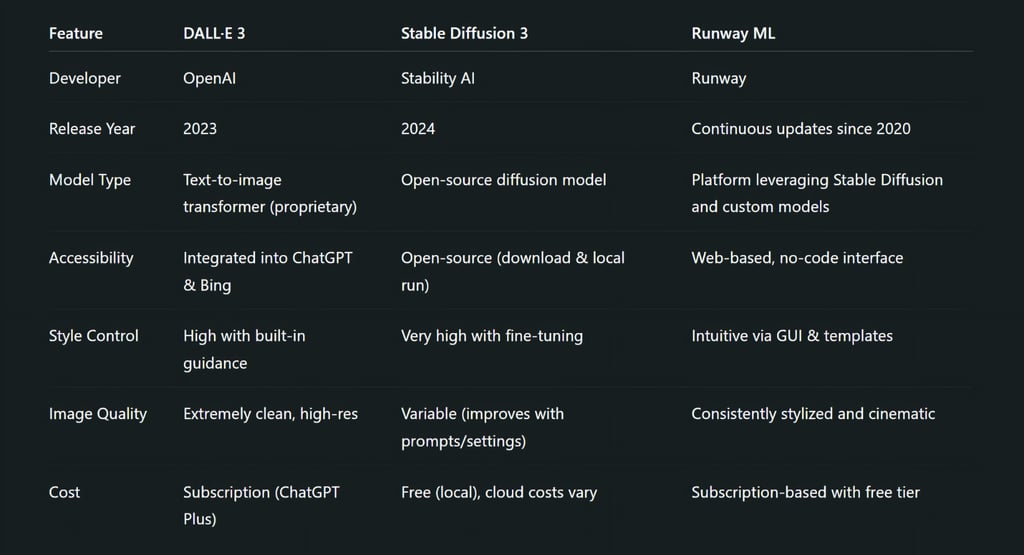

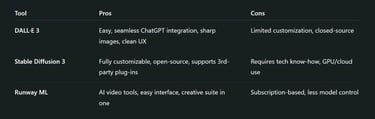

In the ever-evolving landscape of AI-generated art, three major players have captivated creators, designers, and marketers alike: DALL·E 3, Stable Diffusion 3, and Runway ML. Each brings something powerful and distinct to the table, yet choosing the right one often depends on your creative goals, technical expertise, and how hands-on you want to be.

In this article, we’ll explore these tools in depth, breaking down how they work, what makes each one special, and which might be the best fit for your creative process.

What’s New in 2025?

Let’s start with the buzz. All three platforms have rolled out significant updates that elevate their capabilities:

DALL·E 3: Now supports inpainting, prompt refinement via ChatGPT, and cleaner outputs filtered to reduce copyright and safety risks.

Stable Diffusion 3: Launched in 2024 on a new multimodal transformer architecture, and updated in 2025 for better prompt alignment and more responsibly filtered outputs.

Runway ML: Now offers video-to-video, real-time style transfer, and 3D asset generation, making it a full AI studio in your browser.

DALL·E 3: The Polished Visionary from OpenAI

DALL·E 3 is OpenAI’s flagship image-generation model, known for its tight integration with ChatGPT, especially for users on ChatGPT Plus. It has earned a reputation for remarkable prompt understanding, smooth renderings, and creative accuracy. Compared to its predecessors, DALL·E 3 feels more intuitive—like talking to an artist who just “gets” you.

Key Highlights

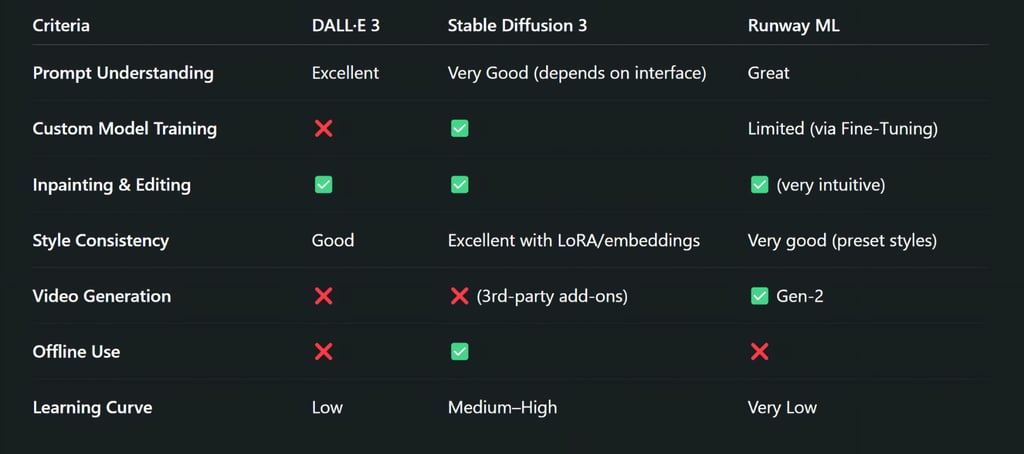

Prompt Interpretation: DALL·E 3 can comprehend complex prompts and generate relevant details, making it ideal for storytelling, marketing visuals, and concept art.

ChatGPT Integration: You can tweak or regenerate images through conversations, making the process feel fluid and natural.

Inpainting (Editing Images): As of early 2024, it also supports inpainting—editing parts of generated images—which boosts its versatility.

Technology Under the Hood

Model Type: Transformer-based diffusion model.

Training Data: Curated datasets with filtering to reduce copyrighted and inappropriate material.

API/Access: Available through ChatGPT, Microsoft Designer, Bing Image Creator, and OpenAI’s Images API (model name "dall-e-3").

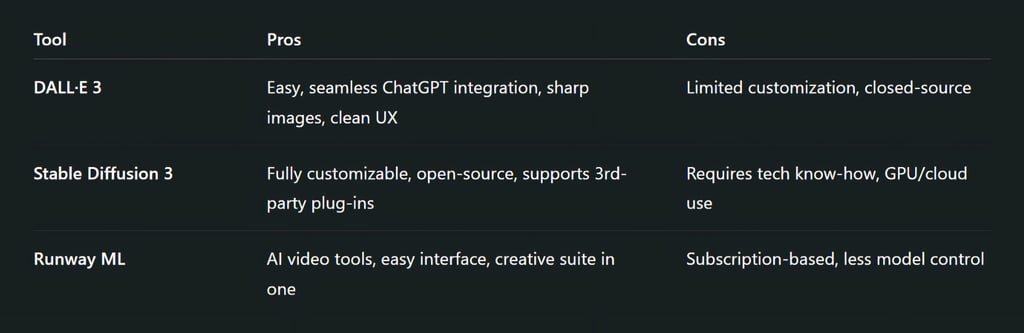

Strengths: Best-in-class at generating visually accurate and semantically aligned images. Handles long or complex prompts gracefully.

Limitations: Limited style transfer, less control over image size/output, closed-source.
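Developers can also reach DALL·E 3 programmatically through OpenAI’s Images API, and the size limitation shows up clearly there: the model accepts only three output sizes and one image per request. Below is a minimal sketch using the official openai Python package (v1 or later). It assumes an OPENAI_API_KEY environment variable, and the helper names build_dalle3_request and generate are our own, not part of the SDK.

```python
"""Minimal sketch of a DALL·E 3 request via the official `openai` Python SDK (v1+).

Assumes OPENAI_API_KEY is set. The network call only runs when the script is executed
directly with a key present, so the validation helper can be used offline.
"""
import os

# DALL·E 3 accepts only these output sizes (square, landscape, portrait).
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}


def build_dalle3_request(prompt: str, size: str = "1024x1024", quality: str = "standard") -> dict:
    """Validate settings and return keyword arguments for client.images.generate."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported size {size!r}; choose one of {sorted(DALLE3_SIZES)}")
    if quality not in {"standard", "hd"}:
        raise ValueError("quality must be 'standard' or 'hd'")
    # DALL·E 3 generates a single image per request, so n is fixed at 1.
    return {"model": "dall-e-3", "prompt": prompt, "size": size, "quality": quality, "n": 1}


def generate(prompt: str, size: str = "1024x1024") -> str:
    """Send the request and return the URL of the generated image."""
    from openai import OpenAI  # imported lazily so the helper above works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.images.generate(**build_dalle3_request(prompt, size))
    return response.data[0].url


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    print(generate("A watercolor lighthouse at dawn, wide cinematic framing", size="1792x1024"))
```

Validating locally before calling the API turns an unsupported size into an immediate, readable error instead of a failed paid request.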

Ideal For

Writers, marketers, and creatives who want to visualize ideas fast without technical setup.

Stable Diffusion 3: The Open-Source Powerhouse

Stable Diffusion 3 is the latest major release in Stability AI’s wildly popular Stable Diffusion series. Like earlier versions, its model weights are openly released, which means you can download it, modify it, and even fine-tune your own versions (subject to Stability AI’s license terms).

This model delivers higher image fidelity, better understanding of nuanced prompts, and reduced bias, setting it apart from previous versions.

Key Highlights

Customizability: Users can train models on specific styles or datasets, making it perfect for bespoke art generation.

Community & Ecosystem: A vast community supports it with plug-ins, UIs (like AUTOMATIC1111), and prompt-sharing platforms.

Fine-Grained Control: Offers full control over generation settings like sampling steps, CFG scale, negative prompts, and more.
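The CFG scale and negative prompt settings both come from classifier-free guidance. At each sampling step the model makes two predictions, one conditioned on your prompt and one unconditioned (most front-ends condition it on the negative prompt instead, when you supply one), and blends them as uncond + scale × (cond − uncond). The toy sketch below applies that formula to three-number stand-ins for real latent tensors; apply_cfg is a hypothetical helper name. For SD3 the predictions are flow velocities rather than noise, but the blending step is the same.

```python
"""Toy illustration of classifier-free guidance (CFG), the idea behind the "CFG scale" setting."""
from typing import List


def apply_cfg(uncond: List[float], cond: List[float], scale: float) -> List[float]:
    """Combine two model predictions element-wise: uncond + scale * (cond - uncond)."""
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Hypothetical 3-value predictions standing in for full latent tensors.
uncond_pred = [0.10, 0.20, 0.30]  # from the empty (or negative) prompt
cond_pred = [0.30, 0.10, 0.50]    # from the user's prompt

# Scale 1.0 gives back (up to float rounding) the conditional prediction.
print(apply_cfg(uncond_pred, cond_pred, 1.0))
# A higher scale pushes further in the prompt's direction and away from the negative prompt.
print(apply_cfg(uncond_pred, cond_pred, 7.0))
```

This is why very high CFG values tend to look over-saturated: the difference between the two predictions gets amplified well beyond what either prediction alone suggests.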

Technology Under the Hood

Model Type: Multimodal Diffusion Transformer (MMDiT) trained with a rectified-flow objective, replacing the U-Net backbone of earlier Stable Diffusion versions.

Training Data: Large web-scale image-text datasets (earlier Stable Diffusion versions used subsets of LAION-5B), with increased filtering for safety and quality.

API/Access: Run locally through front-ends like InvokeAI and AUTOMATIC1111, or in the cloud via Stability AI’s own DreamStudio and developer API.

Strengths: Highly modular, custom-trainable, supports ControlNet, LoRA, embeddings, and fine-tuning.

Limitations: Requires GPU power or cloud credits. Steeper learning curve.
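Running SD3 locally is where the GPU requirement bites. A minimal sketch with Hugging Face’s diffusers library follows. It assumes a CUDA GPU, the diffusers, transformers, and torch packages, and that you have accepted the license for the gated stabilityai/stable-diffusion-3-medium-diffusers repository on Hugging Face. GenerationSettings is a hypothetical convenience wrapper whose fields map onto the pipeline’s own keyword arguments; the default steps and CFG values follow the diffusers documentation example.

```python
"""Minimal sketch: generate one image with Stable Diffusion 3 Medium via diffusers.

Assumes a CUDA GPU, the diffusers/transformers/torch packages, and access to the
gated Hugging Face repository below (accept the license and log in first).
"""
from dataclasses import asdict, dataclass

MODEL_ID = "stabilityai/stable-diffusion-3-medium-diffusers"


@dataclass
class GenerationSettings:
    """Hypothetical wrapper; field names match StableDiffusion3Pipeline keyword arguments."""
    prompt: str
    negative_prompt: str = ""
    num_inference_steps: int = 28  # the "sampling steps" setting
    guidance_scale: float = 7.0    # the "CFG scale" setting
    height: int = 1024
    width: int = 1024


def generate(settings: GenerationSettings, out_path: str = "sd3_output.png") -> str:
    """Load the pipeline, render one image, save it, and return the file path."""
    # Heavy imports live here so the settings class can be used without a GPU stack.
    import torch
    from diffusers import StableDiffusion3Pipeline

    pipe = StableDiffusion3Pipeline.from_pretrained(MODEL_ID, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")
    image = pipe(**asdict(settings)).images[0]
    image.save(out_path)
    return out_path
```

Calling generate(GenerationSettings(prompt="a cozy reading nook, isometric illustration", negative_prompt="blurry, low quality")) would download the weights on first run and write sd3_output.png. Lowering num_inference_steps speeds up generation, usually at some cost in detail.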

Ideal For

Developers, artists, and hobbyists who want deep control and local ownership of their creations.

Runway ML: The Creative Director in Your Browser

Runway ML is a browser-based creative suite powered by AI, designed for video creators, designers, and multimedia storytellers. Runway co-developed the original Stable Diffusion, and its suite combines diffusion-based image tools with its own proprietary models, wrapped in a user-friendly interface with powerful add-ons like text-to-video, inpainting, and motion brush.

Key Highlights

No-Code, Pro-Level Tools: You can animate images, remove backgrounds, and even make videos from text.

AI Video Magic: Runway’s Gen-2 model and its successor, Gen-3 Alpha, let you create stunning AI-generated videos from text or images.

Cloud-Based Simplicity: No need to download models or use terminals—just sign in and start creating.

Technology Under the Hood

Model Type: Proprietary in-house models (such as Gen-2) alongside Stable Diffusion-based tools, all behind an intuitive UI.

Training Data: Proprietary and not fully disclosed; Runway markets its tools for commercial use.

API/Access: Fully cloud-based. Browser-accessible with real-time collaboration options.

Strengths: Multi-modal AI tools (text-to-video, image-to-video, inpainting, background removal). Runs on any device.

Limitations: Limited fine-tuning; monthly subscription can be pricey for heavy users.

Ideal For

Filmmakers, content creators, and designers looking for professional-grade AI tools without coding.

Which One Should You Use?

Use DALL·E 3 if:

You want fast, clean visuals with minimal fuss.

You're already using ChatGPT and love conversational AI.

You need professional images for blogs, covers, or branding.

Use Stable Diffusion 3 if:

You enjoy experimenting and tweaking your AI workflows.

You value open-source ethics and complete freedom.

You’re into training your own models or exploring niche styles.

Use Runway ML if:

You’re a content creator looking to animate, edit, or enhance visuals with zero coding.

You want AI videos and motion graphics.

You need a creative all-in-one suite that runs in the cloud.

Popularity Check: Which Tool Ranks Best?

According to search trends and community forums (like Reddit, Hugging Face, and Discord):

DALL·E 3 leads in general popularity and ease of access.

Stable Diffusion 3 dominates on GitHub and among AI art communities.

Runway ML is rapidly gaining ground in the video AI generation niche.

Keyword Trends (as of Q2 2025)

“DALL·E 3 vs Stable Diffusion 3” – 22k+ searches/month

“AI text-to-video tool 2025” – 30k+ searches/month

“Best AI art generator free” – 40k+ searches/month

FAQs

Which is better: DALL·E 3, Stable Diffusion 3, or Runway ML?

It depends on your needs. DALL·E 3 is best for simplicity and accurate prompts, Stable Diffusion 3 is ideal for customization and open-source control, and Runway ML shines for video generation and all-in-one creative workflows.

Is DALL·E 3 free to use?

DALL·E 3 is accessible through ChatGPT Plus ($20/month), Microsoft Designer, Bing Image Creator, and OpenAI’s pay-per-image API. Some of these options, such as Bing Image Creator, allow a limited number of free generations per day.

Can I use Stable Diffusion 3 without coding?

Yes! Platforms like DreamStudio, Mage, and InvokeAI offer no-code interfaces. However, for full customization and advanced workflows, some technical know-how helps.

Does Runway ML support video generation?

Absolutely. Runway ML offers text-to-video and video editing via Gen-2, making it a top choice for creators looking to animate or transform footage.

Which AI tool is best for commercial use?

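All three can be used commercially, but check each provider’s current terms. Stable Diffusion 3 and Runway ML spell out licensing conditions more explicitly (Stability AI’s license, for example, sets the conditions for commercial use of the SD3 weights). DALL·E 3 uses filtered datasets to reduce copyright risk, but that doesn’t guarantee its outputs are free of third-party claims.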

Can I train my own style or model on these platforms?

You can train or fine-tune models with Stable Diffusion 3 using techniques like LoRA and DreamBooth. DALL·E 3 and Runway ML don’t support personal model training directly.
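LoRA keeps fine-tuning lightweight by freezing the original weights and learning a small low-rank correction. For a weight matrix W of size d×k, it trains B (d×r) and A (r×k) with a small rank r, and the adapted weight is W + (alpha / r) · B·A. The pure-Python toy below shows only that arithmetic, with hypothetical helper names; real LoRA trainers typically apply it to the model’s attention layers using GPU tensors.

```python
"""Toy sketch of the LoRA (low-rank adaptation) update in pure Python."""
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    if len(x[0]) != len(y):
        raise ValueError("inner dimensions must match")
    return [[sum(x[i][p] * y[p][j] for p in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def apply_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * (B @ A), where r is the LoRA rank (columns of B)."""
    r = len(b[0])
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


# A frozen 3x3 base weight and a rank-1 adapter (only B and A would be trained and saved).
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
B = [[1.0], [2.0], [0.0]]   # d x r = 3 x 1
A = [[0.5, 0.0, 1.0]]       # r x k = 1 x 3
print(apply_lora(W, B, A, alpha=1.0))  # [[1.5, 0.0, 1.0], [1.0, 1.0, 2.0], [0.0, 0.0, 1.0]]
```

At realistic sizes the savings are large: for a 4096×4096 layer with rank 8, A and B together hold 2 × 4096 × 8 = 65,536 values, versus about 16.8 million in W itself. That is why LoRA files are small enough to share and swap freely.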

None of these AI tools is just a product; each is a creative companion tuned for different needs:

DALL·E 3 is best if you’re looking for an easy, reliable, and refined image generator for storytelling, branding, or prototyping.

Stable Diffusion 3 is your go-to for open-ended creation, ideal for digital artists and developers who want deep customization.

Runway ML is a dream if you’re into video, animation, and real-time AI creativity, especially as a content creator or designer.

The Future of AI Art: Where It’s All Going

By 2025, we’re not just using AI to generate images—we’re co-creating visual worlds, blending text, sound, video, and interactivity. The line between artist and algorithm is blurring, and platforms like DALL·E 3, Stable Diffusion 3, and Runway ML are at the center of this creative revolution.

Choose wisely, but also—don’t be afraid to explore them all. Each has its own flavor of magic.

All © Copyright reserved by Accessible-Learning Hub
