Complete Guide to Artbreeder, RunwayML & Midjourney: Tools, Tech & Use Cases (2025)

Discover how Artbreeder, RunwayML, and Midjourney are revolutionizing digital creativity. Explore their advanced AI models, technical features, and ideal use cases in art, video production, and design.


Sachin K Chaurasiya

4/30/2025 · 5 min read

In recent years, AI has had a remarkable impact on the creative industry, empowering artists, designers, filmmakers, and storytellers to create with unprecedented ease. Among the most talked-about platforms in this space are Artbreeder, RunwayML, and Midjourney. Each serves a distinct niche, with capabilities spanning generative design, multimedia production, and AI-assisted art. This article explores each tool's features, underlying technology and models, pros and cons, and performance considerations, and compares how they stack up against one another.

Artbreeder: Evolving Visuals with AI Genetics

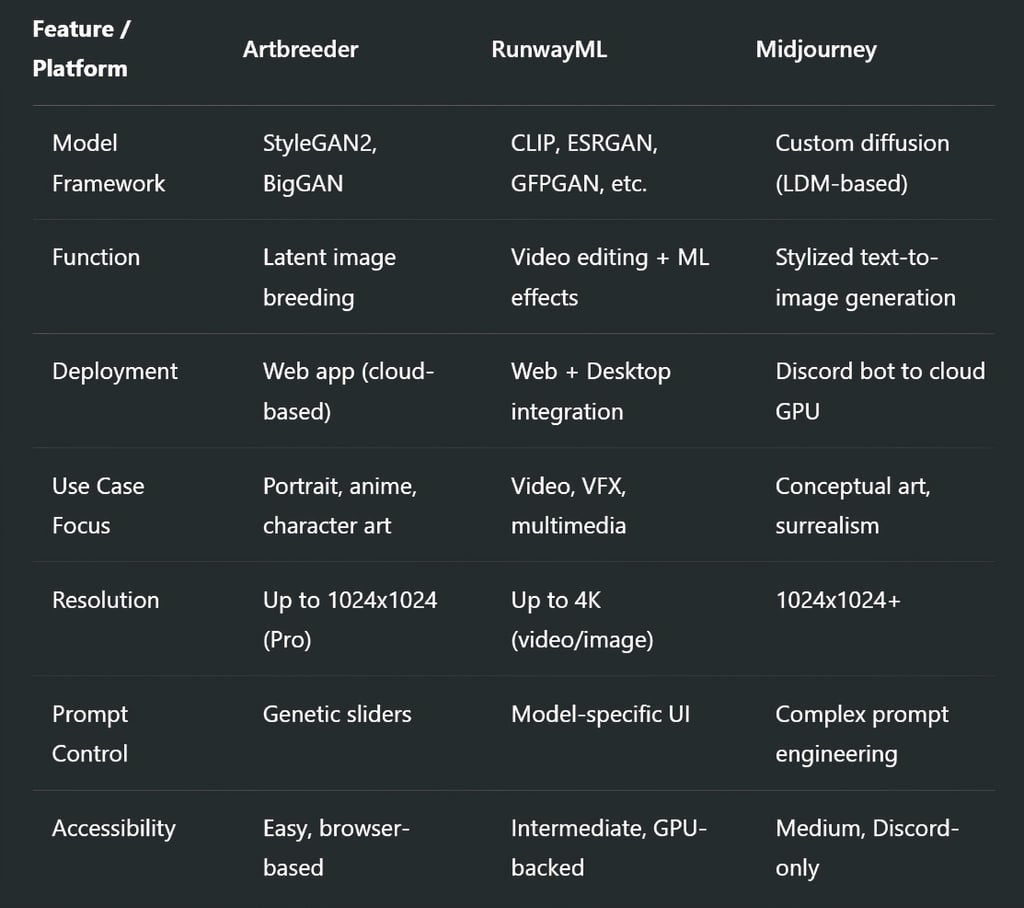

Artbreeder is a collaborative AI-based platform that allows users to generate and modify images through the concept of "genetic breeding." It leverages StyleGAN2 and BigGAN models to let users create visual art by mixing and blending features (referred to as "genes").

How It Works (Technical Overview)

Artbreeder works by manipulating latent space. Every image corresponds to a latent vector, the input that the StyleGAN2 generator turns into pixels, and Artbreeder lets users interpolate between these vectors and perform arithmetic on them. Each slider corresponds to a semantic direction in the latent space (such directions can be found, for example, with principal component analysis), giving users control over facial expressions, gender, age, hair, and artistic styles.
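To make "crossbreeding" and slider edits concrete, here is a minimal sketch of the two latent-space operations described above: linear interpolation between two parent vectors and adding a scaled direction vector. It assumes 512-dimensional latents (typical for StyleGAN2) and uses plain Python lists; the `age_direction` is a hypothetical random unit vector standing in for a learned direction, and no generator is included. Artbreeder does not publish its code, so this illustrates the technique, not its implementation.

```python
import math
import random

LATENT_DIM = 512  # StyleGAN2 generators typically take 512-dimensional latents


def random_latent(seed: int) -> list[float]:
    """Sample a latent vector from a standard normal distribution (z-space)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]


def crossbreed(parent_a: list[float], parent_b: list[float], mix: float) -> list[float]:
    """Linear interpolation: mix=0 returns parent A, mix=1 returns parent B."""
    return [(1.0 - mix) * a + mix * b for a, b in zip(parent_a, parent_b)]


def normalize(v: list[float]) -> list[float]:
    """Scale a direction to unit length so slider strengths are comparable."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def apply_gene(latent: list[float], direction: list[float], strength: float) -> list[float]:
    """Latent arithmetic: move along a semantic direction, like one slider."""
    return [z + strength * d for z, d in zip(latent, direction)]


# Usage: blend two "parents", then push the child along a hypothetical gene.
parent_a = random_latent(seed=1)
parent_b = random_latent(seed=2)
child = crossbreed(parent_a, parent_b, mix=0.3)  # 70% parent A, 30% parent B

# Hypothetical "age" direction: real directions are learned (e.g. via PCA);
# here it is just a random unit vector for illustration.
age_direction = normalize(random_latent(seed=3))
older_child = apply_gene(child, age_direction, strength=2.0)
# A StyleGAN2 generator (not included here) would render older_child to pixels.
```

Interpolation keeps the child "between" its parents, while direction arithmetic changes one trait at a time, which is why a slider can age a face without swapping its identity.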

Model: StyleGAN2 (for portraits and faces), BigGAN (for general objects)

Frameworks: TensorFlow, PyTorch

Output Resolution: up to 1024x1024 for portraits (StyleGAN2 reaches this resolution without the progressive growing used to train earlier StyleGAN versions)

Core Features

Latent space image editing

Facial attribute blending

High-resolution image synthesis (Pro users)

Community gene pools and remix functionality

Common Use Cases

Character and avatar design for games and novels

Image dataset augmentation

Visual concept evolution for AI storytelling

Pros

No technical expertise required

Fine control over genetic traits

Supports high-resolution downloads (Pro version)

Cons

Limited model diversity outside StyleGAN-based datasets

Low variation for abstract or surreal scenes

Strengths

Extremely intuitive, no technical knowledge needed

Allows deep genetic-style manipulation of portraits and landscapes

Great for character design and iterative creativity

Built on robust GAN models (StyleGAN2, BigGAN)

Collaborative community with remixable content

Weaknesses

Limited output quality compared to modern diffusion models

Lacks realism or abstract flexibility beyond its trained datasets

No text-to-image capability in its classic GAN-based breeding tools

High-resolution export is locked behind paywalls

RunwayML: AI Tools for Modern Multimedia Creation

RunwayML is a browser-based creative suite that integrates more than 30 machine learning models for video, image, and audio manipulation. It is geared towards creatives who want AI-augmented production tools without writing code.

How It Works (Technical Overview)

RunwayML runs its models on cloud infrastructure. The models are built with frameworks such as TensorFlow and PyTorch, with ONNX serving as an interchange format for exporting them. GPU acceleration via services like AWS and GCP provides near-real-time inference for tasks such as segmentation, keying, text-to-video, and object detection.

Model Hub: Stable Diffusion, CLIP, GFPGAN, ESRGAN, DALL·E, etc.

Deployment: WebRTC-based streaming from server-side GPU inference

Integration: RESTful APIs, Adobe plugin, Python SDK
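Because inference runs on remote GPUs, API-driven generation is usually asynchronous: a client submits a job and polls its status until it finishes. The sketch below shows that submit-and-poll pattern. The `get_status` callable, the job ID, and the status strings (`PENDING`, `RUNNING`, `SUCCEEDED`, `FAILED`) are hypothetical stand-ins, not RunwayML's documented API; check the official API reference for real endpoints and field names.

```python
import time
from typing import Callable


class JobFailed(RuntimeError):
    """Raised when the (hypothetical) backend reports a failed job."""


def wait_for_job(get_status: Callable[[str], dict], job_id: str,
                 poll_interval: float = 2.0, timeout: float = 300.0) -> dict:
    """Poll a job-status function until the job succeeds, fails, or times out.

    `get_status` stands in for an HTTP GET against a task endpoint and must
    return a dict with a "status" key.
    """
    deadline = time.monotonic() + timeout
    while True:
        job = get_status(job_id)
        status = job["status"]
        if status == "SUCCEEDED":
            return job
        if status == "FAILED":
            raise JobFailed(f"job {job_id} failed: {job.get('error')}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job {job_id} still {status} after {timeout}s")
        time.sleep(poll_interval)  # back off before asking again


# Usage with a simulated backend: PENDING, then RUNNING, then SUCCEEDED.
_states = iter(["PENDING", "RUNNING", "SUCCEEDED"])


def fake_get_status(job_id: str) -> dict:
    status = next(_states)
    result = {"id": job_id, "status": status}
    if status == "SUCCEEDED":
        result["output"] = ["https://example.com/video.mp4"]
    return result


finished = wait_for_job(fake_get_status, "job-123", poll_interval=0.01)
print(finished["output"])
```

Passing the status function in as a parameter keeps the polling logic testable offline; in a real pipeline it would wrap an authenticated HTTP request.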

Core Features

Text-to-video generation using diffusion models

Green Screen: U-2-Net based background removal

Frame interpolation via RIFE (Real-Time Intermediate Flow Estimation)

Motion tracking using DeepSORT

Upscaling and face restoration using ESRGAN and GFPGAN
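Background removal tools such as the Green Screen feature above typically produce a per-pixel matte (alpha) with values from 0 to 1, which is then used to composite the subject over a new background with the standard "over" blend: output = alpha × foreground + (1 − alpha) × background. The sketch below shows that compositing step on tiny images stored as nested lists of RGB tuples. It assumes the matte has already been predicted (for example by a segmentation model like U-2-Net); RunwayML's internal pipeline is not documented, so treat this as an illustration of the technique only.

```python
Pixel = tuple[float, float, float]  # RGB channels in the range [0, 1]


def composite(foreground: list[list[Pixel]],
              background: list[list[Pixel]],
              alpha: list[list[float]]) -> list[list[Pixel]]:
    """Blend foreground over background using a per-pixel matte.

    alpha[y][x] = 1.0 keeps the foreground pixel, 0.0 shows the new background,
    and in-between values give soft edges (hair, motion blur).
    """
    out = []
    for fg_row, bg_row, a_row in zip(foreground, background, alpha):
        out_row = []
        for fg, bg, a in zip(fg_row, bg_row, a_row):
            out_row.append(tuple(a * f + (1.0 - a) * b for f, b in zip(fg, bg)))
        out.append(out_row)
    return out


# Usage: a 1x2 image with a red subject, a blue replacement background,
# and a matte that is solid on the left pixel and half-transparent on the right.
fg = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
bg = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]
matte = [[1.0, 0.5]]
print(composite(fg, bg, matte))
# [[(1.0, 0.0, 0.0), (0.5, 0.0, 0.5)]]
```

The quality of a green-screen result depends mostly on the matte; the blend itself is this simple per-pixel weighted average.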

Common Use Cases

Short-form video generation

Virtual production and VFX compositing

AI-driven storytelling pipelines

Pros

Professional-grade tools for post-production

API access for integration with pipelines

Compatible with NLE software (Adobe Premiere Pro, Final Cut Pro)

Cons

May require GPU credits or subscriptions

Learning curve for advanced modules

Strengths

All-in-one suite for AI-powered video, image, and audio editing

Real-time background removal, motion tracking, and frame interpolation

Supports Stable Diffusion, CLIP, GFPGAN, ESRGAN, and more

Professional-grade results with no programming needed

Offers an API, integrations (Adobe, Python), and import of custom-trained models

Weaknesses

Free tier is limited; heavy use requires credits or a paid plan

More complex UI may overwhelm casual creators

Some features can have noticeable latency depending on GPU load

Not designed for pure artistic exploration like Midjourney

Midjourney: The Visionary Artist Among AI Tools

Midjourney is an independent research lab and creative platform that specializes in text-to-image generation through proprietary diffusion models. It focuses on highly stylized, cinematic visuals, often favoring imaginative depth over strict photorealism.

How It Works (Technical Overview)

Midjourney uses a custom diffusion model, likely influenced by Stable Diffusion and CLIP-guided synthesis and fine-tuned on curated datasets for aesthetic quality. Users interact with it through Discord or the newer web app, which serve as front ends to GPU clusters running the image synthesis tasks.

Model Type: Latent Diffusion Model (LDM) with proprietary aesthetic tuning

Interface: Discord bot (the /imagine slash command for prompts) and a newer web app

Performance: Returns a grid of four images at 1024x1024 or higher, typically in under 60 seconds (varies by queue and speed mode)

Prompt Parsing: Multi-prompt weighting (the :: separator) and negative prompting (the --no parameter) for nuanced imagery
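Midjourney's multi-prompt syntax splits a prompt into concepts with `::`, and a number written directly after `::` sets that concept's relative weight (default 1); Midjourney's documentation describes `--no fruit` as equivalent to weighting "fruit" at -0.5, and states that the weights must sum to a positive number. The parser below is a hypothetical illustration of that syntax, written for this article; Midjourney's actual parser and how it combines weighted concepts internally are not public.

```python
import re

# "::" optionally followed immediately by a weight such as 2, 0.5, .5, or -.5
_SEPARATOR = re.compile(r"::(-?(?:\d+(?:\.\d+)?|\.\d+))?")


def parse_multi_prompt(prompt: str) -> list[tuple[str, float]]:
    """Split a Midjourney-style multi-prompt into (text, weight) pairs.

    Text before each "::" is one concept; a number right after "::" is its
    weight (default 1.0). Trailing text after the last "::" gets weight 1.0.
    """
    parts: list[tuple[str, float]] = []
    pos = 0
    for match in _SEPARATOR.finditer(prompt):
        text = prompt[pos:match.start()].strip()
        weight = float(match.group(1)) if match.group(1) is not None else 1.0
        if text:
            parts.append((text, weight))
        pos = match.end()
    tail = prompt[pos:].strip()
    if tail:
        parts.append((tail, 1.0))
    # Negative weights are allowed, but the total must stay positive.
    if sum(weight for _, weight in parts) <= 0:
        raise ValueError("prompt weights must sum to a positive number")
    return parts


print(parse_multi_prompt("space::2 ship"))
# [('space', 2.0), ('ship', 1.0)]
print(parse_multi_prompt("still life gouache painting:: fruit::-.5"))
# [('still life gouache painting', 1.0), ('fruit', -0.5)]
```

The second example is the weighted form of "still life gouache painting --no fruit": the negative weight pushes the image away from that concept.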

Core Features

Text-to-image synthesis with stylization control

Multi-model versioning (v1-v6, including stylization options)

Prompt modifiers: aspect ratio (--ar), stylization degree (--stylize), chaos (--chaos), and seed locking (--seed)

Community-generated image galleries
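The prompt modifiers listed above are appended to the end of a prompt as parameters. The helper below is a hypothetical convenience function (not part of any Midjourney SDK) that assembles them and checks the ranges documented for recent model versions: --stylize 0 to 1000, --chaos 0 to 100, and --seed 0 to 4294967295. It also reduces a target resolution to the whole-number width:height form that --ar expects. Ranges can differ between model versions, so verify them against the current parameter list.

```python
from math import gcd
from typing import Optional


def aspect_ratio(width: int, height: int) -> str:
    """Reduce pixel dimensions to the whole-number W:H form used by --ar."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"


def build_prompt(text: str, *, ar: Optional[str] = None,
                 stylize: Optional[int] = None, chaos: Optional[int] = None,
                 seed: Optional[int] = None) -> str:
    """Append Midjourney-style parameters to a prompt after range checks."""
    params = []
    if ar is not None:
        params.append(f"--ar {ar}")
    if stylize is not None:
        if not 0 <= stylize <= 1000:
            raise ValueError("--stylize must be between 0 and 1000")
        params.append(f"--stylize {stylize}")
    if chaos is not None:
        if not 0 <= chaos <= 100:
            raise ValueError("--chaos must be between 0 and 100")
        params.append(f"--chaos {chaos}")
    if seed is not None:
        if not 0 <= seed <= 4_294_967_295:
            raise ValueError("--seed must be between 0 and 4294967295")
        params.append(f"--seed {seed}")
    return " ".join([text.strip(), *params])


prompt = build_prompt(
    "misty mountain monastery at dawn, cinematic lighting",
    ar=aspect_ratio(1920, 1080), stylize=250, chaos=20, seed=1234,
)
print(prompt)
# misty mountain monastery at dawn, cinematic lighting --ar 16:9 --stylize 250 --chaos 20 --seed 1234
```

Fixing --seed while varying one other parameter is a practical way to see what that parameter changes, since the starting noise stays the same between runs.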

Common Use Cases

Visual development for games and animations

Abstract and fine art compositions

Marketing and ideation mockups

Pros

Output quality superior to most public models

Strong aesthetic alignment for artists

Customizable image structure via prompt engineering

Cons

Discord's interface can feel limiting for some users

Style bias may override specific realism prompts

Strengths

Produces stunning, cinematic, stylized visuals from text prompts

High fidelity and coherence across varied themes

Fast rendering and continual model improvements (v6 and beyond)

Strong community and shared prompt styles

Incredible at interpreting abstract or poetic descriptions

Weaknesses

Discord-centric workflow can be limiting or unintuitive, although a web app is now available

Stylization may overpower detailed prompt control

Limited editing tools: Vary (Region) offers basic masked inpainting, but there is no layer-based editing

Outputs may require post-editing for commercial use

Frequently Asked Questions

What is the main difference between Artbreeder, RunwayML, and Midjourney?

Artbreeder is focused on generative image blending using GANs, ideal for character and portrait design. RunwayML is a professional AI platform for video, image, and audio editing, built for creators and developers alike. Midjourney is an AI art generator that uses text prompts to create stunning, stylized visuals, often used for concept art and creative illustrations.

Can I use Artbreeder, RunwayML, or Midjourney for commercial projects?

Yes, but each platform has its own terms:

Artbreeder allows commercial use with attribution or through Pro plans.

RunwayML supports commercial use depending on your subscription and model usage rights.

Midjourney permits commercial use with a paid plan, but attribution is recommended.

Which tool is best for beginners with no coding background?

All three are beginner-friendly, but in different ways:

Artbreeder is extremely simple and intuitive.

Midjourney is also easy but requires basic familiarity with Discord (or its newer web app).

RunwayML has a steeper learning curve but is still accessible with no coding required.

Do these platforms use the same AI technology?

Not exactly:

Artbreeder uses GANs (StyleGAN2, BigGAN).

RunwayML supports multiple models like Stable Diffusion, CLIP, and custom ML workflows.

Midjourney uses a proprietary diffusion model optimized for stylized text-to-image generation.

Is RunwayML suitable for video production?

Yes! RunwayML is one of the best AI tools for video creators. It offers features like automatic background removal, motion tracking, and generative video (text-to-video with Gen-2, plus newer models such as Gen-3 Alpha and Gen-4), all without traditional editing software.

Can I customize outputs in Artbreeder or Midjourney?

In Artbreeder, you can customize the genes, style, and attributes of the image.

In Midjourney, you can refine results with parameters, stylization levels, and remix options, but it’s less editable than a layer-based tool like Photoshop.

Which is better for creating realistic human faces?

Artbreeder is best for realistic human face blending.

RunwayML can enhance or generate faces via models like GFPGAN or StyleGAN.

Midjourney tends to lean toward artistic or stylized representations of faces.

Choosing the Right AI Creative Companion

Choose Artbreeder for intuitive genetic image editing, especially for character artists and authors.

Choose RunwayML if you're a filmmaker or content creator seeking AI tools for editing, masking, and generative video.

Choose Midjourney if you're a visionary artist or concept designer aiming for stunning text-to-image results with cinematic flair.

Each of these tools represents a leap toward the democratization of high-end creativity through AI. By understanding the technology behind them, creatives can make informed decisions about which platform aligns best with their artistic vision and workflow.


All © Copyright reserved by Accessible-Learning Hub
