Comparing Sora AI, Emu AI Video, and Viggle AI: Which AI Video Tool is Best?

Sora AI vs Emu AI Video vs Viggle AI: a detailed comparison of three leading AI-powered video generation tools. Discover their features, strengths, limitations, and best use cases for filmmakers, content creators, and animators.


Sachin K Chaurasiya

2/13/2025 · 5 min read

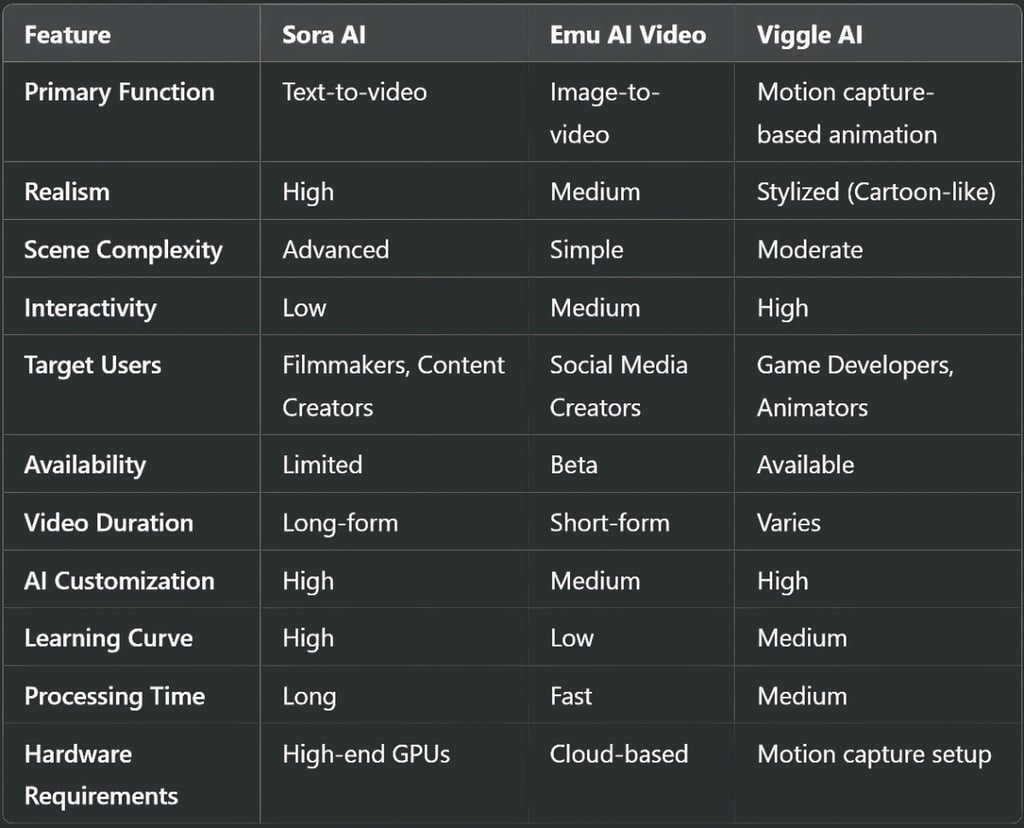

AI-powered video generation is evolving rapidly, and new models keep reshaping how content gets made. Three notable tools are Sora AI (by OpenAI), Emu AI Video (by Meta), and Viggle AI (by the independent startup of the same name, not Tencent). Each has its own capabilities, strengths, and limitations. This article compares them to help you decide which one fits which use case.

What is Sora AI?

Sora AI is OpenAI’s groundbreaking text-to-video model capable of generating high-quality, realistic, and dynamic videos from textual prompts. It is designed to push the boundaries of AI-generated video by creating detailed scenes with complex motion, physics accuracy, and high visual fidelity.

Key Features

Text-to-Video Generation: Converts textual descriptions into visually appealing videos.

Realistic Motion and Physics: Ensures natural movements, object interactions, and scene consistency.

High Resolution: Capable of generating HD-quality videos with impressive detail.

Scene Understanding: Can comprehend and replicate complex prompts accurately.

Multi-Object Interaction: Generates scenes where multiple entities interact seamlessly.

Longer Video Durations: OpenAI reports clips of up to one minute, longer than the short clips typical of the other two tools.

Advanced AI Models: Uses deep learning models trained on vast datasets to understand complex prompts.

Frame-by-Frame Consistency: Maintains smooth transitions between frames for a natural look.
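
To make the idea of frame-by-frame consistency concrete, here is a minimal sketch of one crude way to measure it: the average pixel change between consecutive frames. This is an illustration only and not how Sora AI is built or evaluated; the function name `mean_frame_difference` and the tiny two-pixel "frames" are assumptions made up for the example.

```python
from typing import List, Sequence

# One frame, flattened to grayscale pixel values in [0, 1] (illustrative format).
Frame = Sequence[float]


def mean_frame_difference(frames: List[Frame]) -> float:
    """Average absolute per-pixel change between consecutive frames.

    Lower values mean smoother transitions; 0.0 means all frames are identical.
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    total = 0.0
    for prev, curr in zip(frames, frames[1:]):
        if not prev or len(prev) != len(curr):
            raise ValueError("frames must be non-empty and equally sized")
        # Mean absolute difference for this pair of frames.
        total += sum(abs(a - b) for a, b in zip(prev, curr)) / len(prev)
    return total / (len(frames) - 1)


smooth = [[0.0, 0.0], [0.1, 0.1], [0.2, 0.2]]   # gradual brightening
flicker = [[0.0, 0.0], [1.0, 1.0], [0.0, 0.0]]  # abrupt flashing
print(mean_frame_difference(smooth) < mean_frame_difference(flicker))  # True
```

A low score alone does not prove quality (a frozen video scores 0.0), so a proxy like this is only useful alongside a visual check.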

Strengths

High-quality, natural motion in video generation.

Handles complex scenes with multiple objects and interactions.

Longer video duration than competitors.

Limitations

Compute Intensive: Generation requires significant computational power, which can make it costly or impractical for small-scale users.

Limited Availability: Access is restricted to paid ChatGPT plans and is not offered in every region.

Ethical Concerns: Potential misuse for deepfakes and misleading content.

High Data Requirement: Requires vast amounts of high-quality training data to function effectively.

Longer Processing Time: Due to the high complexity, video rendering takes more time compared to simpler models.

Use Cases

Filmmaking & Pre-Visualization: Directors and cinematographers can use Sora AI to generate realistic storyboards and pre-visualization sequences.

Advertising & Marketing: Brands can create visually stunning ad campaigns without expensive production.

Content Creation for Social Media: Influencers can generate high-quality video content from simple text descriptions.

Educational & Training Videos: AI-generated content can be used for simulations and e-learning.

Gaming & Virtual Worlds: Developers can quickly generate cinematic cutscenes or game environment previews.

Technology Stack

A diffusion model built on a transformer architecture, as described in OpenAI's technical report.

Large-scale training on high-quality video datasets.

Motion and physical plausibility learned from training data rather than computed by an explicit physics engine.

Model Type: Transformer-based AI trained for text-to-video generation.

Input Type: Text prompts describing scenes, motion, and environment.

Output Type: HD video clips with realistic physics and movement.

What is Emu AI Video?

Meta’s Emu AI Video generates short videos conditioned on a still image. Given only a text prompt, it first generates an image and then animates it. This approach brings static visuals to life with added motion, making it a useful tool for content creators and marketers.

Key Features

Image-to-Video Transformation: Converts a single image into a moving video.

Creative Animation Capabilities: Adds dynamic elements like movement and effects to still images.

Designed for Social Media: Optimized for platforms like Instagram and Facebook.

Fast Processing: Generates short clips quickly with minimal input.

AI-Powered Effects: Enhances visual content with AI-generated transitions and special effects.

User-Friendly Interface: Accessible for beginners with an intuitive design.

Style Customization: Allows users to apply different animation styles, such as slow-motion effects and artistic filters.

Cloud-Based Processing: Does not require local hardware for video generation.

Strengths

Fast video generation from static images.

Social media-friendly format.

User-friendly and accessible to non-technical users.

Limitations

Limited Customization: Relies heavily on the provided image and cannot generate full-length videos from scratch.

Less Realistic Motion: While animations are smooth, they may lack the natural motion physics of Sora AI.

Short Video Lengths: Mostly suitable for short clips, making it less ideal for cinematic productions.

Resolution Constraints: Output resolution is lower than that of Sora AI, limiting its use in high-quality productions.

Use Cases

Social Media Content: Users can bring static images to life for Instagram, TikTok, and Facebook.

E-commerce & Product Visualization: Businesses can animate product images for better engagement.

Short-Form Storytelling: Creators can transform still visuals into engaging motion-based narratives.

Interactive UI/UX Animations: Designers can animate product demos or interfaces for better presentation.

Technology Stack

Diffusion models for image-conditioned video synthesis.

AI-powered visual effects engine.

Optimized for social media platforms (Meta ecosystem).

Model Type: Image-conditioned diffusion model with AI-driven motion synthesis.

Input Type: Still images with optional movement descriptions.

Output Type: Short animated videos with basic transitions and effects.

What is Viggle AI?

Viggle AI, developed by the startup of the same name, focuses on AI-powered motion capture and video animation. It specializes in turning static images into animated avatars and in making real-time, motion-based video edits.

Key Features

Motion Capture-Based Animation: Can animate characters using motion tracking data.

Real-Time Character Rigging: Helps users animate avatars instantly.

Highly Interactive: Enables video game and virtual avatar applications.

Ideal for the Entertainment Industry: Used for gaming, virtual influencers, and interactive content.

Pose Estimation and Gesture Recognition: Accurately translates human movements into digital animations.

Multi-Character Animation Support: Can animate multiple avatars simultaneously.

AI-Driven Lip Syncing: Synchronizes character mouth movements with voice input for realistic animations.

Augmented Reality (AR) Integration: Supports AR-based applications in gaming and social media.

Strengths

Ideal for gaming and interactive applications.

Supports real-time motion capture.

High accuracy in tracking body movements.

Limitations

Not Designed for General Video Generation: Unlike Sora AI, it does not generate videos from scratch.

Limited Realism: Geared more toward stylized or cartoonish animations rather than hyper-realistic video.

Hardware Dependency: Requires specialized equipment for the best motion capture accuracy.

Limited Scene Complexity: More suitable for character animations rather than full cinematic experiences.

Use Cases

Gaming & Character Animation: Developers can create motion-tracked animated characters in real-time.

Virtual Influencers & Avatars: AI-generated influencers can interact dynamically with audiences.

Live Streaming & Augmented Reality (AR): Content creators can use AI to power animated avatars during live streams.

3D Animation & VFX Industry: Studios can use AI-driven motion capture for movies, commercials, and visual effects.

Gesture-Based UI Interactions: AI-generated animations can enhance user experiences in VR/AR applications.

Technology Stack

AI-driven motion tracking algorithms.

Pose estimation and gesture recognition technology.

Machine learning models for facial and body movement synthesis.

Model Type: AI-based motion tracking and character animation system.

Input Type: Static images, live human motion, and gesture-based inputs.

Output Type: Animated avatars and motion-tracked digital characters.
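
Pose estimation, listed in the stack above, typically outputs 2D keypoints (shoulder, elbow, wrist, and so on), and an animation system then turns those into joint rotations. The sketch below shows one basic step under that assumption: computing the angle at a joint from three keypoints. It is a generic geometry example, not Viggle AI's actual pipeline, and the names `joint_angle`, `shoulder`, `elbow`, and `wrist` are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) keypoint in image coordinates


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at joint b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("keypoints must not coincide with the joint")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical keypoints: upper arm along the x-axis, forearm bent upward.
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
print(joint_angle(shoulder, elbow, wrist))  # a right angle, about 90 degrees
```

Applying the resulting angle to the matching joint of a rigged character, frame after frame, is what makes the avatar follow the tracked person.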

Which AI Video Model is Best for You?

If you want ultra-realistic, high-quality AI-generated videos → Sora AI is the best choice.

If you need to animate still images quickly for social media → Emu AI Video is the most effective option.

If you're into gaming, virtual influencers, or interactive media → Viggle AI excels in motion-based animation.

For complex scene creation and long-duration videos → Sora AI is ideal.

For quick and visually appealing animations → Emu AI Video is a great fit.

For real-time animation with motion tracking → Viggle AI is the go-to choice.

For high-end production studios → Sora AI provides the most cinematic results.

For social media marketers looking for engagement-focused videos → Emu AI Video is the best pick.
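
The recommendations above amount to a simple lookup from goal to tool, which can be sketched as follows. The goal labels (such as `animate_still_images`) and the `recommend` helper are hypothetical names invented for this example; the input and output descriptions are taken from each tool's Technology Stack section.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class VideoTool:
    name: str
    input_type: str
    output_type: str


TOOLS: Dict[str, VideoTool] = {
    "sora": VideoTool("Sora AI", "text prompts", "HD video clips"),
    "emu": VideoTool("Emu AI Video", "still images", "short animated videos"),
    "viggle": VideoTool(
        "Viggle AI", "static images and motion input", "animated avatars"
    ),
}

# Hypothetical goal labels, each mapped to the tool the article recommends.
RECOMMENDATION: Dict[str, str] = {
    "realistic_video": "sora",
    "long_complex_scenes": "sora",
    "studio_production": "sora",
    "animate_still_images": "emu",
    "social_media_marketing": "emu",
    "gaming_or_avatars": "viggle",
    "realtime_motion_tracking": "viggle",
}


def recommend(goal: str) -> VideoTool:
    """Return the recommended tool for a goal, or raise ValueError."""
    try:
        return TOOLS[RECOMMENDATION[goal]]
    except KeyError:
        raise ValueError(f"unknown goal: {goal!r}") from None


print(recommend("animate_still_images").name)  # Emu AI Video
```

Real projects rarely have a single goal, so treat a table like this as a starting point for shortlisting rather than a final answer.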

Sora AI, Emu AI Video, and Viggle AI are each revolutionizing AI-driven video generation in unique ways. Sora AI leads in realism and complexity, Emu AI Video enhances social media content, and Viggle AI empowers motion-based animations. As these technologies continue to evolve, we can expect even more sophisticated, accessible, and efficient AI-powered video tools in the near future.


All © Copyright reserved by Accessible-Learning Hub
