Baby AGI, LangChain, and Auto-GPT: The Trio Powering the Future of Autonomous AI Agents

Discover the power of Baby AGI, LangChain, and Auto-GPT—three revolutionary AI frameworks transforming the way we build autonomous agents. Dive deep into their architecture, tools, real-world use cases, and advanced capabilities to unlock the future of intelligent automation. Perfect for developers, tech enthusiasts, and innovators.

Sachin K Chaurasiya

4/9/2025 | 5 min read

In the fast-moving world of artificial intelligence, we're entering an era where AI systems don't just respond to queries—they think, plan, and act autonomously. Leading this transformation are three groundbreaking open-source tools: Baby AGI, LangChain, and Auto-GPT.

These frameworks have become the foundation for building autonomous agents, often referred to as "AI workers" or "digital employees," capable of performing complex, multi-step tasks with minimal or no human input.

This article takes you beyond the buzzwords to explore the technical foundations, unique functionalities, and real-world potential of these tools.

Baby AGI: A Minimalist Autonomous Agent Framework

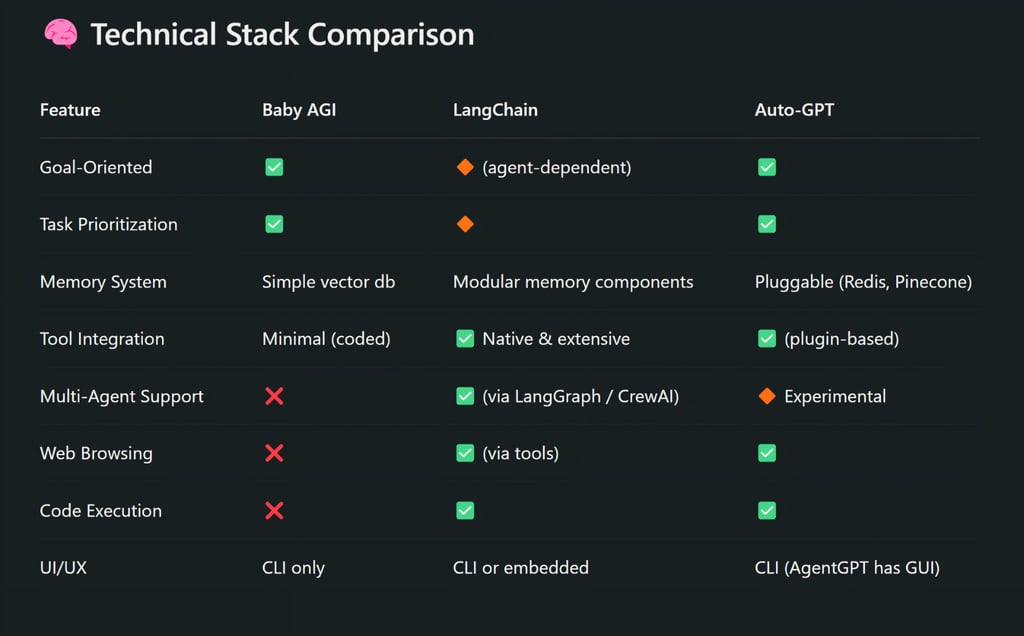

Baby AGI was developed by Yohei Nakajima as an experiment in task automation using LLMs. Despite its simplicity, it has become a reference implementation of an AI agent capable of recursive task generation and execution.

Core Architecture

Language Model: Typically GPT-3.5 or GPT-4 (via OpenAI API).

Vector Store: Stores task results and context for memory recall using Pinecone, Chroma, FAISS, or Weaviate.

Task List Manager: Handles dynamic creation, reordering, and deletion of tasks.

Execution Agent: Executes tasks by prompting the LLM and storing the output.

Prompt Engineering: Uses templated prompts with variables for goals, tasks, context, and priorities.

Technical Flow

Input: A single high-level goal, e.g., "Build a competitor analysis of top SaaS products."

Create Task: Generate a prioritized task queue.

Execute: Pass the current task to the LLM and retrieve the result.

Store: Save the output in a vector database with metadata.

Enrich Queue: Generate new tasks based on output.

Repeat: Continue until no tasks remain or a goal is met.
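The loop above can be sketched in a few lines of plain Python. This is a minimal illustration, not Baby AGI's actual code: `fake_llm` and `propose_new_tasks` are hypothetical stand-ins for real model calls, a plain list stands in for the vector store, and the queue is first-in, first-out, so the prioritization step is left out.

```python
from collections import deque


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; returns a canned string."""
    return f"result for: {prompt}"


def propose_new_tasks(result: str, depth: int, max_depth: int) -> list[str]:
    """Hypothetical task-creation step: one follow-up task until max_depth."""
    if depth >= max_depth:
        return []
    return [f"follow up on ({result})"]


def run_agent(goal: str, max_depth: int = 2, max_steps: int = 10) -> list[dict]:
    tasks = deque([(f"make a plan for: {goal}", 0)])  # (task, depth) pairs
    memory: list[dict] = []  # assumption: a list instead of a vector store
    steps = 0
    while tasks and steps < max_steps:
        task, depth = tasks.popleft()
        result = fake_llm(f"Goal: {goal}\nTask: {task}")       # Execute
        memory.append({"task": task, "result": result})        # Store
        for new_task in propose_new_tasks(result, depth, max_depth):
            tasks.append((new_task, depth + 1))                # Enrich Queue
        steps += 1                                             # Repeat
    return memory


history = run_agent("Build a competitor analysis of top SaaS products")
```

The `max_steps` cap is a deliberate design choice: without a hard limit, an agent that keeps generating follow-up tasks would never terminate.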

Use Case Highlights

Project planning and management automation

SEO content ideation workflows

Research compilation agents

Autonomous report generators

LangChain: The Infrastructure Layer for LLM Applications

LangChain is a Python and JavaScript framework for developing applications powered by language models and connected tools. Created by Harrison Chase, it lets developers chain LLMs with external data sources, tools, logic, and memory to build complex, interactive, and dynamic applications.

Building Blocks

Chains: Modular blocks that run sequences of prompts or calls (e.g., LLMChain, SequentialChain, MapReduceChain).

Agents: Autonomous, decision-making units that select which tools or functions to use.

Memory Modules:

Toolkits: Pre-built integrations such as:

Web search and scraping (SerpAPI, Selenium)

File systems

Shell commands

Code interpreters (e.g., Python REPL)
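To make the idea of a chain concrete, here is a minimal sketch in plain Python. It does not use LangChain's API; `make_chain`, `build_prompt`, `call_model`, and `to_upper` are hypothetical names, and `call_model` only imitates an LLM. The point is the shape: each step receives the previous step's output.

```python
from typing import Callable

Step = Callable[[dict], dict]


def make_chain(*steps: Step) -> Step:
    """Compose steps so each one receives the previous step's output."""
    def run(state: dict) -> dict:
        for step in steps:
            state = step(state)
        return state
    return run


def build_prompt(state: dict) -> dict:
    return {**state, "prompt": f"Summarize in one line: {state['text']}"}


def call_model(state: dict) -> dict:
    # Hypothetical stand-in for an LLM call: keeps the first five words.
    words = state["text"].split()[:5]
    return {**state, "summary": " ".join(words)}


def to_upper(state: dict) -> dict:
    return {**state, "summary": state["summary"].upper()}


chain = make_chain(build_prompt, call_model, to_upper)
output = chain({"text": "LangChain lets developers chain LLMs with external tools and memory"})
```

Passing a dictionary between steps keeps every intermediate value (here, the prompt) available for later steps or for debugging.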

Technical Features

Prompt Templates: Modular prompts with named placeholders, using Python f-string-style formatting by default (Jinja2 templates are also supported).

Callbacks: Built-in monitoring for debugging and logging tool calls.

Streaming Support: Token-by-token output for real-time applications.

Multi-agent Support: Coordinated agent workflows with cooperative goals.
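Three of these features (templates, callbacks, and streaming) fit together naturally, as the following sketch suggests. It is plain Python rather than LangChain code: `render`, `stream_tokens`, and `run_with_callback` are hypothetical helpers, and the "stream" simply replays the prompt word by word where a real model would stream its answer.

```python
from typing import Callable, Iterator

TEMPLATE = "You are a {role}. Answer the question: {question}"


def render(template: str, **variables: str) -> str:
    """Fill named placeholders, as a prompt template would."""
    return template.format(**variables)


def stream_tokens(text: str) -> Iterator[str]:
    """Hypothetical token stream: yields whitespace-separated words."""
    for token in text.split():
        yield token


def run_with_callback(prompt: str, on_token: Callable[[str], None]) -> str:
    collected = []
    for token in stream_tokens(prompt):
        on_token(token)          # callback fires once per streamed token
        collected.append(token)
    return " ".join(collected)


log: list[str] = []
prompt = render(TEMPLATE, role="financial analyst", question="What moved the market?")
final = run_with_callback(prompt, log.append)
```

Here the callback only appends to a list, but the same hook could write to a logger or push tokens to a web client as they arrive.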

Developer Use Cases

Intelligent chatbots with persistent memory

PDF or SQL data querying agents

Autonomous research tools

AI agents that use real-time APIs (e.g., financial market data)

LangChain is essentially the middleware that allows LLMs to become powerful software agents capable of interfacing with the world.

Auto-GPT: Autonomous Goal-Driven Execution Engine

Auto-GPT is an AI agent built on GPT-4 that autonomously breaks goals into sub-tasks, uses tools and memory, and self-corrects along the way. Developed by Significant Gravitas (Toran Bruce Richards), it was one of the first widely used proofs of concept for a fully autonomous GPT agent.

How It Works

Goal Input: User provides a primary objective.

Planning Module: Auto-GPT creates a list of tasks and subtasks.

Execution Loop: It self-prompts and feeds results into memory.

Reflection: Reviews outcomes and revises tasks accordingly.

Tooling: Can run Python code, browse the web, write files, etc.
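The plan, execute, and reflect cycle can be illustrated with a small sketch. This is not Auto-GPT's implementation: `plan`, `execute`, and `reflect` are hypothetical functions with hard-coded behavior (the "draft" task is made to fail once so the retry path is visible), where the real agent would prompt the model at each stage.

```python
def plan(goal: str) -> list[str]:
    """Hypothetical planner; a real agent would ask the LLM for this list."""
    return [f"research {goal}", f"draft {goal}", f"review {goal}"]


def execute(task: str, attempt: int) -> dict:
    """Hypothetical executor: 'draft' tasks fail on their first attempt."""
    ok = not (task.startswith("draft") and attempt == 0)
    return {"task": task, "ok": ok, "attempt": attempt}


def reflect(outcome: dict) -> bool:
    """Return True if the task should be retried."""
    return not outcome["ok"]


def run(goal: str, max_attempts: int = 2) -> list[dict]:
    memory: list[dict] = []
    for task in plan(goal):
        for attempt in range(max_attempts):
            outcome = execute(task, attempt)
            memory.append(outcome)       # every result is fed into memory
            if not reflect(outcome):
                break                    # success: move on to the next task
    return memory


trace = run("market report")
```

Bounding retries with `max_attempts` matters in practice, since an agent that reflects and retries without a limit can loop indefinitely on a task it cannot complete.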

Technical Stack

OpenAI GPT-4 API

Memory Integration: Pinecone, Redis, or local vector stores.

Tool Usage:

File I/O

Web Browsing (via headless Chrome or requests)

API calls

Shell scripting

Voice Output (optional): Uses pyttsx3 for TTS output.

Practical Applications

Automated market analysis reports

Full-stack content creators (writing + SEO + publishing)

Coding agents (write + test + debug)

Business automation bots (CRM updates, scheduling, reminders)

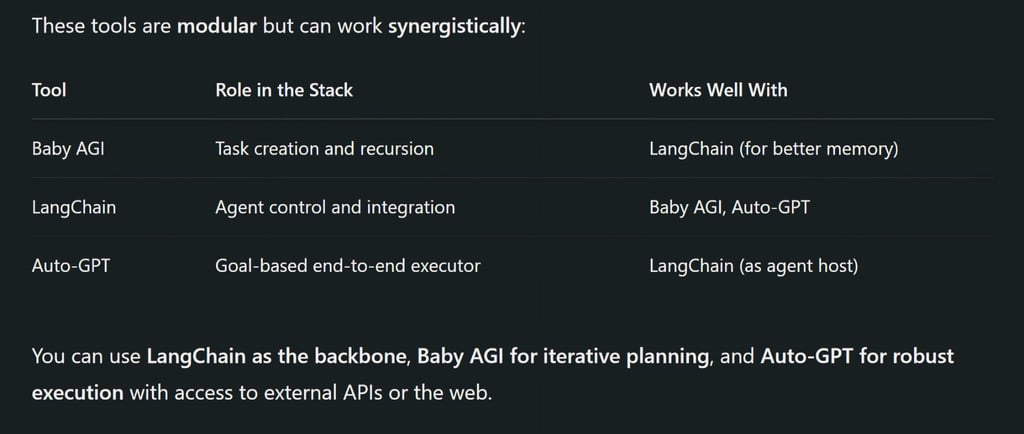

Interoperability: How Baby AGI, LangChain, and Auto-GPT Complement Each Other

Deeper Technical Insights and Add-Ons

Advanced Memory Management in Agents

All three frameworks rely heavily on memory systems to function effectively. Here's how memory can be supercharged:

Memory Types

Episodic Memory: Stores task results in chronological order.

Semantic Memory: Organizes memory based on meaning and concept similarity (often stored in vector DBs).

Working Memory: Used during a single run/session.

Long-Term Memory: Persisted across sessions using tools like:

ChromaDB

Weaviate (offers semantic search through a GraphQL API)

FAISS (Facebook AI Similarity Search, fast for local use)

Pinecone (scalable and cloud-native)

Tip: When building agents with Baby AGI or LangChain, use chunking + embeddings (OpenAI or HuggingFace) + cosine similarity search for best memory retrieval accuracy.
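The tip above can be tried out without any external service. The sketch below assumes a toy embedding (plain word counts) in place of an OpenAI or HuggingFace embedding model, and a fixed word-count chunker; `chunk`, `embed`, `cosine`, and `retrieve` are illustrative names, not library functions.

```python
import math
from collections import Counter


def chunk(text: str, size: int = 8) -> list[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real agent would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


notes = (
    "Pinecone is a managed vector database for the cloud. "
    "FAISS is a library for fast local similarity search. "
)
chunks = chunk(notes, size=9)
best = retrieve("fast local similarity search", chunks)
```

With a real embedding model, only `embed` changes; the chunking and cosine ranking steps stay the same, which is why this pattern ports easily between vector stores.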

LangChain's Specialized Agents and Chains

LangChain has a vast library of chains and agent types. Some that deserve a spotlight:

Specialized Chains

RetrievalQA (named RetrievalQAChain in LangChain.js): Combines document Q&A with semantic search.

MapReduceChain: Useful for summarizing large sets of documents.

ConversationalRetrievalChain: Chatbots with context memory.
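The map-reduce pattern behind MapReduceChain is easy to see in miniature. The sketch below is plain Python, not the LangChain class: `summarize` is a hypothetical stand-in that truncates text where a real chain would call the model.

```python
def summarize(text: str, max_words: int = 6) -> str:
    """Hypothetical stand-in for an LLM summary: keep the first few words."""
    return " ".join(text.split()[:max_words])


def map_reduce_summary(documents: list[str]) -> str:
    partials = [summarize(doc) for doc in documents]   # map: one summary per document
    combined = " | ".join(partials)
    return summarize(combined, max_words=20)           # reduce: summarize the summaries


docs = [
    "Baby AGI loops over a task queue until the goal is met.",
    "LangChain chains prompts, tools, and memory into applications.",
    "Auto-GPT plans, executes, and reflects on its own sub-tasks.",
]
summary = map_reduce_summary(docs)
```

The map step is what makes the pattern useful for large document sets: each document is summarized independently, so no single call has to fit the whole corpus into the context window.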

Specialized Agents

Tool-Using Agent: Decides when to use external tools (web browsing, APIs).

ChatAgent: For multi-turn interactions with memory and intermediate steps.

MRKL Agent (Modular Reasoning, Knowledge, and Language): Picks the right tool from a toolset based on the task.
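Tool selection, the core of the MRKL idea, can be sketched as a registry plus a router. The example below is an illustration only: `choose_tool` is a hypothetical keyword rule standing in for the LLM's decision, and both tools are deliberately tiny.

```python
from typing import Callable


def calculator(query: str) -> str:
    # Only handles "a + b" with integers; enough for the illustration.
    left, right = query.split("+")
    return str(int(left) + int(right))


def lookup(query: str) -> str:
    facts = {"mrkl": "Modular Reasoning, Knowledge, and Language"}
    return facts.get(query.strip().lower(), "unknown")


TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator, "lookup": lookup}


def choose_tool(query: str) -> str:
    """Hypothetical router; a real agent would let the LLM pick the tool."""
    return "calculator" if "+" in query else "lookup"


def run_agent(query: str) -> str:
    tool_name = choose_tool(query)
    return TOOLS[tool_name](query)


answer_math = run_agent("2 + 40")
answer_fact = run_agent("MRKL")
```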

Auto-GPT’s Plugins and Tools Ecosystem

As Auto-GPT has evolved, developers have contributed plugins and enhancements. Some highlights include:

Popular Plugins

Auto-GPT-Plugins (community repo):

Web Browser (using Playwright or Selenium)

File Management (create/read/update local files)

Weather API, Crypto Data, GitHub Access

Autonomous Trading Bots

Text-to-Speech & Speech-to-Text (Whisper, pyttsx3)

Task Execution Strategies

Chain of Thought prompting: Improves step-by-step logic.

ReAct (Reason + Act): The LLM reasons through tasks, decides on action, executes, and observes the outcome.
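A ReAct loop alternates between model output and tool observations until the model emits a final answer. The sketch below assumes a scripted model (`scripted_llm`, hypothetical) and a single `add` tool; the `Thought:` / `Action:` / `Observation:` / `Final Answer:` text format is a common convention, chosen here for illustration.

```python
import re


def scripted_llm(transcript: str) -> str:
    """Hypothetical model: acts once, then answers using the observation."""
    match = re.search(r"Observation: (\d+)", transcript)
    if match:
        return f"Thought: I have the result.\nFinal Answer: {match.group(1)}"
    return "Thought: I should add the numbers.\nAction: add[19, 23]"


def add(args: str) -> str:
    return str(sum(int(x) for x in args.split(",")))


TOOLS = {"add": add}


def react(question: str, max_turns: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_turns):
        reply = scripted_llm(transcript)                  # Reason
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:")[1].strip()
        action = re.search(r"Action: (\w+)\[(.*)\]", reply)
        if action is None:
            break                                         # nothing to execute
        tool, args = action.groups()
        observation = TOOLS[tool](args)                   # Act
        transcript += f"\nObservation: {observation}"     # Observe
    return "no answer"


answer = react("What is 19 + 23?")
```

The growing transcript is the key design element: each turn, the model sees its earlier thoughts, actions, and observations, which is what lets it decide whether to act again or answer.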

Related & Alternative Projects You Should Know

AgentGPT

Web-based interface for deploying Auto-GPT-like agents in your browser.

More visual and beginner-friendly.

Uses LangChain + GPT-4.

SuperAGI

A complete developer-first platform for building and deploying autonomous agents.

Comes with GUI, analytics, scheduling, agent marketplace, and tool/plugin framework.

OpenAgents

Open-source project for managing multi-agent systems.

Enables collaborative agents with role-based assignments.

CrewAI

A new LangChain-based framework for deploying teams of agents (like a project manager, coder, or researcher).

Agent Orchestration and Multi-Agent Collaboration

Instead of just one agent, imagine several AI personas collaborating on a project. That’s where Agent Orchestration comes in.

LangGraph (by LangChain): Enables multi-agent systems using a graph-based execution plan.

AutoGen (by Microsoft): Framework for building collaborative LLM agents with defined roles, message passing, and feedback loops.
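A graph-based execution plan can be pictured as named nodes that transform a shared state, plus edges that decide which node runs next. The sketch below is plain Python and does not use LangGraph's or AutoGen's API; `NODES`, `EDGES`, and `run_graph` are hypothetical names. The conditional edge shows how a graph, unlike a linear chain, can loop.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], State]


def researcher(state: State) -> State:
    return {**state, "notes": f"notes on {state['topic']}"}


def writer(state: State) -> State:
    revisions = state.get("revisions", 0) + 1
    return {**state, "draft": f"draft from {state['notes']}", "revisions": revisions}


def next_after_writer(state: State) -> Optional[str]:
    # Conditional edge: loop back to the writer until two revisions exist.
    return "writer" if state["revisions"] < 2 else None


NODES: dict[str, Node] = {"researcher": researcher, "writer": writer}
EDGES: dict[str, Callable[[State], Optional[str]]] = {
    "researcher": lambda state: "writer",
    "writer": next_after_writer,
}


def run_graph(start: str, state: State) -> State:
    current: Optional[str] = start
    while current is not None:
        state = NODES[current](state)      # run the node
        current = EDGES[current](state)    # follow the outgoing edge
    return state


final_state = run_graph("researcher", {"topic": "agent frameworks"})
```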

Agent Roles

Manager Agent: Assigns and reprioritizes tasks.

Worker Agents: Perform specialized jobs (coding, research, writing).

Critic Agent: Reviews and refines outputs.

Memory Agent: Tracks everything and stores facts.
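The four roles can be wired together with simple message passing, as in the following sketch. All behavior here is hypothetical: the manager's task split, the workers' outputs, and the critic's acceptance rule are hard-coded placeholders for what would be LLM calls in a real system.

```python
from collections import deque


def manager(goal: str) -> list[dict]:
    """Hypothetical manager: splits a goal into role-tagged tasks."""
    return [
        {"role": "research", "task": f"collect sources on {goal}"},
        {"role": "writing", "task": f"write summary of {goal}"},
    ]


WORKERS = {
    "research": lambda task: f"[sources] {task}",
    "writing": lambda task: f"[text] {task}",
}


def critic(output: str) -> bool:
    """Hypothetical review rule: accept any output that carries a tag."""
    return output.startswith("[")


def run_team(goal: str) -> list[dict]:
    inbox = deque(manager(goal))       # manager assigns tasks
    memory: list[dict] = []            # the memory agent's record
    while inbox:
        message = inbox.popleft()
        output = WORKERS[message["role"]](message["task"])   # worker acts
        memory.append({**message, "output": output, "approved": critic(output)})
    return memory


record = run_team("vector databases")
```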

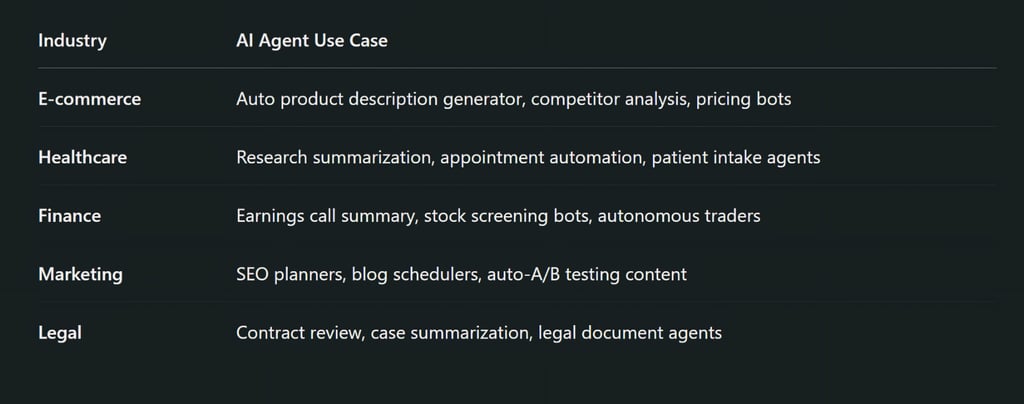

Enterprise & Commercial Use Cases

Suggested Developer Projects (Beginner to Advanced)

Beginner:

Build a GPT-powered note-taker using LangChain + Pinecone.

Set up Baby AGI to generate content ideas for blog posts.

Intermediate:

Create an Auto-GPT agent that creates YouTube titles, scripts, and thumbnails from trends.

Develop a LangChain agent that can analyze financial data and generate summaries.

Advanced:

Build a LangGraph multi-agent system (researcher + writer + editor).

Deploy a serverless Auto-GPT clone using Docker + Redis + Vercel.

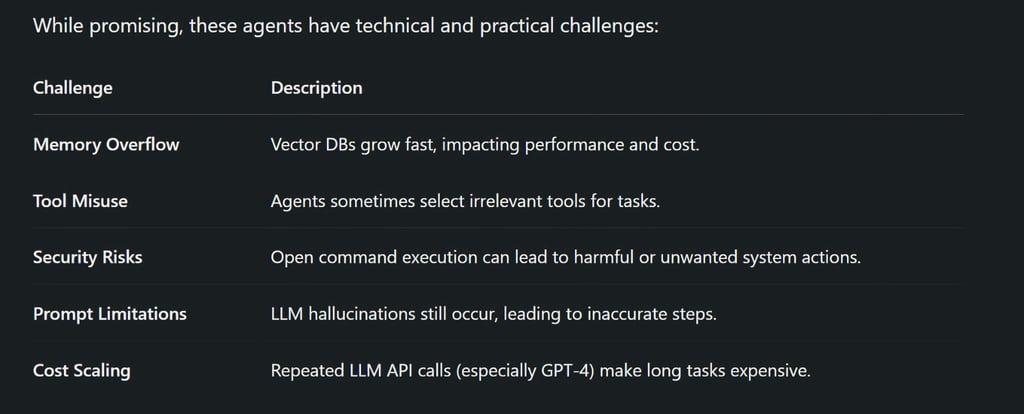

Current Limitations of Autonomous Agents

Advanced developers mitigate the limitations of autonomous agents with:

rate limiting

prompt tuning

guardrails

task validation models
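Two of these mitigations, rate limiting and task validation, are simple enough to sketch directly. The example below is an assumption-laden illustration: `RateLimiter` is a basic sliding-window limiter, and `validate_task` uses a hypothetical deny-list where a production system might use a separate validation model.

```python
import time


class RateLimiter:
    """Allow at most max_calls within any window of `period` seconds."""

    def __init__(self, max_calls: int, period: float, clock=time.monotonic):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self.calls: list[float] = []

    def allow(self) -> bool:
        now = self.clock()
        # Drop timestamps that have fallen out of the window.
        self.calls = [t for t in self.calls if now - t < self.period]
        if len(self.calls) >= self.max_calls:
            return False
        self.calls.append(now)
        return True


BLOCKED_PHRASES = {"rm -rf", "drop table"}  # hypothetical deny-list


def validate_task(task: str, max_len: int = 200) -> bool:
    """Guardrail: reject empty, overlong, or deny-listed tasks."""
    lowered = task.lower()
    if not task.strip() or len(task) > max_len:
        return False
    return not any(phrase in lowered for phrase in BLOCKED_PHRASES)


limiter = RateLimiter(max_calls=2, period=60.0)
decisions = [limiter.allow() for _ in range(3)]
```

In an agent loop, both checks would run before each model or tool call: `limiter.allow()` caps API spend, and `validate_task` stops obviously unsafe tasks before they reach a shell or database tool.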

The Road Ahead: Towards Real General-Purpose Agents

With the upcoming advancements in GPT-5, multi-modal models, and context window expansion (100k+ tokens), we’re moving closer to building truly autonomous, general-purpose AI agents.

Future upgrades may include:

Self-healing agents: Debug and fix their own logic.

Inter-agent collaboration: Teams of agents working on large goals.

Embedded emotional intelligence: Better understanding of human intent.

Cross-platform deployment: Running on mobile, desktop, and cloud.

Baby AGI, LangChain, and Auto-GPT are not just tools; they're the DNA of the autonomous AI future. They bridge the gap between passive, reactive chatbots and proactive, thinking agents.

Whether you're a researcher, developer, or entrepreneur, understanding how these tools work—and how they work together—is key to staying ahead in the new age of AI.

All © Copyright reserved by Accessible-Learning Hub