AI Tools Compared: How DALL·E 3, Stable Diffusion 3, and Runway Gen-3 Stand Out!

Explore a side-by-side comparison of DALL·E 3, Stable Diffusion 3, and Runway Gen-3. Uncover their unique features, strengths, limitations, and ideal use cases to determine the best AI tool for your creative needs.


Sachin K Chaurasiya

1/15/2025 · 6 min read

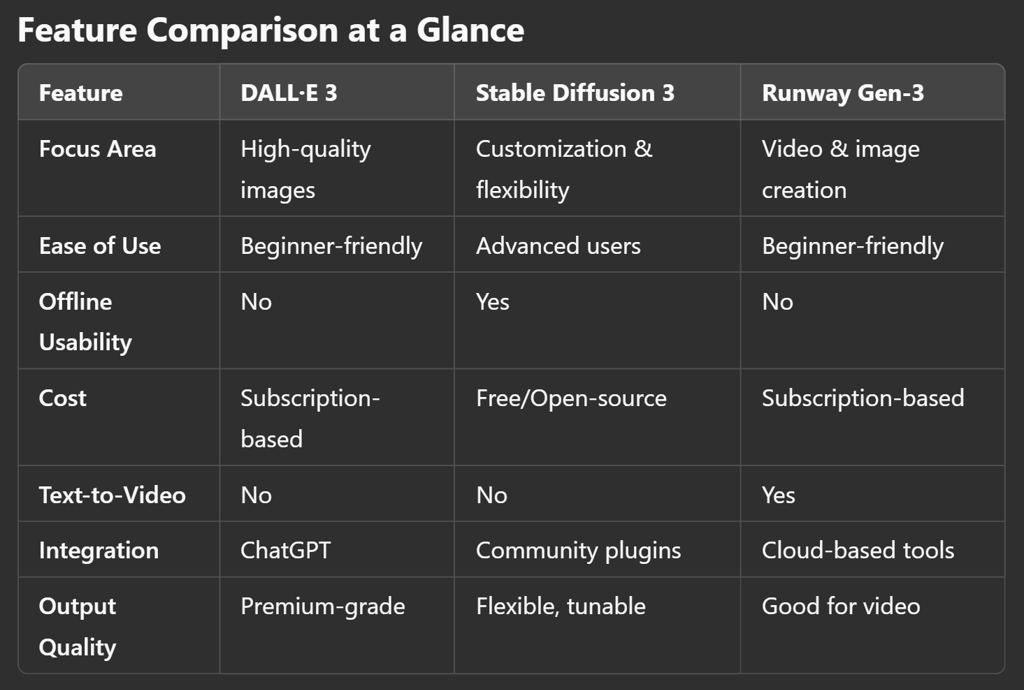

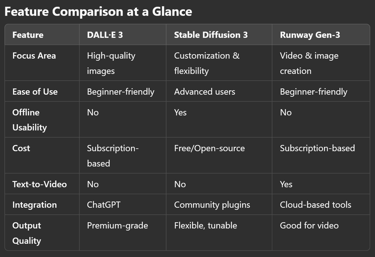

In the rapidly evolving landscape of AI-powered image and video generation, three frontrunners stand out: DALL·E 3 by OpenAI, Stable Diffusion 3 by Stability AI, and Runway Gen-3 by Runway. Each model takes a different approach to generative AI, catering to different user needs and creative workflows. This article compares their core features, strengths, limitations, and ideal use cases to help you decide which tool best fits your creative goals.

DALL-E 3: Pioneering Precision & Integration

DALL-E 3 builds upon the foundation of its predecessors, offering remarkable advancements in detail, coherence, and the ability to follow complex prompts. Developed by OpenAI, it has become a favorite among professionals for generating high-quality, artistically nuanced visuals.

Key Features

Enhanced Prompt Understanding: DALL-E 3 excels in interpreting intricate text prompts, including nuanced details, relationships between objects, and stylistic instructions.

Integration with ChatGPT: Because DALL·E 3 is built into ChatGPT, users can iterate on their ideas conversationally and refine prompts without needing expertise in prompt crafting.

Safety Features: OpenAI has implemented guardrails intended to prevent misuse of the model and encourage ethical use.

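Image Editing: In ChatGPT, users can select part of a generated image and describe the change they want. Outpainting (extending an image beyond its original borders, useful for creative storytelling and design) was a headline feature of the DALL·E 2 editor, so check whether the interface you use supports it with DALL·E 3.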

High Accessibility: DALL·E 3 is available through ChatGPT, OpenAI's API, and Microsoft's Bing Image Creator, which makes it easy to adopt across industries from marketing to fine arts.
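For readers who reach DALL·E 3 through OpenAI's API rather than ChatGPT, here is a minimal sketch of preparing an Images API request. It only builds and validates the keyword arguments. The allowed sizes, quality and style values, and the one-image-per-request limit reflect OpenAI's documentation for `dall-e-3` at the time of writing and may change. `build_dalle3_request` is a hypothetical helper, not part of any SDK. The commented lines show how the payload could be passed to the official `openai` Python SDK, whose DALL·E 3 responses include a `revised_prompt` field showing how the prompt was rewritten.

```python
# Hypothetical helper: assemble and validate a DALL·E 3 request for the OpenAI Images API.
# Allowed values are assumptions based on OpenAI's docs for model="dall-e-3"; re-check them.

DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
DALLE3_QUALITIES = {"standard", "hd"}
DALLE3_STYLES = {"vivid", "natural"}


def build_dalle3_request(prompt: str, size: str = "1024x1024",
                         quality: str = "standard", style: str = "vivid") -> dict:
    """Return keyword arguments for images.generate, raising ValueError on invalid input."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported size {size!r}; expected one of {sorted(DALLE3_SIZES)}")
    if quality not in DALLE3_QUALITIES:
        raise ValueError(f"unsupported quality {quality!r}")
    if style not in DALLE3_STYLES:
        raise ValueError(f"unsupported style {style!r}")
    # DALL·E 3 generates a single image per request, so n is fixed at 1.
    return {"model": "dall-e-3", "prompt": prompt, "size": size,
            "quality": quality, "style": style, "n": 1}


if __name__ == "__main__":
    payload = build_dalle3_request("A watercolor lighthouse at dusk", size="1792x1024")
    print(payload)
    # With the official SDK installed and OPENAI_API_KEY set, the call could look like:
    #   from openai import OpenAI
    #   result = OpenAI().images.generate(**payload)
    #   print(result.data[0].revised_prompt, result.data[0].url)
```

Validating locally before the network call turns a rejected request into an immediate, readable error.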

Strengths

High-quality output with intricate details and cohesive composition.

User-friendly, thanks to ChatGPT integration.

Ideal for professional artists, marketers, and content creators needing precision.

Limitations

Dependency on OpenAI's platform: the model runs only on hosted services and cannot be used offline.

More restrictive safety filters than open models, which might hinder certain creative projects.

Heavy use generally requires a paid ChatGPT plan or per-image API billing, which might be a barrier for casual users.

Ideal For

Artists and designers seeking premium-quality visuals.

Users requiring detailed and precise interpretations of complex prompts.

Businesses looking to incorporate AI into creative workflows.

Additional Insights

Upcoming Features: OpenAI has hinted at future updates that may include improved multimodal capabilities, allowing DALL·E 3 to integrate more effectively with video content creation tools.

Use in Education: Increasingly used in educational settings for creating illustrative content for learning materials, offering a novel approach to teaching visual arts.

Architecture

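DALL·E 3 is a diffusion-based image model rather than a variant of GPT-4. OpenAI's technical report credits much of its improved prompt following to training on highly descriptive synthetic captions, and in ChatGPT, GPT-4 rewrites and expands user prompts before they reach the image model, which helps it capture the context within prompts.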

Model Refinements

Improved latent diffusion with multimodal alignment for better image-text coherence.

Better handling of ambiguous words (such as homonyms) and of complex, multi-step instructions in prompts.

Tokenizer Improvements

OpenAI has not published details of a new tokenizer; its report attributes the model's better handling of prompt details, such as stylistic elements and complex object placement, mainly to the detailed captions used in training.

Safety & Content Filters

Robust filters to detect and suppress the generation of harmful, inappropriate, or biased content using OpenAI’s moderation tools.

Scaling Infrastructure

OpenAI has not published the hardware behind DALL·E 3. The model runs on OpenAI's cloud infrastructure (hosted on Microsoft Azure), so users need no local GPU, and it is not offered for user fine-tuning.

Stable Diffusion 3: Open-Source Versatility

Stable Diffusion 3 is the third major generation of Stability AI's model family (later extended by Stable Diffusion 3.5), emphasizing openness, flexibility, and community-driven innovation. Its openly released weights have made it a favorite among developers and tech-savvy users who value customization.

Key Features

Open-Source Accessibility: Users can download the weights and run the model locally, giving full control over usage and customization within the terms of Stability AI's community license.

Extensive Community Support: A vibrant community contributes to plugins, extensions, and additional tools, enhancing its capabilities.

ControlNet and Depth Maps: ControlNet models, released separately from the base model, offer advanced control over image generation by leveraging external inputs like sketches, poses, or depth data.

High Scalability: Can be scaled for enterprise use cases with the right infrastructure.

Modular Design: The model is compatible with various third-party integrations, allowing tailored workflows.
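ControlNet, mentioned in the feature list above, attaches a trainable branch to a frozen diffusion model through "zero convolutions": 1x1 convolutions whose weights and bias start at zero, so the base model's behavior is untouched until training moves them. The toy sketch below shows that idea at a single spatial position using plain lists. The feature values are made up, and the control features are simply given here, whereas real ControlNet computes them with a trainable copy of the encoder that sees both the latent input and the condition image.

```python
# Toy illustration of ControlNet's zero-convolution idea at one spatial position.
# At a single pixel, a 1x1 convolution is a channel-mixing matrix plus a bias.


def zero_conv(out_channels: int, in_channels: int) -> dict:
    """Create a 1x1 convolution whose weights and bias are initialised to exactly zero."""
    return {
        "weight": [[0.0] * in_channels for _ in range(out_channels)],
        "bias": [0.0] * out_channels,
    }


def apply_conv1x1(conv: dict, features: list) -> list:
    """Apply the 1x1 convolution to one feature vector."""
    return [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(conv["weight"], conv["bias"])
    ]


def inject_control(base_features: list, control_features: list, conv: dict) -> list:
    """Add the control branch, passed through the zero conv, to the frozen base features."""
    residual = apply_conv1x1(conv, control_features)
    return [x + r for x, r in zip(base_features, residual)]


if __name__ == "__main__":
    base = [1.0, 2.0]        # made-up features from the frozen base model
    control = [5.0, -3.0]    # made-up features derived from, e.g., a depth map
    conv = zero_conv(2, 2)
    print(inject_control(base, control, conv))  # identical to base at initialisation
    conv["weight"] = [[0.1, 0.0], [0.0, 0.1]]    # pretend training has updated the weights
    print(inject_control(base, control, conv))
```

Starting from zero means the first training steps cannot damage what the pretrained model already knows; the control signal fades in only as the weights are learned.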

Strengths

Unmatched flexibility for tech-savvy users and developers.

Lower cost of entry compared to proprietary models.

Continuous innovation driven by an active open-source community.

Strong support for integrating with custom software and pipelines.

Limitations

Steeper learning curve for beginners.

Requires high-performance hardware for local deployment.

Output quality, while impressive, may require more fine-tuning than DALL·E 3 to reach comparable results.

Lack of an officially integrated interface for prompt refinement.

Ideal For

Developers, researchers, and power users who prioritize customization.

Projects requiring integration into custom workflows or applications.

Organizations seeking open-source solutions for cost efficiency.

Additional Insights

Experimental Plugins: New plugins for AR/VR content creation are being developed within the community, opening doors to immersive applications.

Enterprise Applications: Companies are exploring Stable Diffusion for industrial design prototyping and architectural visualization due to its scalability.

Architecture

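Unlike earlier Stable Diffusion versions, which used a U-Net denoiser, Stable Diffusion 3 uses a Multimodal Diffusion Transformer (MMDiT) that operates on compressed image latents and is trained with a rectified-flow objective.

Conditions on three text encoders: two CLIP (Contrastive Language-Image Pretraining) models, OpenAI's CLIP ViT-L and OpenCLIP ViT-bigG, plus Google's T5-XXL, which improves the handling of long prompts and of text rendered inside images.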
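Stable Diffusion 3's paper describes training with rectified flow: a clean latent and a noise sample are joined by a straight line, and the network learns to predict the constant velocity along that line. The sketch below builds one training pair on plain lists. The logit-normal timestep sampling follows the choice reported in the SD3 paper, but its parameters here (mean 0, std 1) are assumptions, the "latent" is two made-up numbers, and a zero vector stands in for the transformer's prediction.

```python
import math
import random


def sample_timestep_logit_normal(rng: random.Random, mean: float = 0.0, std: float = 1.0) -> float:
    """Draw t in (0, 1) as sigmoid(Normal(mean, std)), favouring mid-range noise levels."""
    return 1.0 / (1.0 + math.exp(-rng.gauss(mean, std)))


def rectified_flow_pair(x0: list, noise: list, t: float) -> tuple:
    """Return (z_t, v_target) on the straight path z_t = (1 - t) * x0 + t * noise."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    z_t = [(1.0 - t) * a + t * e for a, e in zip(x0, noise)]
    # d z_t / d t = noise - x0 is constant along the path; it is the regression target.
    v_target = [e - a for a, e in zip(x0, noise)]
    return z_t, v_target


def flow_matching_loss(v_pred: list, v_target: list) -> float:
    """Mean squared error between predicted and target velocities."""
    return sum((p - q) ** 2 for p, q in zip(v_pred, v_target)) / len(v_target)


if __name__ == "__main__":
    rng = random.Random(0)
    x0 = [0.5, -1.0]                              # made-up "clean latent"
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    t = sample_timestep_logit_normal(rng)
    z_t, v_target = rectified_flow_pair(x0, noise, t)
    # A real model predicts v from (z_t, t, text embeddings); zeros are a placeholder guess.
    print(t, z_t, flow_matching_loss([0.0, 0.0], v_target))
```

Because the target path is straight, a well-trained model can be sampled in relatively few integration steps.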

Fine-Grained Customization

Users can train their own fine-tuned models using DreamBooth or LoRA (Low-Rank Adaptation) techniques.

Supports ControlNet, a technique first developed for earlier Stable Diffusion versions, which lets users guide image generation with external information such as poses, depth maps, or sketches.
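LoRA, mentioned above, freezes a pretrained weight matrix W (d x k) and learns a low-rank update: W' = W + (alpha / r) * B A, where B is d x r, A is r x k, and B starts at zero so training begins from the original model. The sketch below shows the merge and the parameter savings with nested lists. The helper names are hypothetical, and real trainers (for example in Hugging Face diffusers) apply this to attention layers with tensor libraries.

```python
def matmul(a: list, b: list) -> list:
    """Plain nested-list matrix product: (m x n) times (n x p) gives (m x p)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_merge(w: list, b: list, a: list, alpha: float) -> list:
    """Return W + (alpha / r) * B @ A with W: d x k, B: d x r, A: r x k."""
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable parameters added by a rank-r adapter on a d x k weight matrix."""
    return r * (d + k)


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]
    a = [[0.1, 0.2]]                                # r = 1, k = 2
    print(lora_merge(w, [[0.0], [0.0]], a, 1.0))    # B = 0 at init: W is unchanged
    print(lora_merge(w, [[1.0], [2.0]], a, 1.0))    # after "training" B
    print(lora_param_count(1024, 1024, 8), 1024 * 1024)  # adapter vs. full matrix
```

For a 1024 x 1024 layer at rank 8, the adapter trains about 1.6% as many parameters as full fine-tuning, which is why LoRA files are small and cheap to share.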

Sampling Techniques

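Because SD3 is trained with rectified flow, it is sampled with flow-matching ODE solvers. The reference Hugging Face diffusers pipeline uses a flow-matching Euler scheduler by default, and some front ends adapt other samplers, such as deterministic DPM-Solver++ variants, to trade speed against quality.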
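A flow-matching Euler sampler integrates dz/dt = v(z, t) from pure noise (t = 1) to a clean sample (t = 0). The sketch below pairs it with a shifted timestep schedule, t' = shift * t / (1 + (shift - 1) * t), which spends more steps at high noise levels; `shift=3.0` is an assumption matching the value commonly used for SD3 Medium. An "oracle" velocity toward one known target stands in for the trained network, so the example only demonstrates the integration loop.

```python
def shifted_sigmas(num_steps: int, shift: float = 3.0) -> list:
    """Times from 1 (noise) to 0 (clean), warped by t' = shift*t / (1 + (shift - 1)*t)."""
    ts = [1.0 - i / num_steps for i in range(num_steps + 1)]
    return [shift * t / (1.0 + (shift - 1.0) * t) for t in ts]


def euler_sample(z: list, velocity_fn, sigmas: list) -> list:
    """Integrate dz/dt = v(z, t) from sigmas[0] down to sigmas[-1] with explicit Euler steps."""
    for t_cur, t_next in zip(sigmas[:-1], sigmas[1:]):
        v = velocity_fn(z, t_cur)
        z = [zi + (t_next - t_cur) * vi for zi, vi in zip(z, v)]
    return z


def oracle_velocity(x0: list):
    """Hypothetical stand-in for a trained model: exact velocity of the straight path to x0."""
    def velocity(z: list, t: float) -> list:
        # On z_t = (1 - t) * x0 + t * noise, the velocity noise - x0 equals (z_t - x0) / t.
        return [(zi - xi) / t for zi, xi in zip(z, x0)]
    return velocity


if __name__ == "__main__":
    sigmas = shifted_sigmas(4)
    print([round(s, 3) for s in sigmas])
    print(euler_sample([1.0, -2.0], oracle_velocity([0.3, 0.7]), sigmas))
```

With an exact velocity on a straight path, Euler lands on the target regardless of step count; a real network's errors are what make more steps, or higher-order solvers, worthwhile.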

Memory Efficiency

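Reduced-precision weights (FP16 or BF16), CPU offloading, and optionally dropping the large T5 text encoder lower VRAM use, so the 2-billion-parameter SD3 Medium can run on consumer GPUs with about 12 GB of memory (e.g., NVIDIA RTX 3060). The larger SD3.5 Large (about 8 billion parameters) typically needs more memory or quantization.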
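The memory claims above follow from simple arithmetic: weight memory is roughly parameter count times bytes per parameter, and halving precision halves it. The sketch below covers the diffusion transformer's weights only; text encoders (T5-XXL alone is several gigabytes), the VAE, and activations come on top. The parameter counts are rounded, and the 80% headroom in `fits_on_gpu` is an arbitrary assumption, not a measured figure.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(num_params: float, dtype: str, vram_gb: float, headroom: float = 0.8) -> bool:
    """Crude check: weights must fit in a fraction of VRAM, leaving room for everything else."""
    return weight_memory_gb(num_params, dtype) <= vram_gb * headroom


if __name__ == "__main__":
    models = {"SD3 Medium (~2B)": 2e9, "SD3.5 Large (~8B)": 8e9}  # rounded counts
    for name, params in models.items():
        for dtype in ("fp32", "fp16"):
            gb = weight_memory_gb(params, dtype)
            print(f"{name} {dtype}: {gb:.1f} GB, fits a 12 GB card: {fits_on_gpu(params, dtype, 12)}")
```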

Custom Training APIs

The surrounding tooling, such as the Hugging Face diffusers training scripts, supports loading custom datasets and building workflows to train specialized models, making Stable Diffusion a preferred choice for AI researchers and developers.
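Before any fine-tuning run, images have to be matched with their captions. One common convention in community training tools is a same-named `.txt` file next to each image. The hypothetical helper below collects such pairs with the standard library and skips images that lack a caption. Other tools expect different layouts (for example a metadata file), so adapt it to whichever trainer you use.

```python
import tempfile
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def pair_images_with_captions(folder) -> list:
    """Return sorted (image_path, caption) pairs; images without a .txt caption are skipped."""
    pairs = []
    for image_path in sorted(Path(folder).iterdir()):
        if image_path.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        caption_path = image_path.with_suffix(".txt")
        if caption_path.is_file():
            caption = caption_path.read_text(encoding="utf-8").strip()
            pairs.append((image_path, caption))
    return pairs


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "fox.png").write_bytes(b"")                     # placeholder image bytes
        (root / "fox.txt").write_text("a red fox in snow", encoding="utf-8")
        (root / "owl.jpg").write_bytes(b"")                     # no caption: skipped
        (root / "notes.md").write_text("ignored", encoding="utf-8")
        for path, caption in pair_images_with_captions(root):
            print(path.name, "->", caption)
```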

Runway Gen-3: The Filmmaker’s Dream

Runway Gen-3 stands out by targeting video creators and animators. Runway's platform also offers image generation, but Gen-3's real strength lies in generating AI-powered video, which sets it apart from competitors focused solely on still images.

Key Features

Text-to-Video Capabilities: Users can generate short clips (typically 5 or 10 seconds for Gen-3 Alpha) directly from text prompts or from a starting image, a significant capability for content creators.

Video Editing Tools: Includes features like background replacement, inpainting, and style transfer for videos.

Cloud-Based Platform: Runway operates entirely in the cloud, eliminating the need for powerful local hardware.

Collaboration Features: Designed with team collaboration in mind, allowing multiple users to work on the same project.

Generative Effects: Offers tools to add AI-generated effects to existing videos, such as style blending and motion tracking.

Strengths

Unique focus on video generation and editing.

Easy-to-use interface tailored for creators without technical expertise.

Cloud-based accessibility makes it highly scalable.

Increasing adoption in creative industries like filmmaking and social media marketing.

Limitations

Limited image generation quality compared to DALL-E 3 and Stable Diffusion 3.

Subscription costs can add up for heavy users.

Still maturing in terms of detailed text-to-video rendering.

Ideal For

Video creators, animators, and marketers who need dynamic content.

Users who prioritize video editing and AI-powered storytelling.

Teams looking for collaborative creative tools.

Additional Insights

Expanding Video Length: Runway Gen-3 is experimenting with generating longer videos, aiming to support projects like short films and commercials.

3D Capabilities: Runway has hinted at integrating 3D modeling features to complement its video creation tools.

Video-to-Video Transformation

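Runway Gen-3 excels at transforming input videos into entirely different styles. Runway has not published Gen-3's architecture in detail, but its earlier Gen-1 model was published as a video diffusion model, and Gen-3 is generally understood to be diffusion-based rather than built on generative adversarial networks (GANs).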

Includes temporal consistency algorithms to maintain smooth transitions between frames.
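Runway's temporal consistency algorithms are not public, but the problem they address, flicker between frames, can be made concrete with a naive proxy: the average absolute per-pixel change between consecutive frames. The sketch below uses nested lists of grayscale values in [0, 1]; `mean_abs_frame_difference` is a hypothetical helper. It ignores motion, so a smoothly moving camera also scores high; serious evaluations usually warp frames with optical flow before comparing them.

```python
def mean_abs_frame_difference(frames: list) -> float:
    """Average absolute per-pixel change between consecutive frames (lower = steadier)."""
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        for prev_row, cur_row in zip(prev, cur):
            for p, c in zip(prev_row, cur_row):
                total += abs(c - p)
                count += 1
    return total / count


if __name__ == "__main__":
    steady = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(3)]   # three identical 2x2 frames
    flicker = [[[0.0, 0.0]], [[1.0, 1.0]], [[0.0, 0.0]]]    # 1x2 frames alternating black/white
    print(mean_abs_frame_difference(steady), mean_abs_frame_difference(flicker))
```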

Motion Dynamics AI

Runway has not disclosed how Gen-3 recognizes and preserves motion flow across scenes; video diffusion models commonly rely on temporal embeddings and attention layers across frames rather than recurrent neural networks (RNNs).

Generative Training

Trained on diverse datasets of real-world and animated video clips, offering extensive stylistic diversity for creators.

Pipeline Infrastructure

Runs on high-performance cloud GPU instances to render high-definition video; Runway has not published which frameworks it uses internally.

Runway describes Gen-3 Alpha as trained jointly on videos and images but has not disclosed dataset sizes; the resulting weights underpin its motion recognition, object tracking, and style transfer features.

Edge Detection & Masking

Supports input videos with edge-detection layers for selective transformations (e.g., only altering the background or specific objects). This is powered by edge-mask datasets and preprocessing techniques.
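Runway's masking pipeline is not public, but the general idea of edge-based selective editing can be sketched with the classic Sobel operator: compute a gradient-magnitude map, threshold it into a mask, and apply a transformation only where the mask is set. The example below works on one grayscale frame stored as nested lists; the helper names and the threshold are illustrative assumptions. In practice, object or background masks usually come from segmentation models, with edges serving as boundary cues.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def correlate3x3_at(img: list, kernel: list, y: int, x: int) -> float:
    """Apply a 3x3 kernel centred on (y, x) as a correlation (sign does not affect magnitude)."""
    return sum(kernel[j][i] * img[y + j - 1][x + i - 1] for j in range(3) for i in range(3))


def sobel_magnitude(img: list) -> list:
    """Gradient magnitude for interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = correlate3x3_at(img, SOBEL_X, y, x)
            gy = correlate3x3_at(img, SOBEL_Y, y, x)
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def edge_mask(img: list, threshold: float) -> list:
    """True where the gradient magnitude reaches the threshold."""
    return [[m >= threshold for m in row] for row in sobel_magnitude(img)]


def apply_where(img: list, mask: list, transform) -> list:
    """Transform only the masked pixels, leaving the rest of the frame untouched."""
    return [[transform(p) if m else p for p, m in zip(row, mrow)] for row, mrow in zip(img, mask)]


if __name__ == "__main__":
    frame = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]   # dark left half, bright right half
    mask = edge_mask(frame, threshold=1.0)
    for row in apply_where(frame, mask, lambda p: 0.5):
        print(row)
```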

Compatibility

Supports real-time collaboration workflows through Runway's cloud-based environment. Includes integration with Adobe After Effects, Premiere Pro, and Blender.

Emerging Technical Trends Across the Models

Cross-Modality Learning

All three tools are advancing in cross-modality learning (text-to-image-to-video) to enhance multi-step creativity pipelines.

DALL·E 3 and Stable Diffusion focus on fine details, while Runway Gen-3 emphasizes smooth transitions in motion.

Fine-Tuning Potential

Stable Diffusion leads in custom fine-tuning, allowing researchers to create personalized generative models.

DALL·E 3 remains a closed system: OpenAI's proprietary setup offers high precision but no user fine-tuning or model customization.

Runway Gen-3 offers industry-specific video workflows, making it appealing to professionals in film and advertising.

Open Source vs Closed Source

Stable Diffusion 3 has openly downloadable weights released under Stability AI's community license, encouraging community development.

DALL-E 3 is proprietary, focusing on a controlled ecosystem.

Runway Gen-3 is also proprietary but takes a middle path, offering API access and subscription plans for professionals.

Ethics & Governance

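Because Stable Diffusion 3 can run locally without enforced content filters, it allows largely unrestricted creation (bounded mainly by Stability AI's acceptable use policy), raising questions about ethical use.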

DALL-E 3 employs robust moderation systems, ensuring safety and compliance with OpenAI's policies.

Runway Gen-3’s applications are focused on professional media, reducing misuse but limiting public experimentation.

Choose DALL-E 3 if you prioritize precision and artistic quality in still images and want a user-friendly, integrated experience.

Opt for Stable Diffusion 3 if you need flexibility, customization, and open-source accessibility, especially for tech-driven projects.

Go with Runway Gen-3 if you’re focused on video creation and editing, making it ideal for filmmakers and social media marketers.

Each tool excels in its domain, so understanding your creative goals is key to making the right choice. Regardless of which you choose, these models exemplify the remarkable capabilities of generative AI in transforming how we create and visualize content.


All © Copyright reserved by Accessible-Learning Hub
